How to guides

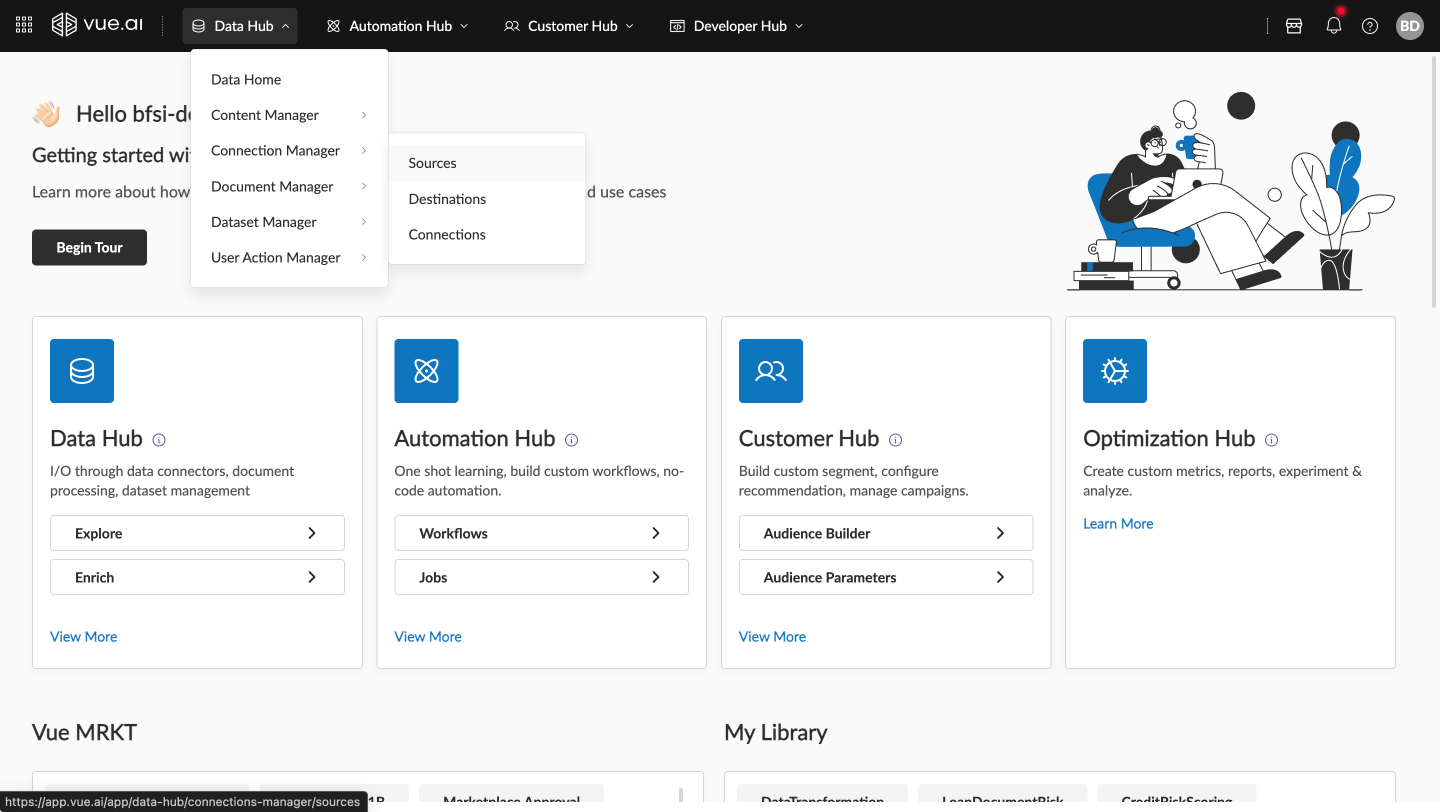

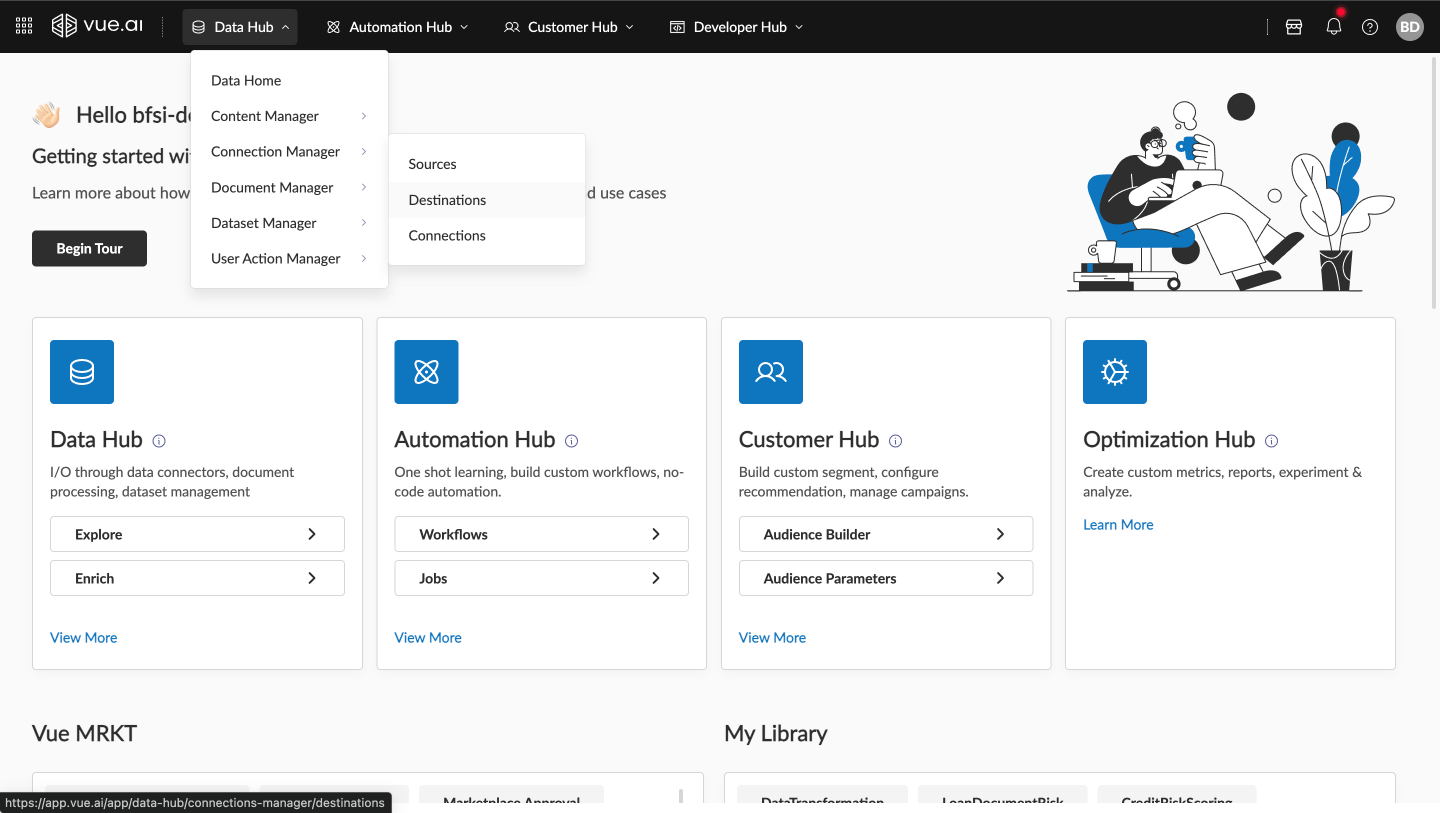

Data Hub

The Data Hub provides comprehensive data integration and management capabilities for seamlessly integrating and unifying enterprise data. It centralizes data management for enhanced operational efficiency, enables effortless upload and organization of documents at scale, and unlocks insights with robust business intelligence reporting tools.

Overview

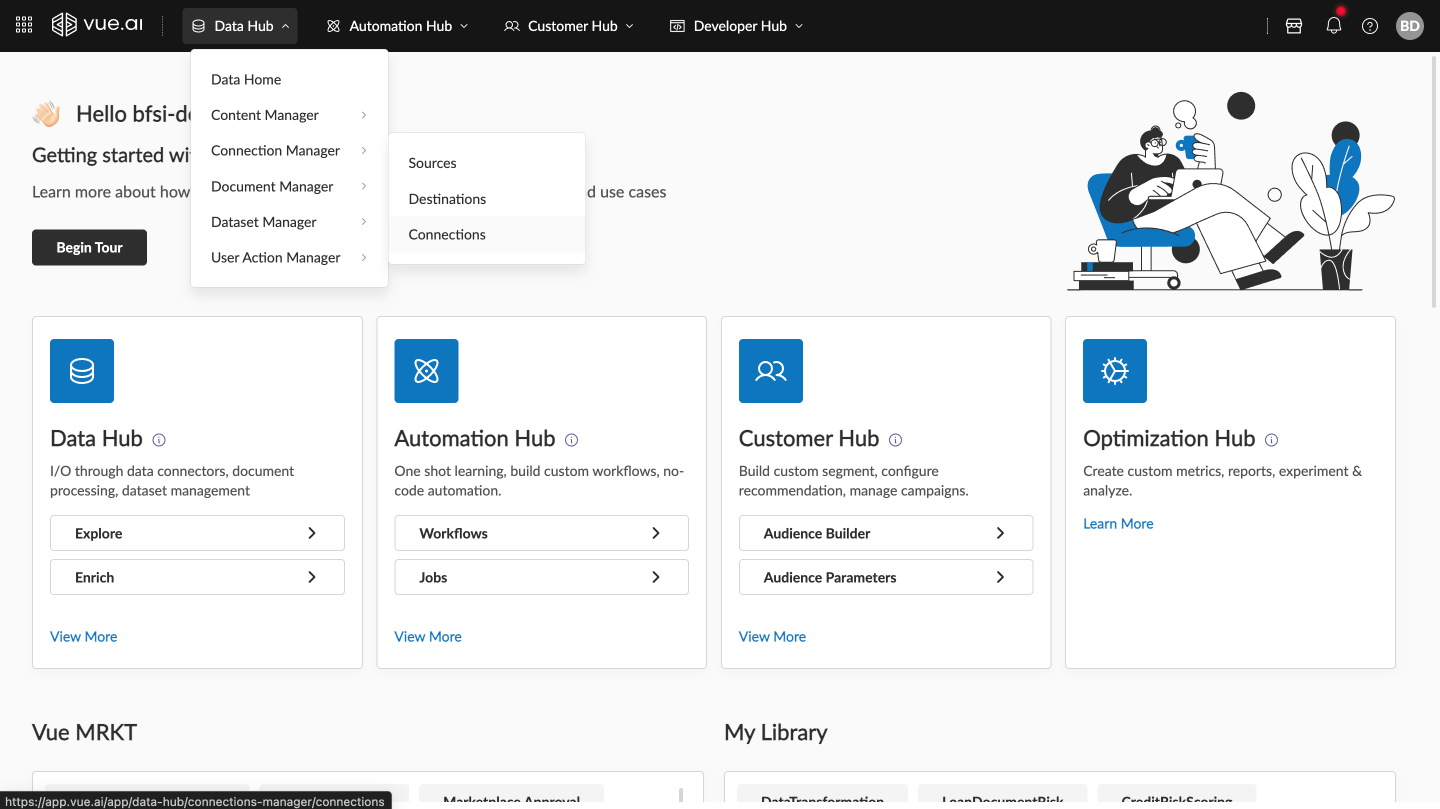

The Data Hub serves as the central platform for all data-related operations in Vue.ai, offering three main components:

- Connection Manager: Connect to any data source or destination with support for 250+ data sources and 200+ destinations

- Document Manager: Intelligent document processing with AI-powered extraction and classification capabilities

- Dataset Manager: Comprehensive dataset management with profiling, versioning, and relationship modeling

Connection Manager

The Connection Manager is the I/O layer of the Vue Platform. It connects to any data source or destination through a simple user interface and supports data in all formats and sizes, from any data system. Data is brought into the system in the form of datasets.

With Sources, users can:

- Establish Connection to Any Data Source: Read data from over 250 supported data sources out of the box

- Custom Sources: Build custom sources via the Connector Development Kit (CDK), a low-code interface

With Destinations, users can:

- Establish Connection to Any Data Destination: Write data to over 200 supported data destinations out of the box

- Custom Destinations: Build custom destinations via the Connector Development Kit (CDK), a low-code interface

With Connections, users can:

- Establish Link Between Source & Destination: Create connections between any source and destination

- Configure Sync Frequency: Set how often data should be synchronized

- Define the Stream Configuration: Specify the stream and its configuration for syncing

Data Ingestion Using Connectors

This guide explains how to configure data sources and destinations and how to establish connections between them for data flow in Vue.ai's Connection Manager.

Getting Started

Prerequisites Before beginning, ensure that:

- Basic data concepts like schemas, appending, and de-duplication are understood

- Connector Concepts: sources, destinations, and CRON expressions are understood

- Administrator access to the Vue.ai platform is available

- Credentials for the data sources and destinations to be configured are available


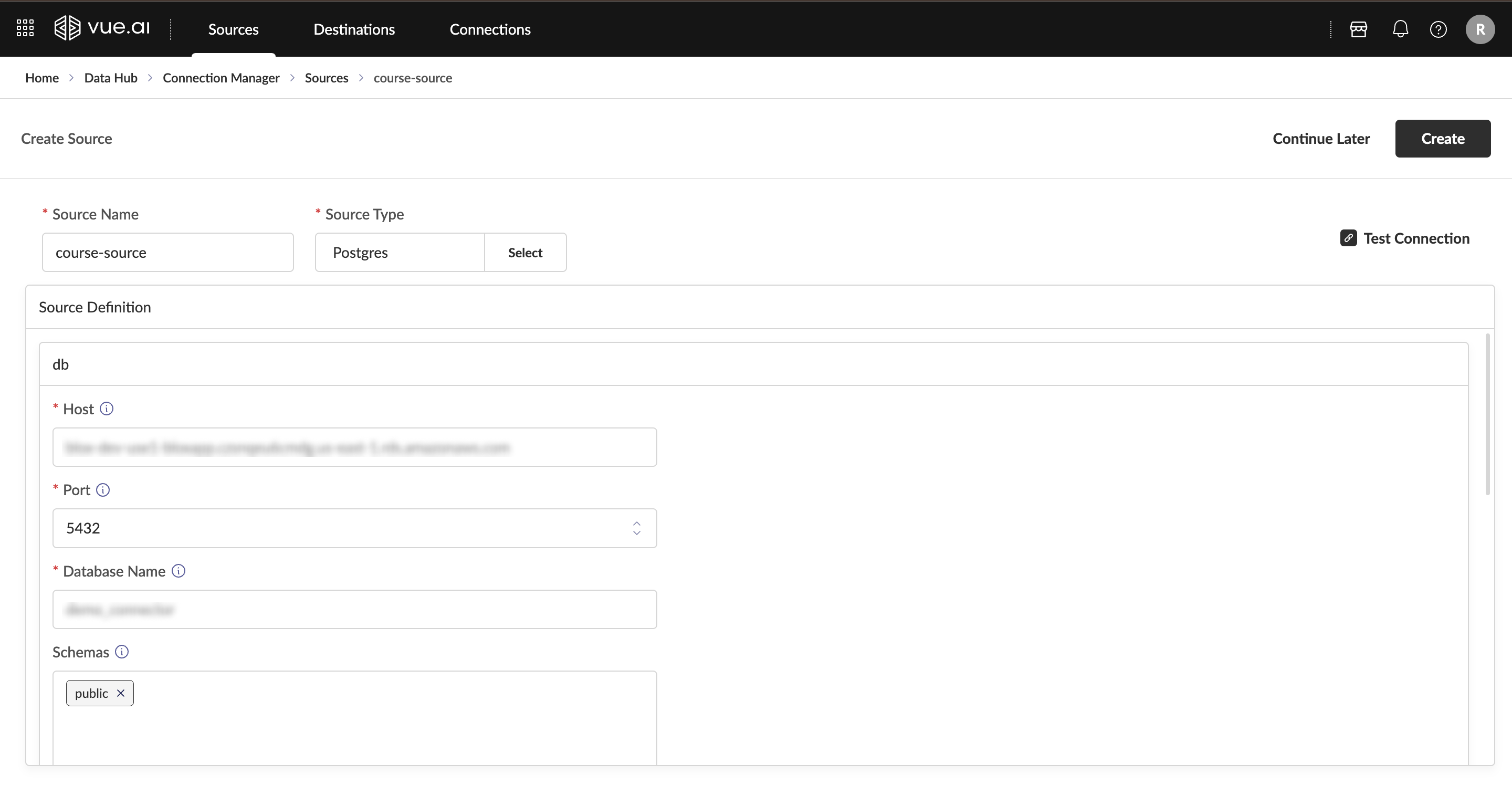

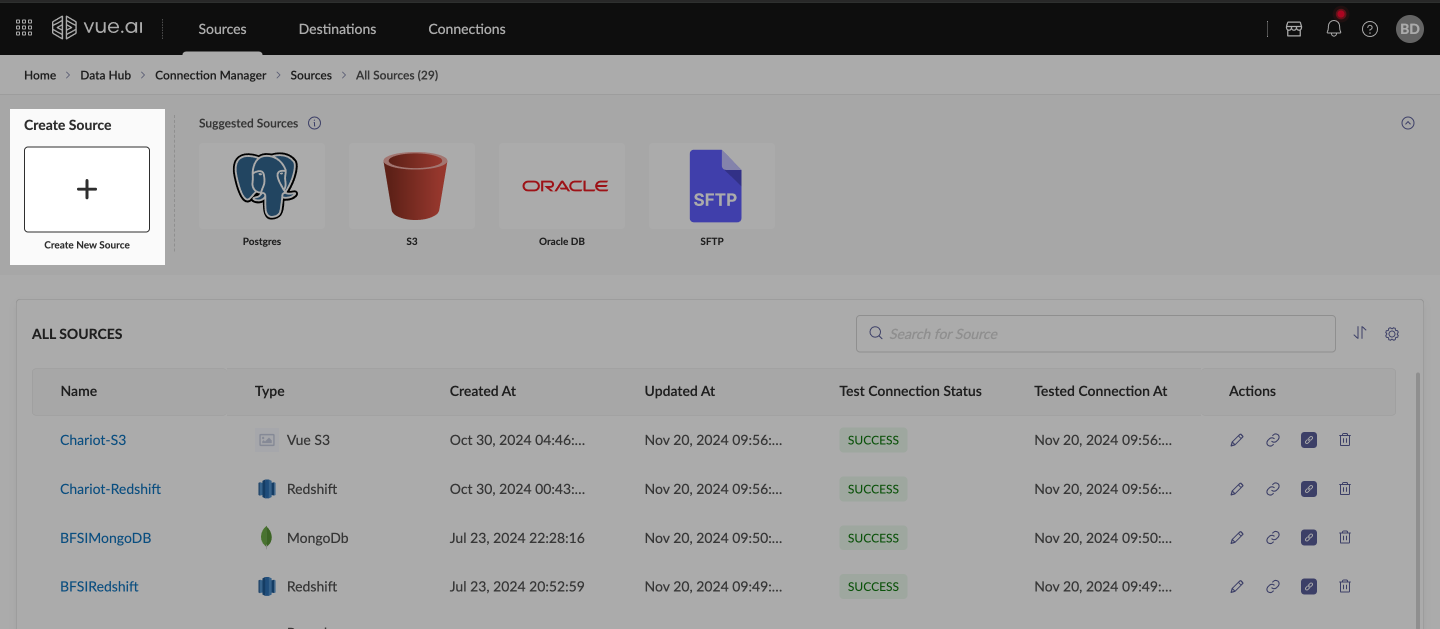

Configuring a Source

Navigation Navigate to Data Hub → Connection Manager → Sources

Create Source On the Source Listing page, click Create New

- Enter a unique name for the source

- Select a source type (e.g., PostgreSQL, Google Analytics)

- Provide necessary credentials in the configuration form

Test Connection Verify the source connection by selecting Test Connection

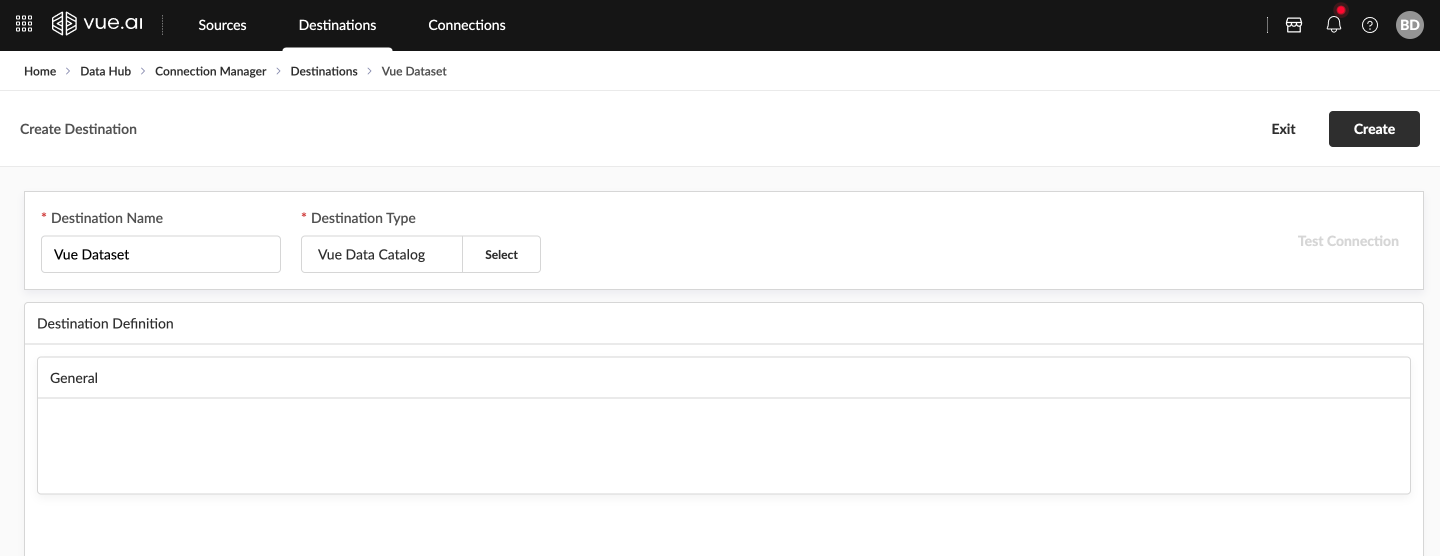

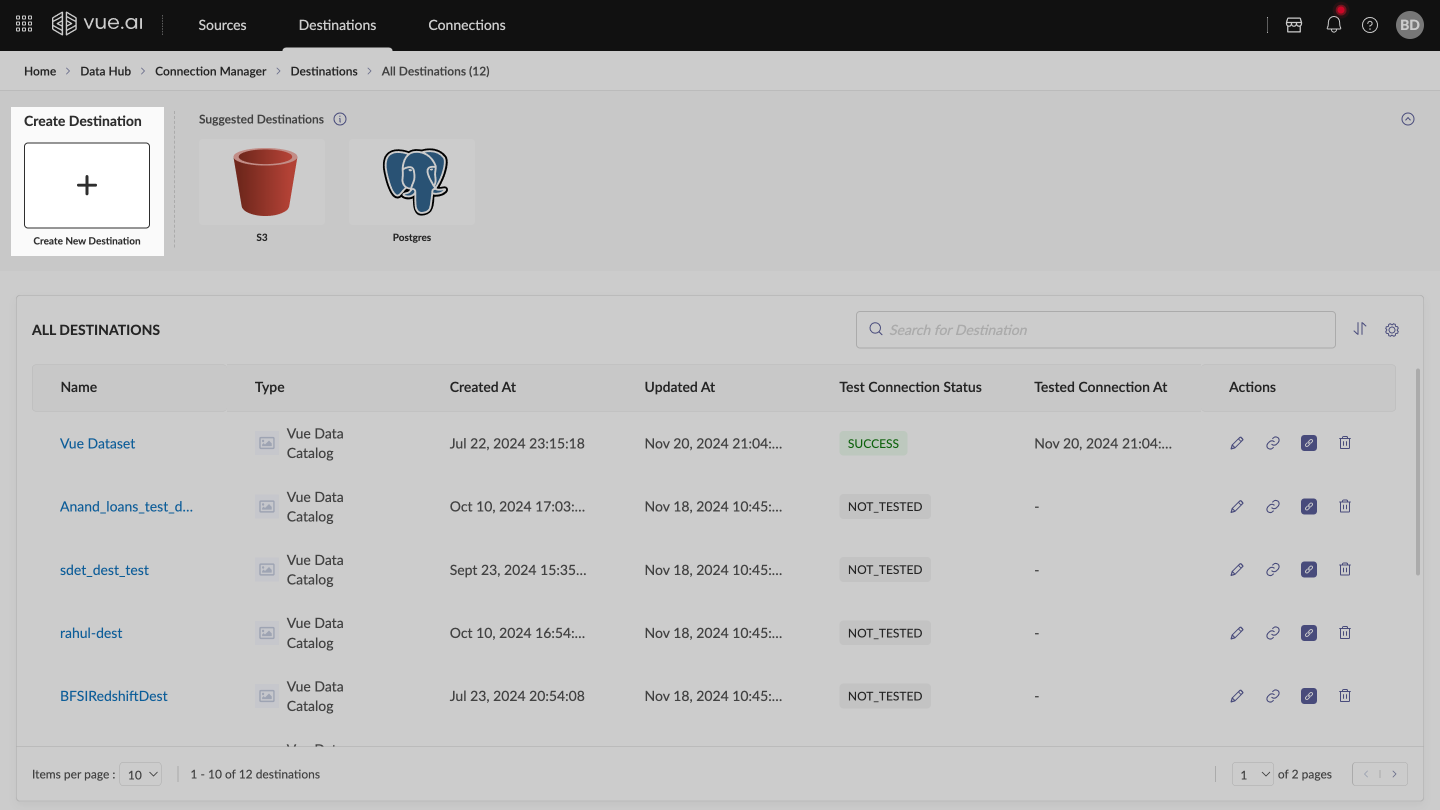

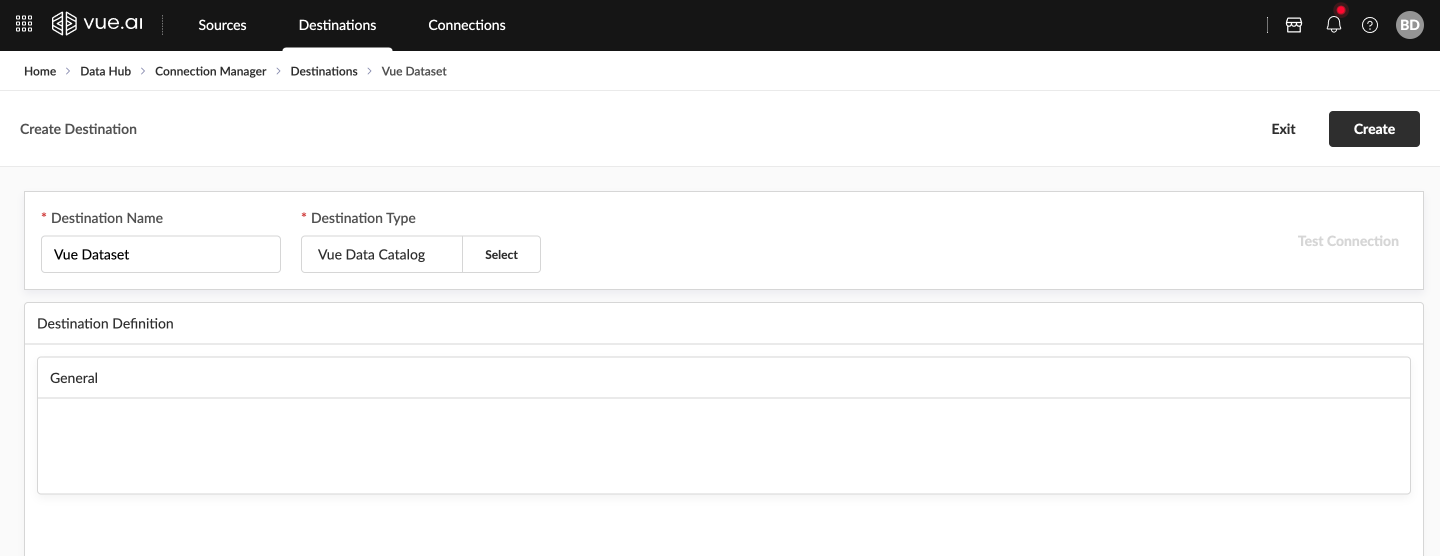

Configuring a Destination

Navigation Go to Data Hub → Connection Manager → Destinations

Create Destination Click Create New on the Destination Listing page

- Enter a unique name

- Select the destination type (e.g., Vue Dataset)

Test Connection Verify the destination configuration

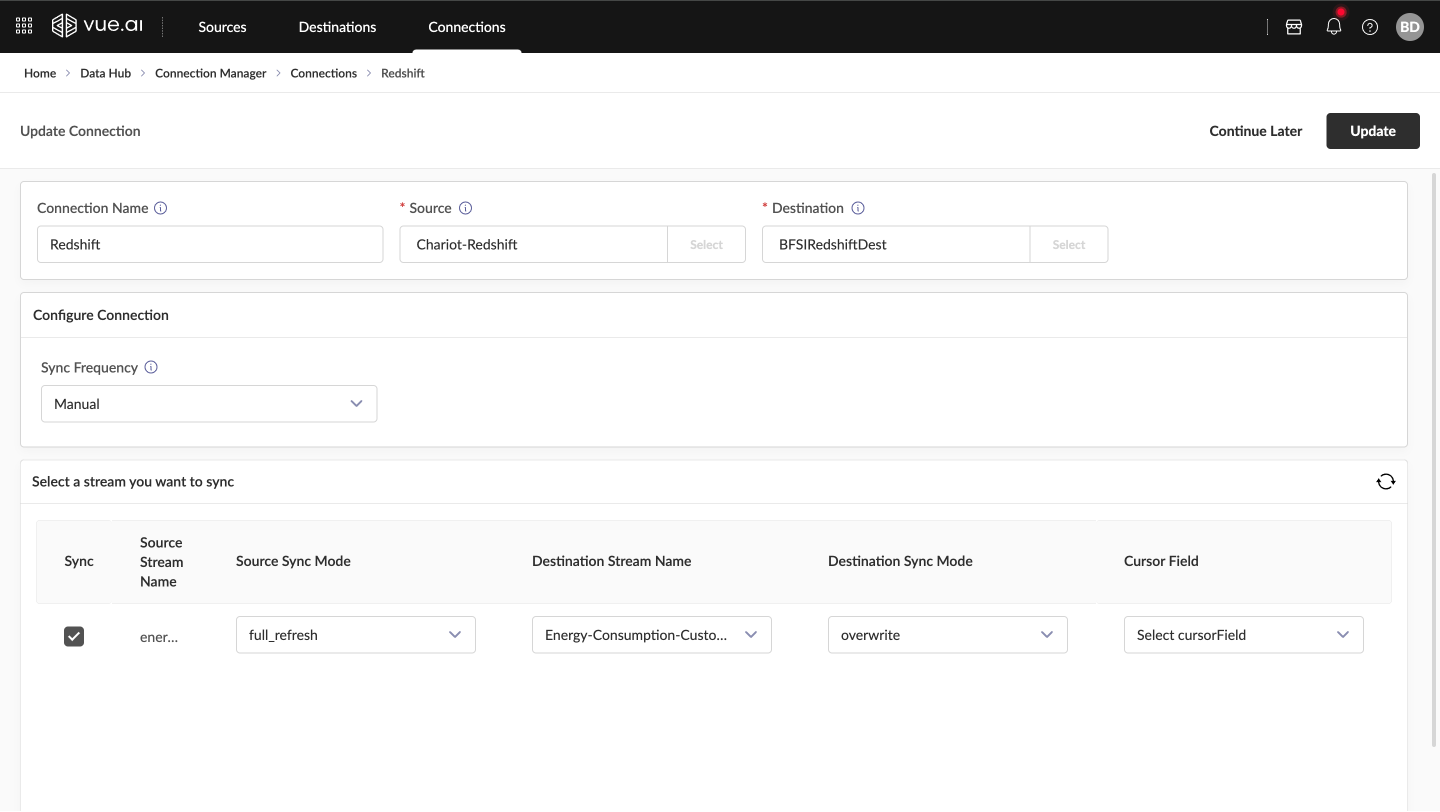

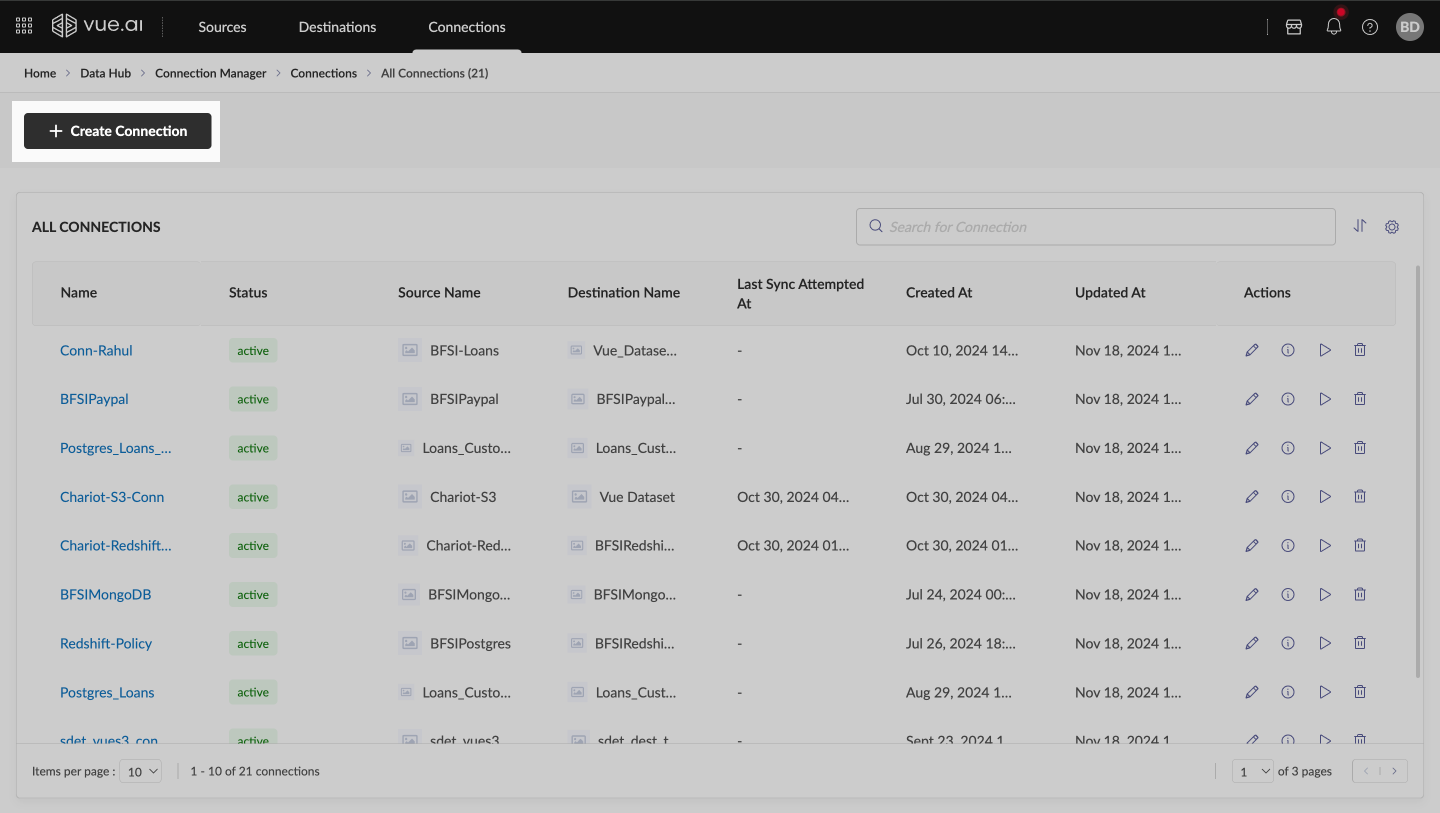

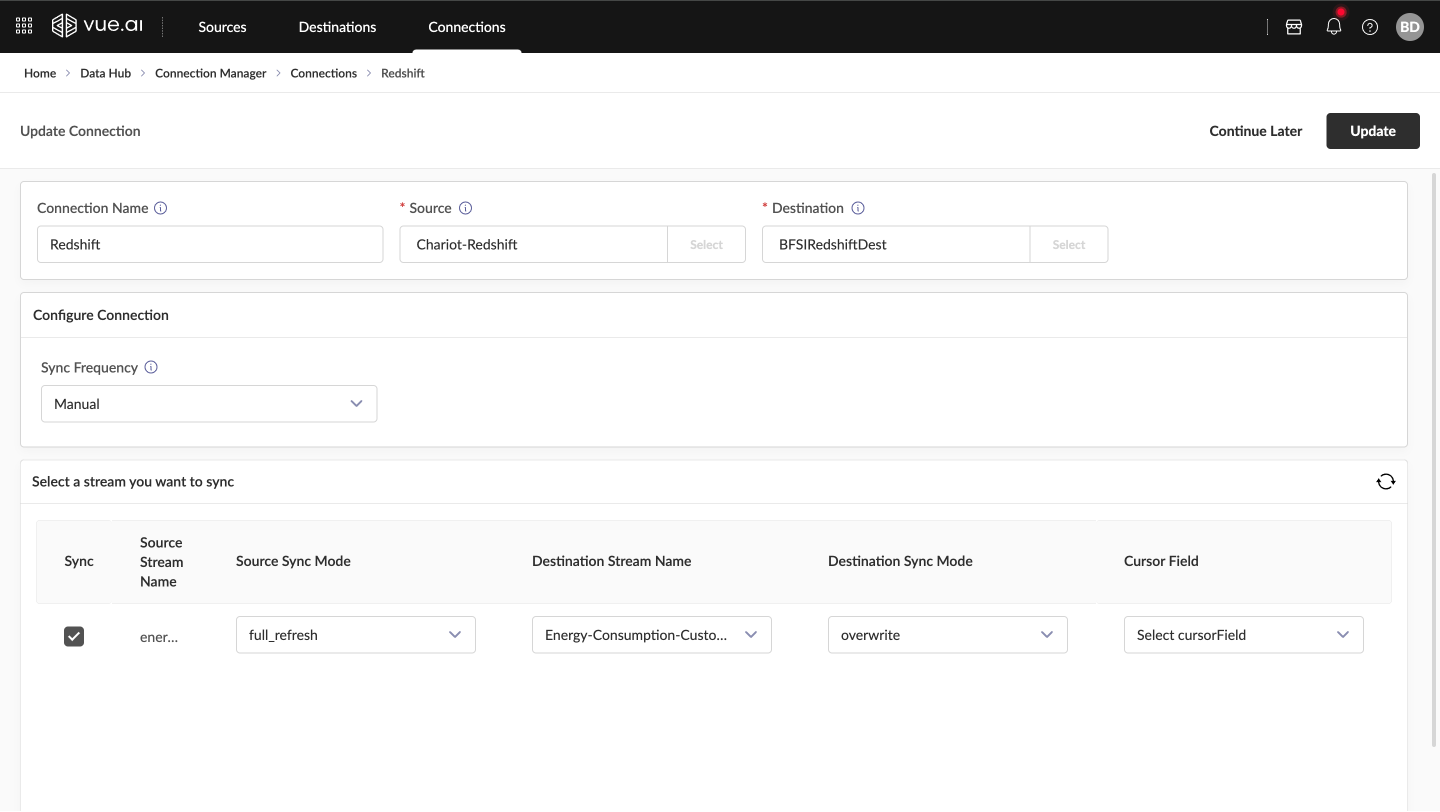

Establishing a Connection

Navigation Go to Data Hub → Connection Manager → Connections

Create Connection Select Create New on the Connection Listing page

- Enter a connection name

- Choose the source and destination

Configure Settings

- Data Sync Frequency: Choose Manual or Scheduled (configure CRON expressions if needed)

- Select streams or schemas for data transfer

- Specify sync options: Full Refresh or Incremental

Run Connection Select Create Connection and execute it
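The settings above can be pictured as a small configuration object. The sketch below is illustrative only: the `ConnectionConfig` class and its field names are hypothetical and are not part of the Vue.ai API. It also assumes a whitespace-separated CRON expression with five to seven fields, since the exact CRON dialect used by the scheduler is not stated here.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ConnectionConfig:
    """Hypothetical container mirroring the fields of the connection form."""
    name: str
    source: str
    destination: str
    streams: List[str] = field(default_factory=list)
    sync_mode: str = "full_refresh"   # or "incremental"
    schedule: str = "manual"          # or "scheduled"
    cron: Optional[str] = None        # only used when schedule == "scheduled"

    def validate(self) -> None:
        if self.sync_mode not in ("full_refresh", "incremental"):
            raise ValueError(f"unknown sync mode: {self.sync_mode}")
        if self.schedule not in ("manual", "scheduled"):
            raise ValueError(f"unknown schedule: {self.schedule}")
        if self.schedule == "scheduled":
            # Assumption: classic cron has 5 fields, Quartz-style has 6 or 7.
            fields = (self.cron or "").split()
            if not 5 <= len(fields) <= 7:
                raise ValueError("scheduled connections need a CRON expression")


# Example: a nightly incremental sync of two streams.
config = ConnectionConfig(
    name="orders-nightly",
    source="postgres-prod",
    destination="vue-dataset",
    streams=["orders", "customers"],
    sync_mode="incremental",
    schedule="scheduled",
    cron="0 2 * * *",
)
config.validate()
```

Validating the configuration before creating the connection surfaces a missing CRON expression early, rather than at the first scheduled run.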

Sources Configuration

HubSpot Data Source Configuration

This guide provides step-by-step instructions on configuring HubSpot as a data source, covering prerequisites, authentication methods, and configuration steps for seamless integration.

Prerequisites Before beginning the integration, ensure the following are available:

- HubSpot Developer Account

- Access to HubSpot API Keys or OAuth Credentials


Authentication Methods

HubSpot supports two authentication methods for data source configuration:

- OAuth

- Private App Authentication

OAuth Authentication

Credentials Needed:

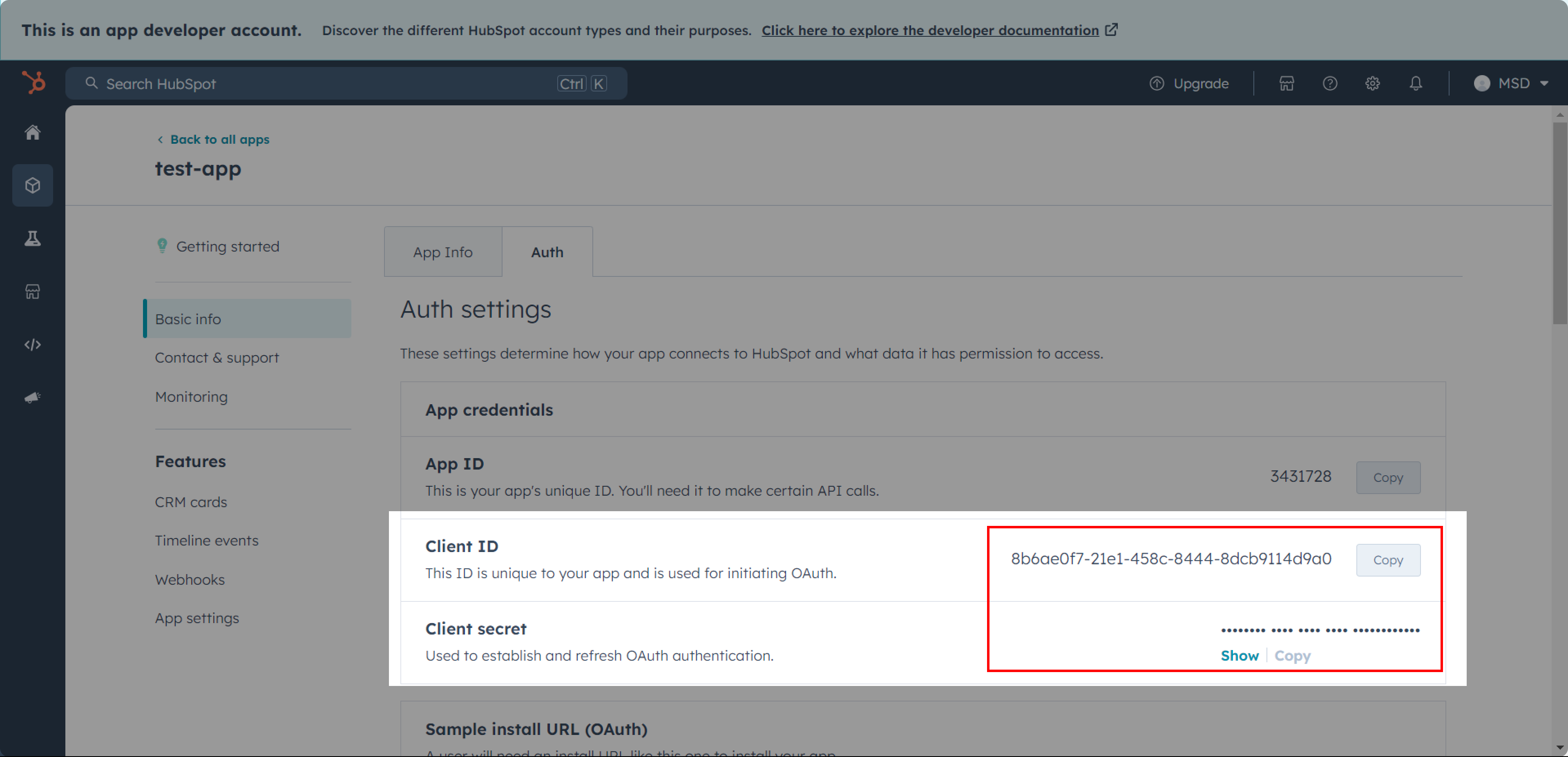

- Client ID

- Client Secret

- Refresh Token

To obtain OAuth Credentials:

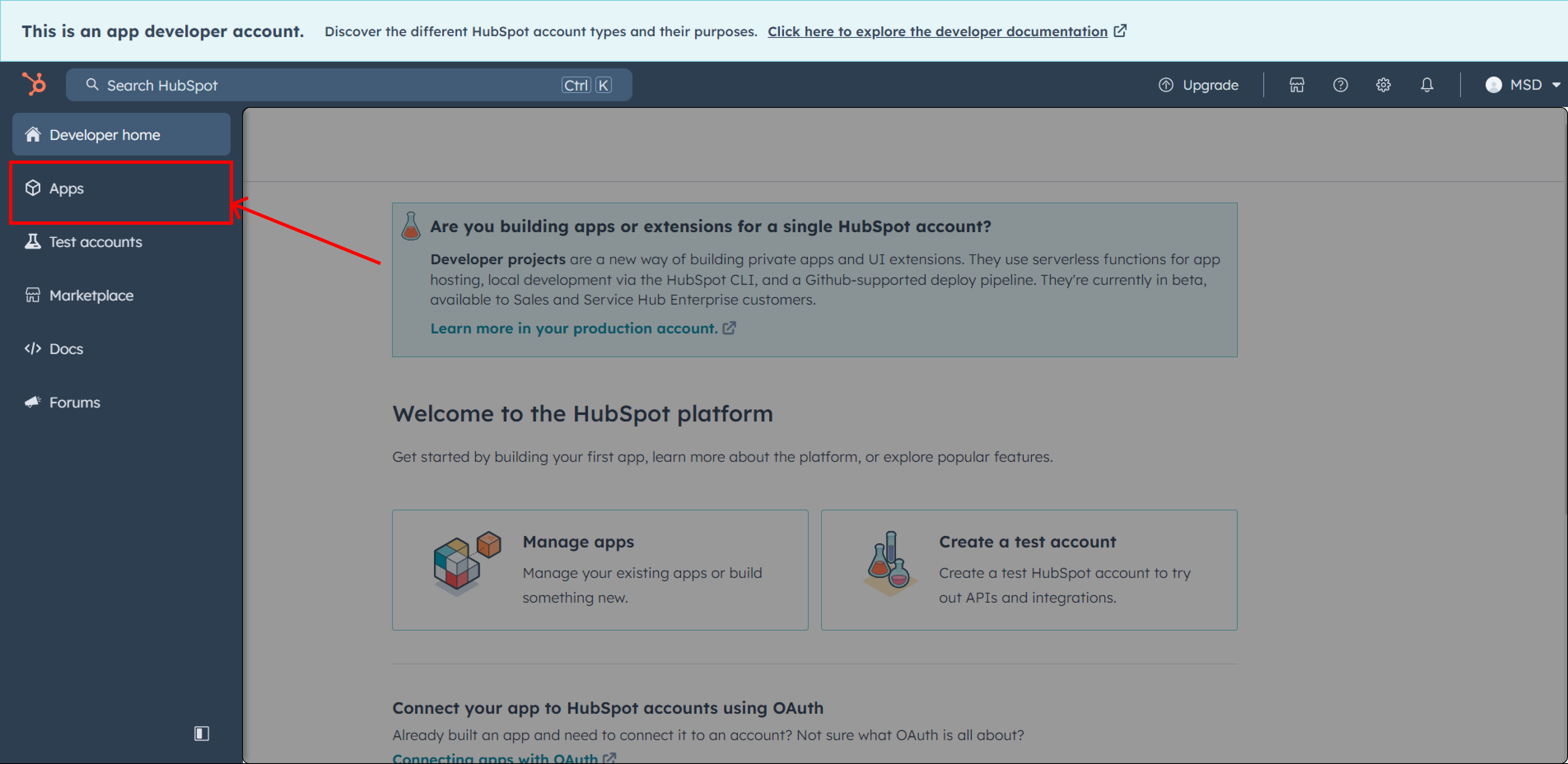

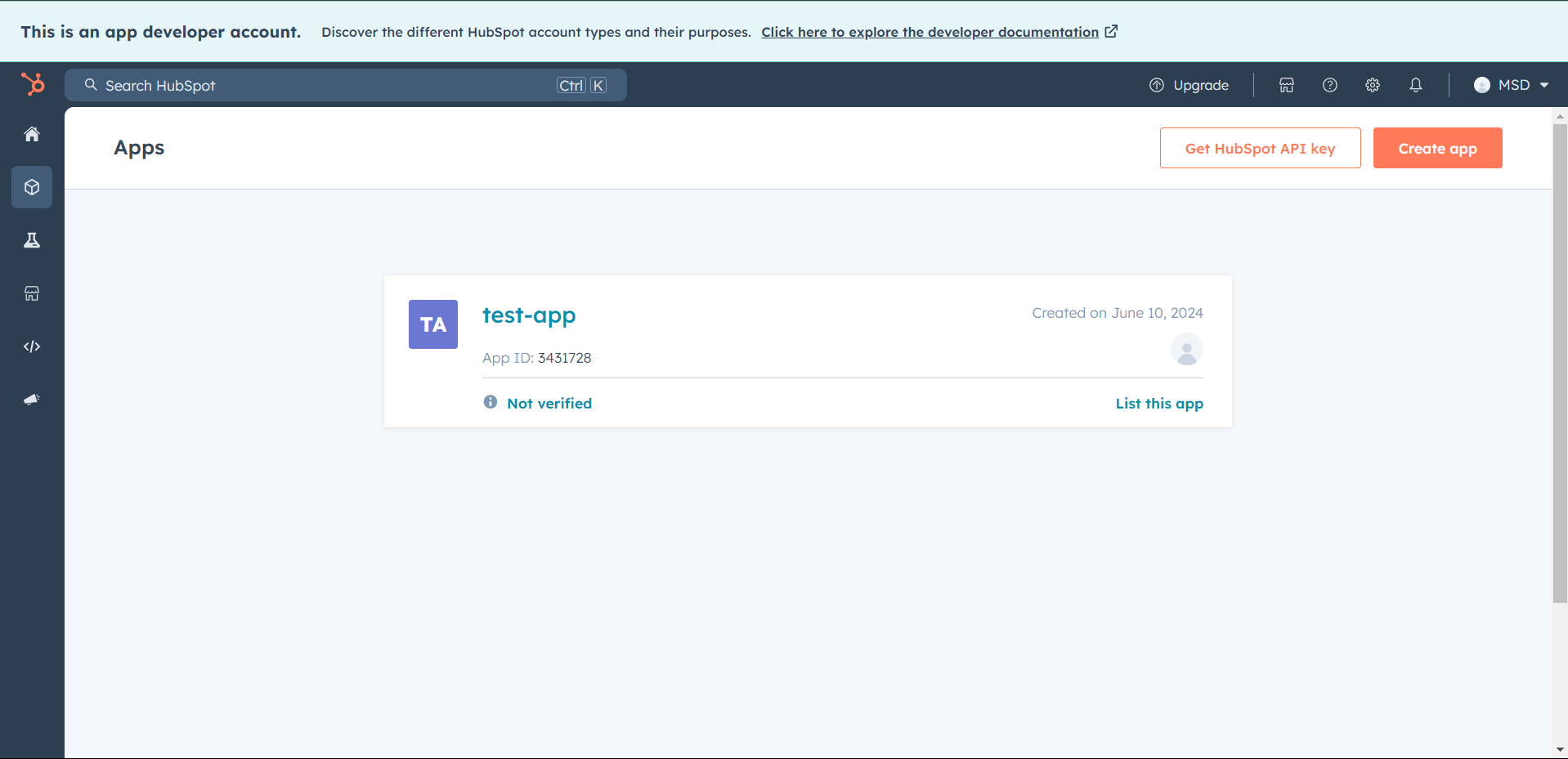

Access the HubSpot Developer Account Navigate to Apps within the account

Identify an App with Required Scopes Create or identify an app with the required scopes:

- tickets

- e-commerce

- media_bridge.read

- crm.objects.goals.read

- timeline

- crm.objects.marketing_events.write

- crm.objects.custom.read

- crm.objects.feedback_submissions.read

- crm.objects.custom.write

- crm.objects.marketing_events.read

- crm.pipelines.orders.read

- crm.schemas.custom.read

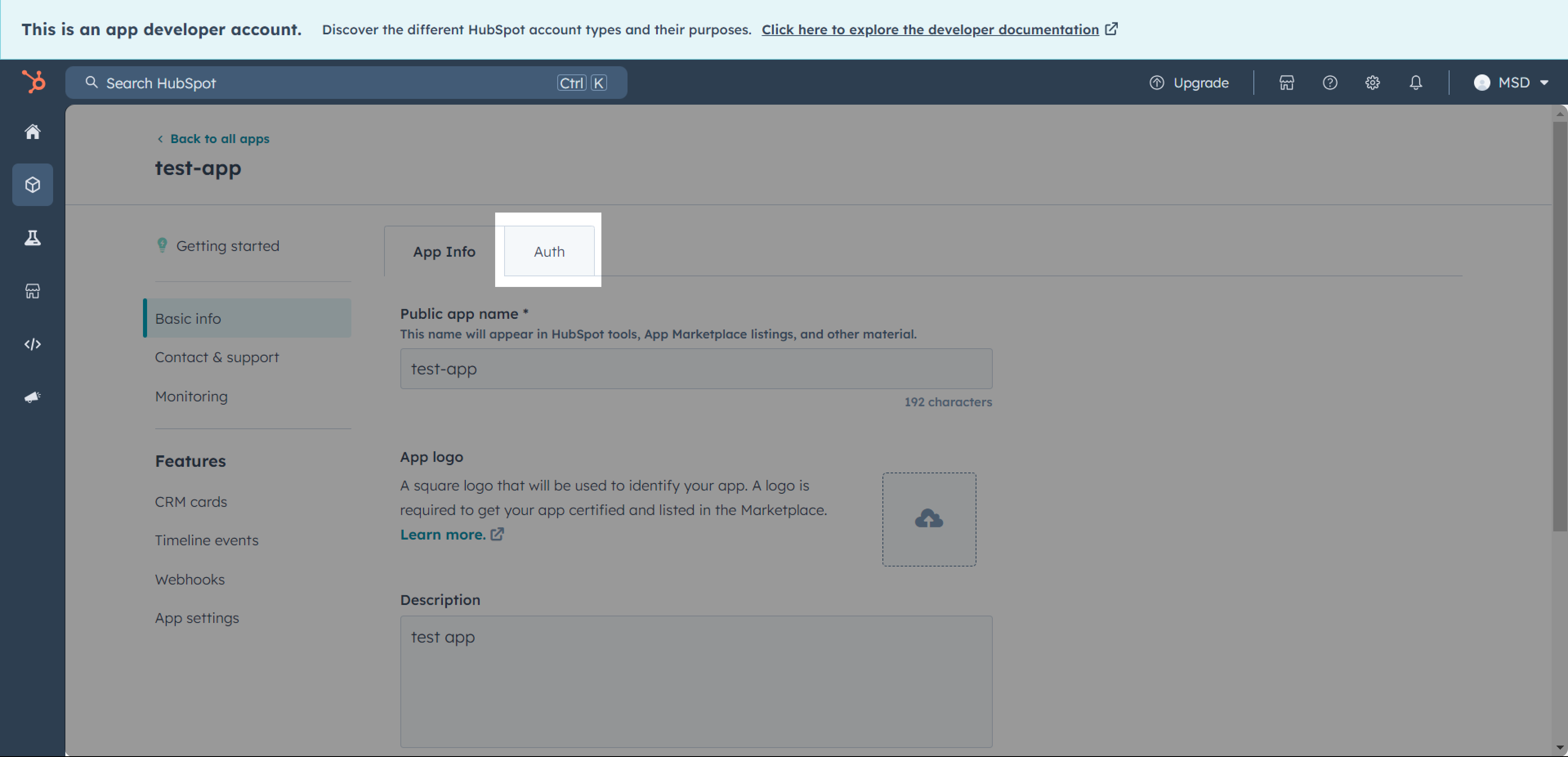

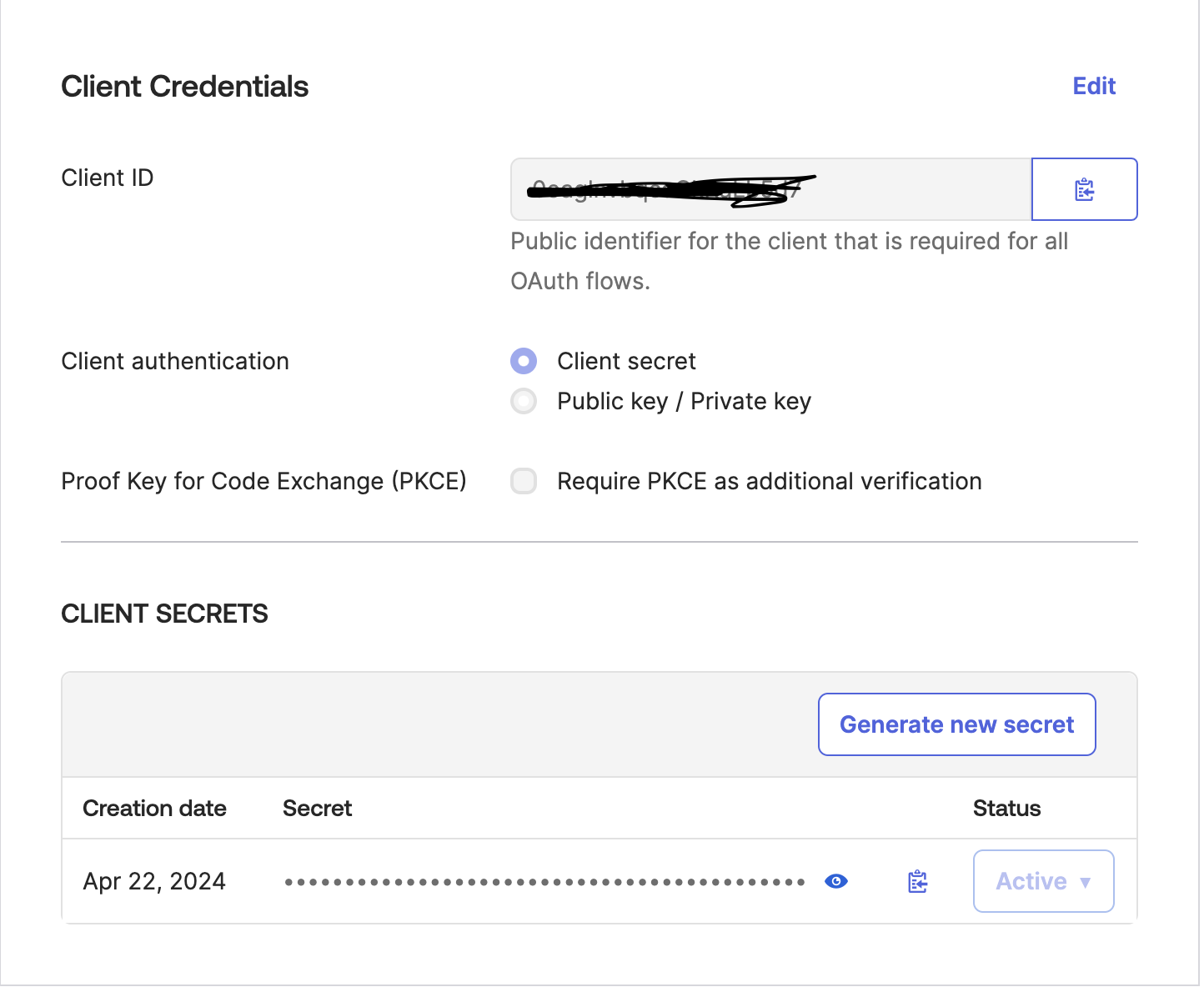

In the app screen, navigate to the Auth Section to locate the Client ID and Client Secret

Open the Sample Install URL (OAuth) and authenticate your HubSpot account. Copy the authorization code from the redirect URL

Use the code to obtain a Refresh Token by executing the following cURL command

    curl --location 'https://api.hubapi.com/oauth/v1/token' \
      --header 'Content-Type: application/x-www-form-urlencoded' \
      --data-urlencode 'grant_type=authorization_code' \
      --data-urlencode 'client_id=<placeholder_client_id>' \
      --data-urlencode 'client_secret=<placeholder_client_secret>' \
      --data-urlencode 'redirect_uri=<placeholder_redirect_uri>' \
      --data-urlencode 'code=<placeholder_code>'
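For scripted setups, the same token request can be prepared in Python. This is a minimal sketch using only the standard library: it builds the request shown in the cURL command but does not send it, and the helper name `build_token_request` is hypothetical.

```python
import urllib.parse
import urllib.request

TOKEN_URL = "https://api.hubapi.com/oauth/v1/token"


def build_token_request(client_id, client_secret, redirect_uri, code):
    """Build (but do not send) the form-encoded POST from the cURL command."""
    form = {
        "grant_type": "authorization_code",
        "client_id": client_id,
        "client_secret": client_secret,
        "redirect_uri": redirect_uri,
        "code": code,
    }
    body = urllib.parse.urlencode(form).encode("ascii")
    return urllib.request.Request(
        TOKEN_URL,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


request = build_token_request(
    "<placeholder_client_id>",
    "<placeholder_client_secret>",
    "<placeholder_redirect_uri>",
    "<placeholder_code>",
)
# To send it, pass `request` to urllib.request.urlopen() and parse the JSON
# body; the Refresh Token is expected in that response.
```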

Private App Authentication

Credentials Needed:

- Private App Access Token

To set up Private App Authentication:

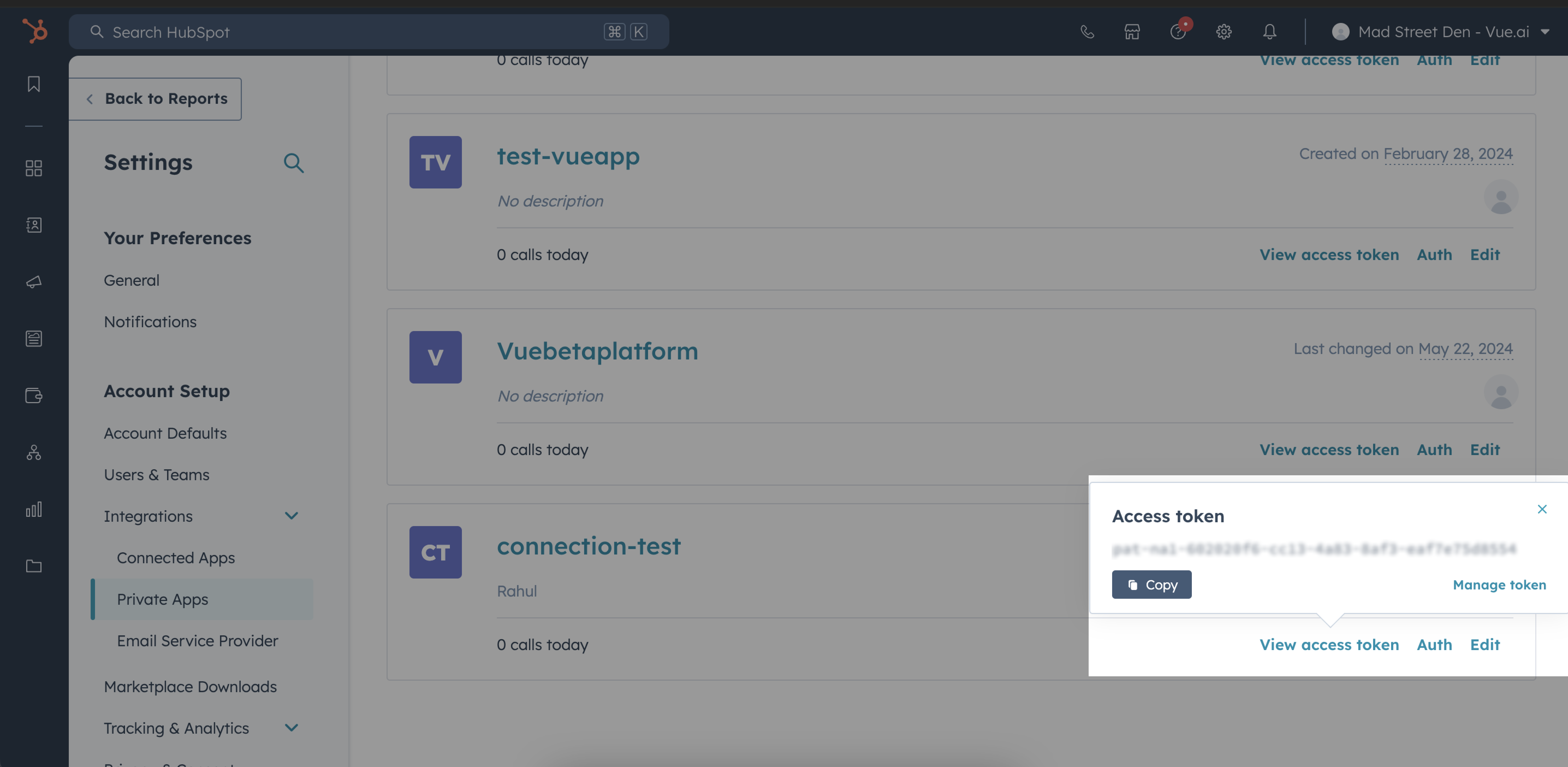

Navigate to Private Apps Settings Go to Settings > Integrations > Private Apps in the HubSpot account

Locate and select the desired Private App, then click View Access Token to copy the token
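A quick way to confirm that a copied token works is to attach it as a Bearer token to a simple API request. The sketch below only builds such a request. The contacts endpoint is chosen for illustration and is an assumption, as is the helper name `build_private_app_request`; the token also needs a scope that covers whichever endpoint is used.

```python
import urllib.request

# Assumed endpoint for a smoke test; any endpoint covered by the app's
# scopes would serve the same purpose.
CONTACTS_URL = "https://api.hubapi.com/crm/v3/objects/contacts?limit=1"


def build_private_app_request(access_token, url=CONTACTS_URL):
    """Build (but do not send) a GET request authenticated with the token."""
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {access_token}"},
        method="GET",
    )


request = build_private_app_request("<private_app_access_token>")
# urllib.request.urlopen(request) would raise an HTTPError with status 401
# if the token were invalid or revoked.
```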

For more details, visit the HubSpot API Documentation.

Google Sheets Data Source Configuration

A comprehensive guide to configuring Google Sheets as a data source, covering prerequisites, authentication methods, configuration steps, and supported functionalities.

Prerequisites Before beginning, ensure the following prerequisites are met:

- A Google Cloud Project with the Google Sheets API enabled

- A service account key or OAuth credentials for authentication

- Access to the Google Sheet intended for integration


Overview

Google Sheets, due to its flexibility and ease of use, is a popular choice for data ingestion. The platform supports two authentication methods—Service Account Key and OAuth Authentication—allowing secure connection of spreadsheets to the data ingestion tool.

Configuration Steps

Prerequisites

- Enable the Google Sheets API for your project

- Obtain a Service Account Key or OAuth credentials

- Ensure the spreadsheet permissions allow access to the service account or OAuth client

Choose Authentication Method

Service Account Key Authentication:

- Create a service account and grant appropriate roles (Viewer role recommended) in Google Cloud Console

If the spreadsheet is viewable by anyone with its link, no further action is needed. If not, give your Service Account access to your spreadsheet.

- Generate a JSON key by clicking on Add Key under Keys tab

- Grant the service account viewer access to the Google Sheet

OAuth Authentication:

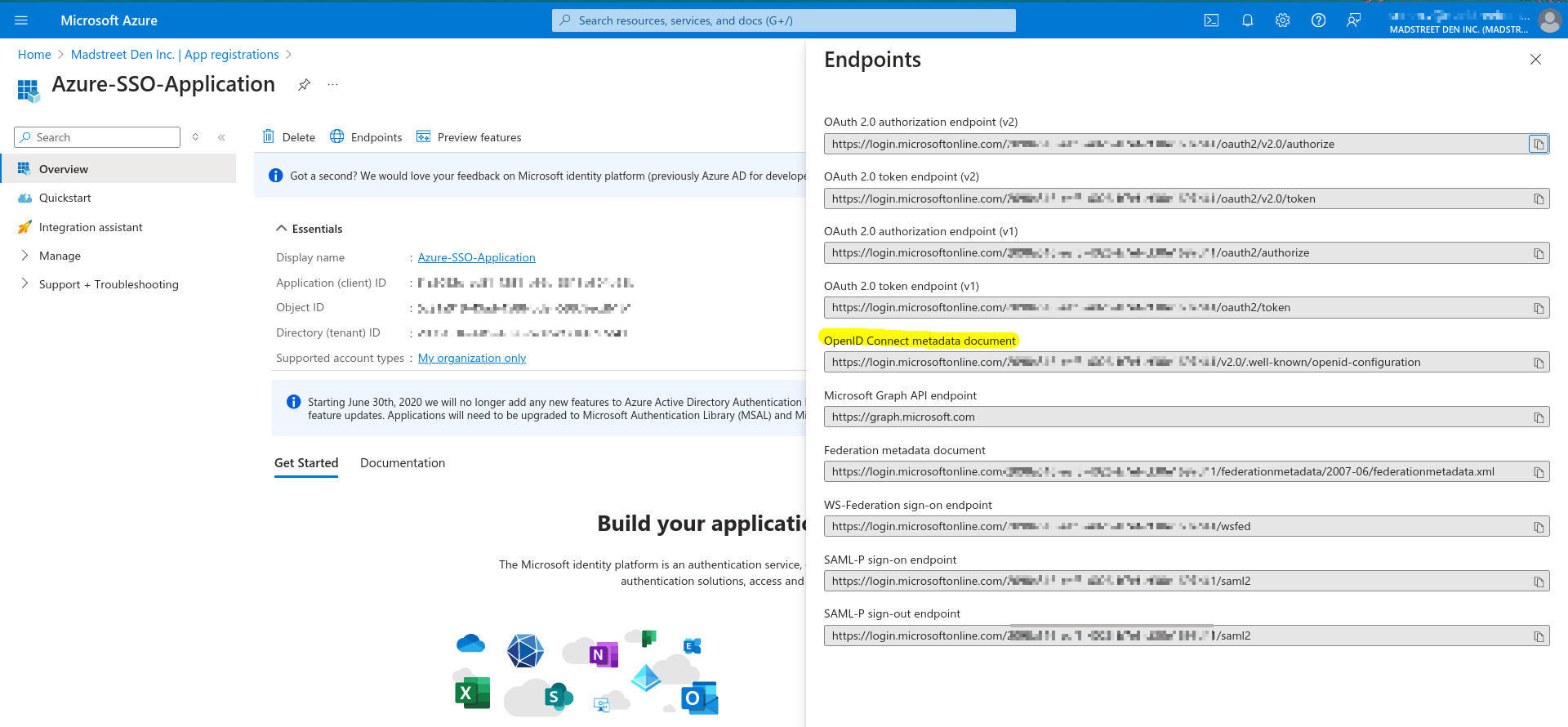

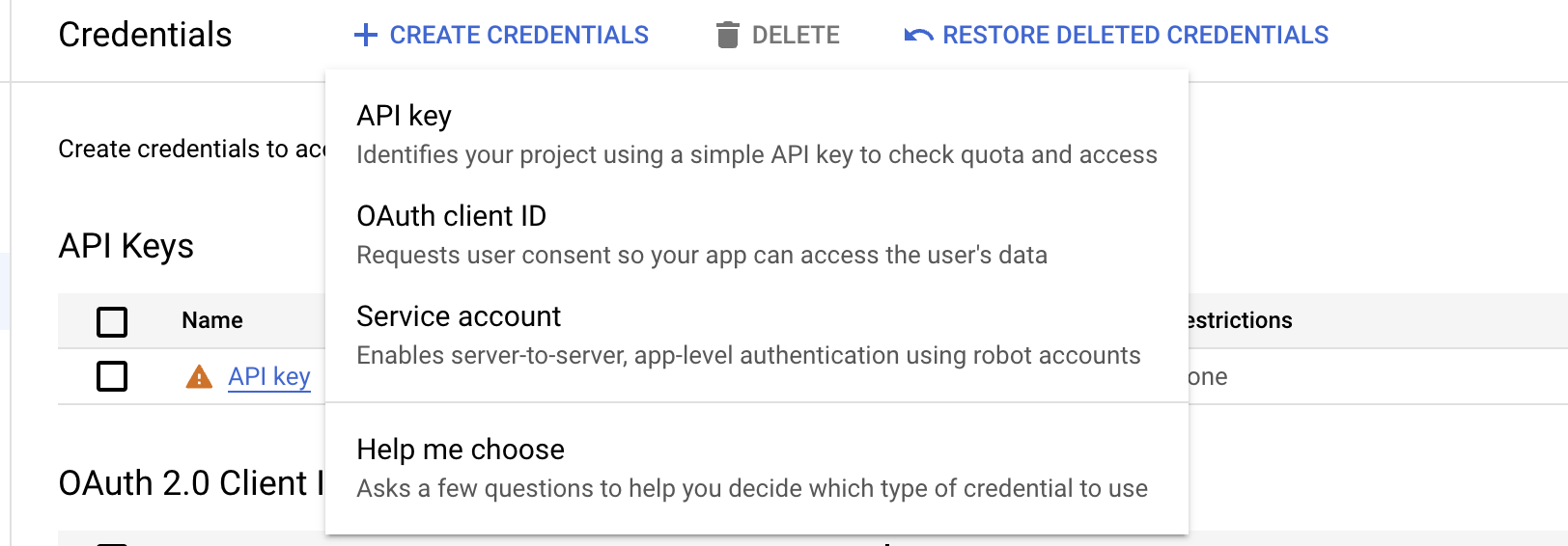

- Create OAuth 2.0 Credentials by going to APIs & Services → Credentials

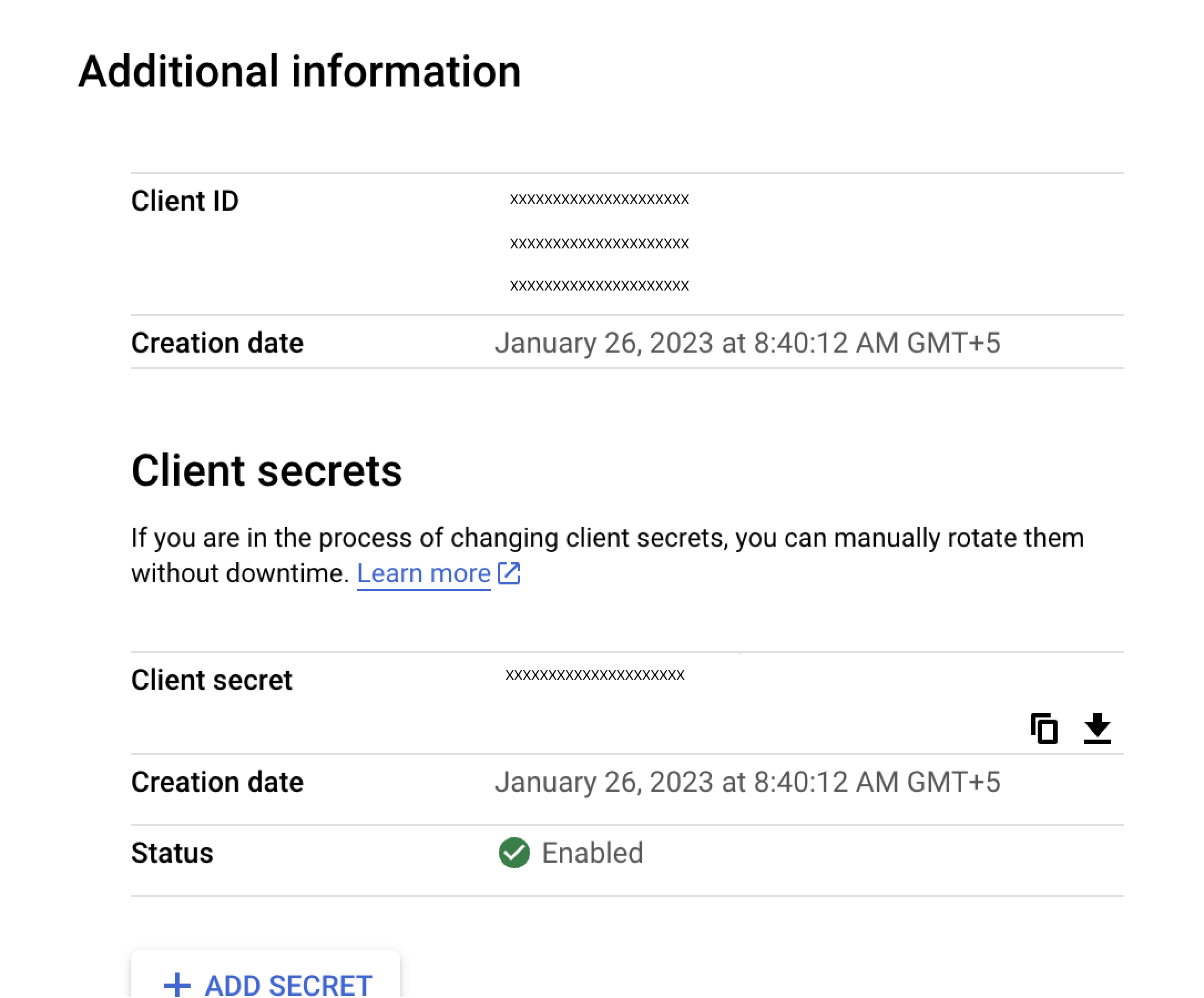

- Click Create Credentials → OAuth Client ID

- Configure the OAuth consent screen:

- Provide app name, support email, and authorized domains

- Select Application Type as Web application

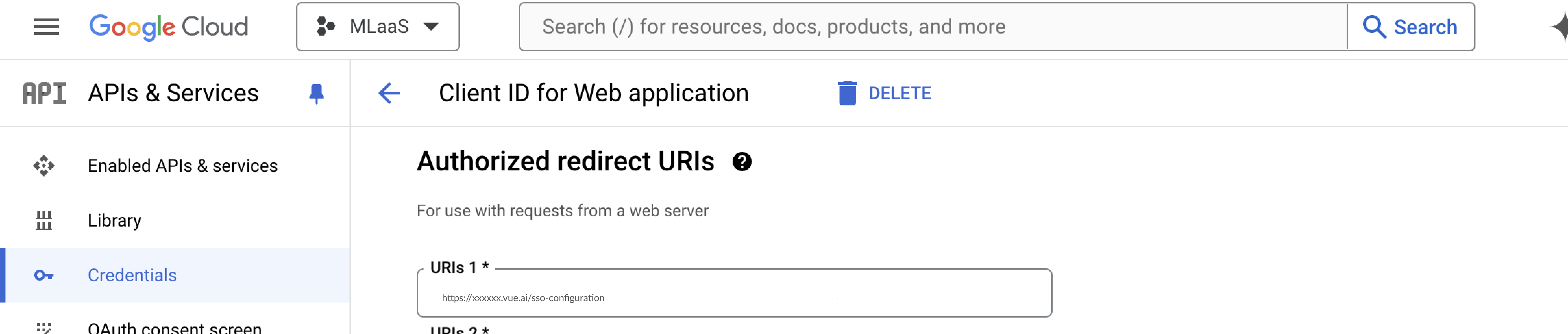

- Add your application's Redirect URI

Generate Authorization URL

Use the following format:

    https://accounts.google.com/o/oauth2/auth?
      client_id={CLIENT_ID}&
      response_type=code&
      redirect_uri={REDIRECT_URI}&
      scope=https://www.googleapis.com/auth/spreadsheets https://www.googleapis.com/auth/drive&
      access_type=offline&
      prompt=consent

Exchange Authorization Code

Make a POST request to:

https://oauth2.googleapis.com/token
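The authorization URL and the token request described above can be assembled as follows. This is a sketch, not a definitive implementation: the function names are hypothetical, and the form fields for the token request are the standard OAuth 2.0 authorization-code fields, which this guide does not list explicitly.

```python
from urllib.parse import urlencode

AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/auth"
TOKEN_ENDPOINT = "https://oauth2.googleapis.com/token"
SCOPES = [
    "https://www.googleapis.com/auth/spreadsheets",
    "https://www.googleapis.com/auth/drive",
]


def build_authorization_url(client_id, redirect_uri):
    """Return the consent URL with properly percent-encoded parameters."""
    params = {
        "client_id": client_id,
        "response_type": "code",
        "redirect_uri": redirect_uri,
        "scope": " ".join(SCOPES),   # scopes are space-separated
        "access_type": "offline",    # ask for a refresh token
        "prompt": "consent",
    }
    return f"{AUTH_ENDPOINT}?{urlencode(params)}"


def build_token_form(client_id, client_secret, redirect_uri, code):
    """Form fields to POST to TOKEN_ENDPOINT (assumed standard OAuth 2.0)."""
    return {
        "code": code,
        "client_id": client_id,
        "client_secret": client_secret,
        "redirect_uri": redirect_uri,
        "grant_type": "authorization_code",
    }


url = build_authorization_url("my-client-id", "https://example.com/callback")
```

Encoding the parameters with `urlencode` avoids the common mistake of pasting a redirect URI or scope list containing unescaped spaces and slashes into the query string.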

The response will include:

    {
      "access_token": "ya29.a0AfH6SMCexample",
      "expires_in": 3599,
      "refresh_token": "1//0exampleRefreshToken",
      "scope": "https://www.googleapis.com/auth/spreadsheets https://www.googleapis.com/auth/drive",
      "token_type": "Bearer"
    }

Configure Data Source

- Select Google Sheets as the source type

- Provide authentication details:

- For Service Account Key, paste the JSON key

- For OAuth, provide the Client ID, Client Secret, and Refresh Token

- Enter additional configuration details, such as the spreadsheet link and row batch size

Based on Google Sheets API limits documentation, it is possible to send up to 300 requests per minute, but each individual request has to be processed under 180 seconds, otherwise the request returns a timeout error. Consider network speed and number of columns of the Google Sheet when deciding a row_batch_size value. The default value is 200, but if the sheet exceeds 100,000 records, consider increasing the batch size.
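The effect of `row_batch_size` on request volume can be estimated with simple arithmetic. The sketch below assumes one API request per batch of rows, which is a simplification, and the function name `estimate_sync` is hypothetical.

```python
import math

REQUESTS_PER_MINUTE = 300  # rate limit quoted above


def estimate_sync(total_rows, row_batch_size=200):
    """Return (request count, minimum minutes needed at the rate limit)."""
    requests = math.ceil(total_rows / row_batch_size)
    minutes = requests / REQUESTS_PER_MINUTE
    return requests, minutes


default_requests, default_minutes = estimate_sync(100_000)       # 500 requests
larger_requests, larger_minutes = estimate_sync(100_000, 1_000)  # 100 requests
```

A larger batch size reduces the number of requests, but each request then carries more rows and takes longer, so it must still complete within the 180-second window.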

Test Connection Verify integration by testing the connection. If the connection fails, recheck your credentials, API settings, and project permissions.

Additional Information

- Supported sync modes: Full Refresh (Overwrite and Append)

- Supported streams: Each sheet is synced as a separate stream, and each column is treated as a string field

API limits: Google Sheets API allows 300 requests per minute with a 180-second processing window per request. Adjust batch sizes accordingly.

PostgreSQL Data Source Configuration

Step-by-step instructions for configuring PostgreSQL as a data source with secure connections using SSL modes and SSH tunneling, and understanding advanced options like replication methods.

Prerequisites Before beginning, ensure the following details are available:

- Database Details: Host, Port, Database Name, Schema

- Authentication: Username and password

Ensure access to database details and authentication credentials before starting.

Overview

PostgreSQL, a robust and versatile relational database system, supports various integration methods for data sources. This guide explains essential configurations, optional security features, and advanced options such as replication and SSH tunneling.

Configuration Steps

Select PostgreSQL as the Source Type

Fill in the required details

- Host: Provide the database host

- Port: Specify the database port (default: 5432)

- Database Name: Name of the database to connect

- Schema: Schema in the database to use

- Username: Database username

- Password: Database password

Additional Security Configuration (Optional)

- SSL Mode: Choose from the available modes (e.g., require, verify-ca)

- SSH Tunnel Method: Select the preferred SSH connection method if required

Advanced Options (Optional) Replication Method: PostgreSQL supports two replication methods: Change Data Capture (CDC) and Standard (with User-Defined Cursor)

Change Data Capture (CDC):

- Uses logical replication of the Postgres Write-Ahead Log (WAL) to incrementally capture deletes using a replication plugin

- Recommended for:

- Recording deletions

- Large databases (500 GB or more)

- Tables with a primary key but no reasonable cursor field for incremental syncing

Standard (with User-Defined Cursor):

- Allows incremental syncing using a user-defined cursor field (e.g., updated_at)
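Cursor-based incremental syncing can be illustrated in a few lines. This is a conceptual sketch with in-memory rows, not the connector's actual implementation; the function name `incremental_sync` is hypothetical.

```python
from datetime import datetime


def incremental_sync(rows, cursor_field, last_cursor=None):
    """Return the rows newer than last_cursor and the updated cursor value."""
    if last_cursor is None:
        new_rows = list(rows)  # first sync: everything is new
    else:
        new_rows = [r for r in rows if r[cursor_field] > last_cursor]
    # Keep the old cursor when nothing new arrived.
    new_cursor = max((r[cursor_field] for r in new_rows), default=last_cursor)
    return new_rows, new_cursor


rows = [
    {"id": 1, "updated_at": datetime(2025, 1, 1)},
    {"id": 2, "updated_at": datetime(2025, 1, 5)},
    {"id": 3, "updated_at": datetime(2025, 1, 9)},
]
first_batch, cursor = incremental_sync(rows, "updated_at")

rows.append({"id": 4, "updated_at": datetime(2025, 1, 12)})
second_batch, cursor = incremental_sync(rows, "updated_at", cursor)
```

Because the filter only sees rows that still exist, a deleted row never shows up in a later batch. That is why CDC is the recommended method when deletions must be recorded.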

SSL Modes

PostgreSQL supports multiple SSL connection modes for enhanced security:

- disable: Disables encrypted communication between the source and Airbyte

- allow: Enables encrypted communication only when required by the source

- prefer: Allows unencrypted communication only when the source doesn't support encryption

- require: Always requires encryption. Note: The connection will fail if the source doesn't support encryption

- verify-ca: Always requires encryption and verifies that the source has a valid SSL certificate

- verify-full: Always requires encryption and verifies the identity of the source
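Outside the platform, the same modes correspond to the `sslmode` parameter of a PostgreSQL connection URI. The sketch below builds such a URI so the chosen mode can be tested with any PostgreSQL client before it is entered in the form; the helper name `postgres_uri` and the example host are hypothetical.

```python
from urllib.parse import quote, urlencode

SSL_MODES = ("disable", "allow", "prefer", "require", "verify-ca", "verify-full")


def postgres_uri(host, port, database, user, password, sslmode="require"):
    """Build a PostgreSQL connection URI with an explicit sslmode."""
    if sslmode not in SSL_MODES:
        raise ValueError(f"unsupported sslmode: {sslmode}")
    # Percent-encode credentials so characters such as '@' stay unambiguous.
    auth = f"{quote(user, safe='')}:{quote(password, safe='')}"
    query = urlencode({"sslmode": sslmode})
    return f"postgresql://{auth}@{host}:{port}/{quote(database, safe='')}?{query}"


uri = postgres_uri("db.example.com", 5432, "sales", "reader", "p@ss", "verify-ca")
```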

SSH Tunnel Configuration (Optional)

To enhance connectivity, PostgreSQL supports SSH tunneling for secure database connections:

- No Tunnel: Direct connection to the database

- SSH Key Authentication: Use an RSA Private Key as your secret for establishing the SSH tunnel

- Password Authentication: Use a password as your secret for establishing the SSH tunnel

Supported Sync Methods

The PostgreSQL source connector supports the following sync methods:

| Mode | Description |
|---|---|
| Full Refresh | Fetches all data and overwrites the destination |
| Incremental | Fetches only new or updated data since the last sync |

Commonly used SSL modes are 'require' and 'verify-ca.' SSH tunneling is optional and typically used for enhanced security when direct database access is restricted.

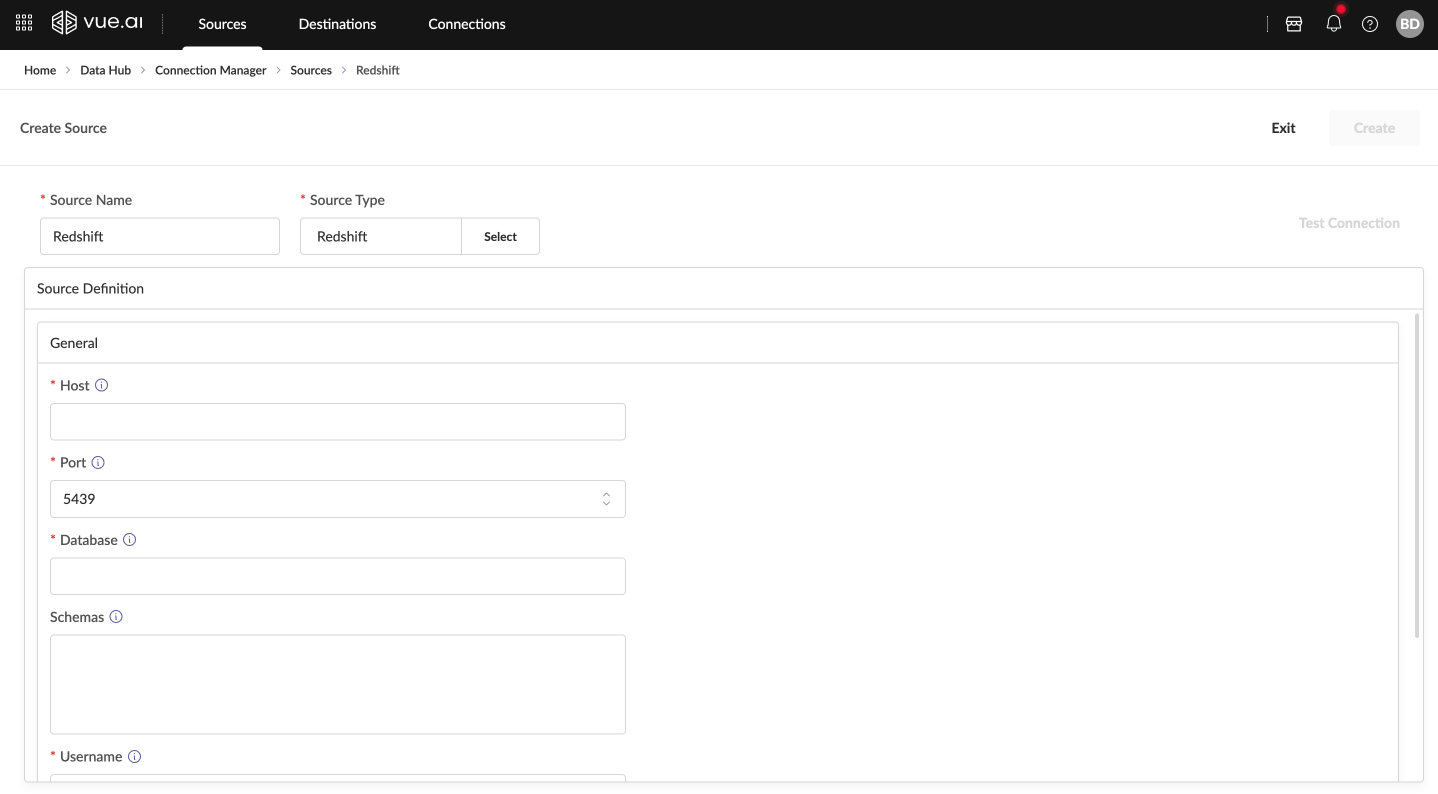

Amazon Redshift Source Configuration

Step-by-step instructions for configuring Amazon Redshift as a data source, covering prerequisites, authentication methods, and configuration steps for seamless integration.

Prerequisites Before beginning, ensure the availability of the following:

- Host: The hostname of the Amazon Redshift cluster

- Port: The port number for the Amazon Redshift cluster (default is 5439)

- Database Name: The name of the Redshift database to connect to

- Schemas: The schemas in the specified database to access

- Username: The Redshift username for authentication

- Password: The Redshift password for authentication

Ensure access to the necessary prerequisites and authentication details for successful configuration.

Configuration Steps

Select Amazon Redshift as the Source Type

Provide Configuration Details

- Enter the hostname of the Redshift cluster in the Host field

- Enter the port number (default: 5439) in the Port field

- Enter the database name in the Database Name field

- List the schemas to access in the database in the Schemas field

- Enter the Redshift username in the Username field

- Enter the Redshift password in the Password field

Test the Connection Ensure that the credentials and configuration are correct

Ensure that network settings, such as firewalls or security groups, allow connections to the Redshift cluster.

Advanced Configuration Options

SSL Configuration

- SSL Mode: Choose between disable, allow, prefer, require, verify-ca, or verify-full

- Certificate: Upload SSL certificate if required by your Redshift cluster

Connection Pooling

- Pool Size: Configure connection pool size for optimal performance

- Timeout: Set connection timeout values

- Retry Policy: Configure retry attempts for failed connections

Schema Selection

- Include/Exclude: Use patterns to include or exclude specific schemas

- Wildcards: Support for wildcard patterns in schema selection

- Case Sensitivity: Configure case-sensitive schema matching
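The interaction between include patterns, exclude patterns, and case sensitivity can be sketched as follows. Glob-style wildcards (`*`, `?`) are an assumption here; the connector's actual pattern syntax may differ, and `select_schemas` is a hypothetical helper.

```python
from fnmatch import fnmatchcase


def select_schemas(schemas, include=("*",), exclude=(), case_sensitive=True):
    """Keep schemas matching any include pattern and no exclude pattern."""

    def matches(name, patterns):
        if not case_sensitive:
            name = name.lower()
            patterns = [p.lower() for p in patterns]
        return any(fnmatchcase(name, p) for p in patterns)

    return [s for s in schemas if matches(s, include) and not matches(s, exclude)]


schemas = ["public", "sales_eu", "Sales_US", "tmp_load"]

strict = select_schemas(schemas, include=["sales_*"])
relaxed = select_schemas(schemas, include=["sales_*"], case_sensitive=False)
no_tmp = select_schemas(schemas, exclude=["tmp_*"])
```

With case-sensitive matching only `sales_eu` is selected; with case-insensitive matching `Sales_US` is selected as well.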

Supported Sync Modes

The Amazon Redshift source connector supports the following sync modes:

| Mode | Description |
|---|---|
| Full Refresh | Fetches all data and overwrites the destination |
| Incremental | Fetches only new or updated data since the last sync |

Amazon Redshift requires username and password authentication for connecting to the database. Ensure that the Redshift credentials have the necessary permissions to access the database and schemas.

Destinations Configuration

Redshift Destination Configuration

Step-by-step instructions for configuring Amazon Redshift as a destination with S3 staging for efficient data loading.

Prerequisites Before beginning, ensure the following are available:

- An active AWS account

- A Redshift cluster

- An S3 bucket for staging data

- Appropriate AWS credentials and permissions

Required Credentials include:

- Redshift Connection Details:

- Host

- Port

- Username

- Password

- Schema

- Database

- S3 Configuration:

- S3 Bucket Name

- S3 Bucket Region

- Access Key Id

- Secret Access Key

Redshift replicates data by first uploading to an S3 bucket and then issuing a COPY command, following Redshift's recommended best practices.

AWS Configuration

Set up Redshift Cluster

- Log into the AWS Management Console

- Navigate to the Redshift service

- Create and activate a Redshift cluster if needed

- Configure VPC settings if Airbyte exists in a separate VPC

Configure S3 Bucket

- Create a staging S3 bucket

- Ensure the bucket is in the same region as the Redshift cluster

- Set up appropriate bucket permissions

Permission Setup

Execute the following SQL statements for required permissions:

    GRANT CREATE ON DATABASE database_name TO read_user;
    GRANT USAGE, CREATE ON SCHEMA my_schema TO read_user;
    GRANT SELECT ON TABLE SVV_TABLE_INFO TO read_user;

Supported Sync Methods

The Redshift destination connector supports the following sync methods:

| Mode | Description |
|---|---|
| Full Refresh | Fetches all data and overwrites the destination |
| Incremental - Append Sync | Fetches only new or updated data and appends it to the destination |
| Incremental - Append + Deduped | Fetches new or updated data, appends it to the destination, and removes duplicates |
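The difference between the three modes is easiest to see on a small in-memory example. The sketch below is conceptual and not the connector's implementation: it assumes that deduplication keeps the most recent row per primary key, and the function name `apply_sync` and mode labels are hypothetical.

```python
def apply_sync(destination, incoming, mode, primary_key="id"):
    """Return the destination contents after one sync in the given mode."""
    if mode == "full_refresh":
        return list(incoming)                # replace everything
    if mode == "append":
        return destination + list(incoming)  # keep history, allow duplicates
    if mode == "append_dedup":
        latest = {}
        for row in destination + list(incoming):
            latest[row[primary_key]] = row   # later rows win
        return list(latest.values())
    raise ValueError(f"unknown mode: {mode}")


existing = [{"id": 1, "status": "new"}, {"id": 2, "status": "new"}]
changes = [{"id": 2, "status": "shipped"}, {"id": 3, "status": "new"}]

appended = apply_sync(existing, changes, "append")       # 4 rows, id 2 twice
deduped = apply_sync(existing, changes, "append_dedup")  # 3 rows, id 2 updated
```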

Data Specifications Naming Conventions for Standard Identifiers require them to start with a letter/underscore, contain alphanumeric characters, have a length of 1-127 bytes, and contain no spaces or quotation marks. Delimited Identifiers are enclosed in double quotes, can contain special characters, and are case-insensitive.

Data Size Limitations include a maximum of 16MB for raw JSON records, a 65,535 bytes limit for VARCHAR fields, and handling of oversized records by nullifying values exceeding VARCHAR limits while preserving Primary Keys and cursor fields when possible.
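The naming and size rules above can be checked before data is sent. This is a sketch that follows only the rules as stated in this section: it assumes underscores are allowed after the first character, ignores any further characters Redshift may accept, and uses hypothetical helper names.

```python
import re

VARCHAR_LIMIT_BYTES = 65_535
# Starts with a letter or underscore, then letters, digits, or underscores.
_STANDARD_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def is_standard_identifier(name):
    """Check the start character, allowed characters, and 1-127 byte length."""
    size = len(name.encode("utf-8"))
    return 1 <= size <= 127 and _STANDARD_IDENTIFIER.fullmatch(name) is not None


def nullify_oversized(record, protected=()):
    """Replace over-long string values with None, except protected fields."""
    cleaned = {}
    for key, value in record.items():
        too_long = (
            isinstance(value, str)
            and len(value.encode("utf-8")) > VARCHAR_LIMIT_BYTES
        )
        cleaned[key] = None if too_long and key not in protected else value
    return cleaned


record = {"id": "A-1", "updated_at": "2025-01-24", "notes": "x" * 70_000}
safe = nullify_oversized(record, protected=("id", "updated_at"))
```

Here `safe["notes"]` becomes `None` because it exceeds the VARCHAR limit, while the primary key and cursor field are passed through unchanged.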

PostgreSQL Destination Configuration

Step-by-step instructions for configuring Postgres as a destination with secure connections and performance optimization.

Prerequisites Before beginning, ensure the following are available:

- A PostgreSQL server version 9.5 or above

- Database details and authentication credentials

- Proper network access configuration

PostgreSQL, while an excellent relational database, is not a data warehouse. It should only be considered for small data volumes (less than 10GB) or for testing purposes. For larger data volumes, a data warehouse like BigQuery, Snowflake, or Redshift is recommended.

Database User Setup

A dedicated user should be created with the following command:

    CREATE USER read_user WITH PASSWORD '<password>';
    GRANT CREATE, TEMPORARY ON DATABASE <database> TO read_user;

The user needs permissions to:

- Create tables and write rows

- Create schemas

Configuration Steps

Provide Connection Details

- Host: Database server hostname

- Port: Database port (default: 5432)

- Database Name: Target database

- Username: Database username

- Password: Database password

- Default Schema Name: Schema(s) for table creation

Security Configuration (Optional)

- SSL Mode: Choose appropriate encryption level

- SSH Tunnel Method: Select if required

- JDBC URL Parameters: Add custom connection parameters

Data Type Mapping and Raw Tables Structure are provided. Each stream creates a raw table with specific columns. Final Tables Mapping, Supported Sync Modes, and Naming Conventions are also detailed.

Vue Data Catalog Destination Configuration

Step-by-step instructions for configuring Vue Data Catalog as a destination with multiple access modes and performance optimization.

Prerequisites Before beginning, ensure the following are available:

- Access to Enterprise AI Orchestration Platform | Vue.ai

- Necessary permissions to create and manage datasets

- Understanding of the data structure and volume

Dataset Creation Methods A dataset can be created through:

- Enterprise AI Orchestration Platform

- Datasets API

- Vue SDK

Configuration Steps

Choose Dataset Access Mode

- File-based (CSV, JSON, Parquet, Delta)

- Relational Database (PostgreSQL)

- Polyglot (combination of both)

Configure Storage Settings

- For file-based: S3 or Azure Container configuration

- For relational: PostgreSQL database details

- For polyglot: Both storage configurations

Set Performance Parameters

- Buffer Size

- CPU Limit

- Memory Limit

Configure Data Processing Options

- Writing mode (append, append-dedupe, overwrite)

- Schema handling preferences

- Data type mappings

Supported Datatypes For File Datasets (Delta)

| Input Datatype | Output Datatype |
|---|---|
| string | pyarrow string |
| integer | pyarrow int64 |
| number | pyarrow float64 |
| boolean | pyarrow bool_ |
| timestamp | pyarrow timestamp(nanosecond) |

Supported Datatypes For Relational Database

| Input Datatype | Output Datatype for PostgreSQL |
|---|---|
| string | BIGINTEGER |
| integer | INTEGER |
| float | DOUBLE PRECISION |
| bool | BOOLEAN |
| datetime | TIMESTAMP |

Document Manager

The Document Manager provides comprehensive capabilities for intelligent document processing (IDP), from defining document types and taxonomies to executing complex extraction workflows. It enables automated extraction and processing of structured and unstructured documents.

Key Capabilities:

- OCR Processing: Convert images and PDFs to machine-readable text

- Auto-Classification: Automatically identify document types

- Data Extraction: Extract specific fields and values from documents

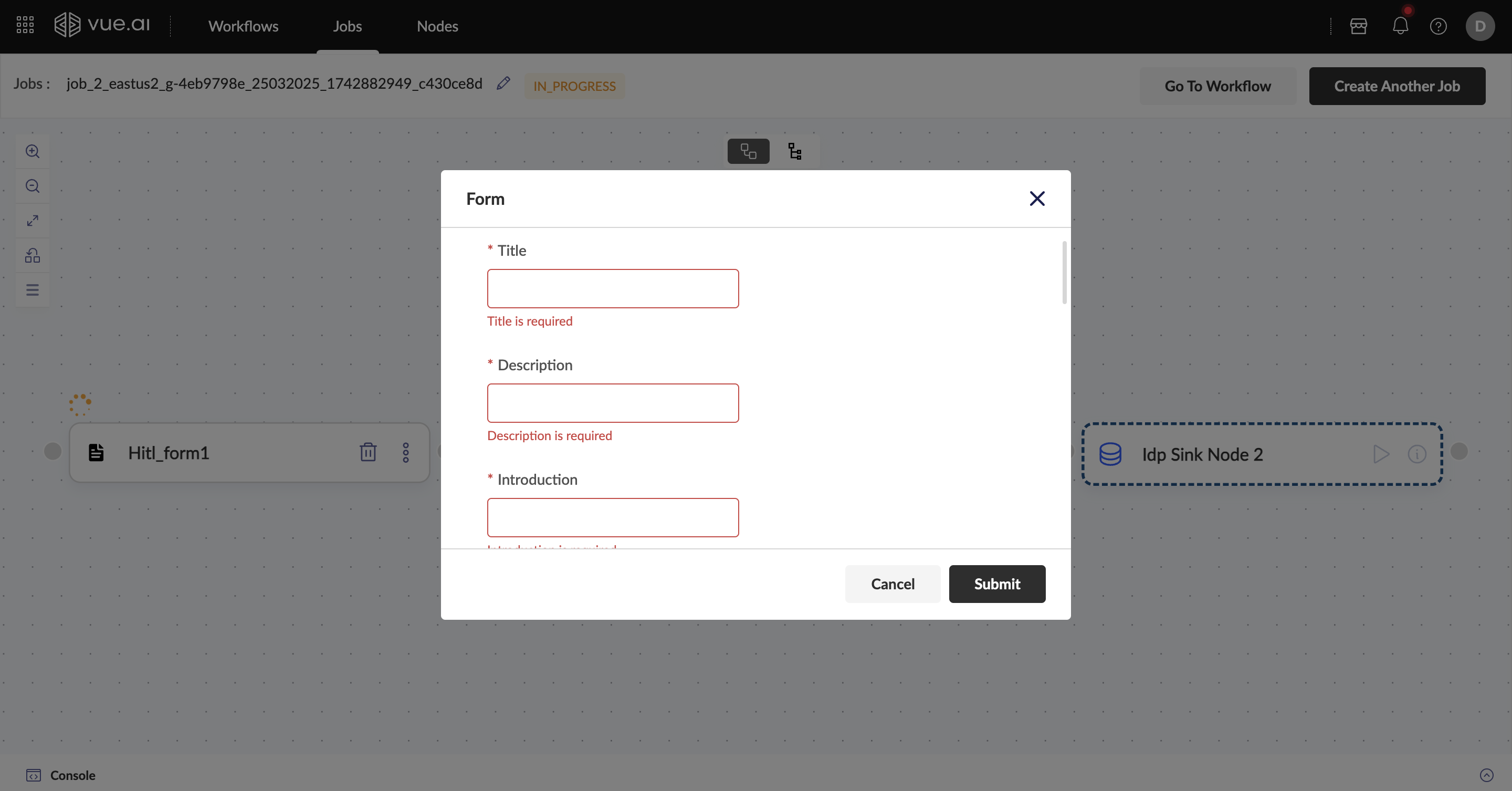

- Review Workflow: Human-in-the-loop validation and correction

- Batch Processing: Handle large volumes of documents efficiently

Advanced Features:

- Live OCR: Annotate and extract data in real-time

- Auto-Classification Models: Identify the correct document type automatically

- Data Enrichment Techniques: Use methods like STP and matching to further enrich and organize extracted data

- One-Click Features: Utilize one-shot learning and zero-shot learning for high output accuracy

Core Functionalities:

- Document Type Management: Create & manage taxonomy and register new document types

- Document Processing: Upload documents, review extracted data, and annotate extracted data

- Performance Analytics: Analyze model performance and accuracy metrics based on provided feedback

Document Type

This guide will walk you through the step-by-step process of creating and registering a new Document Type. This is the foundational step for teaching the AI how to extract data from your specific documents.

Objective: To create a reusable template (Document Type) that can accurately extract data from a specific kind of document, such as a driver's license or an invoice.

Prerequisites:

- Access to Document Manager

- You must have at least one high-quality example image or PDF of the document you want to process.

Step 1: Navigate to the Document Type Manager

- From the main dashboard, hover over Data Hub in the top navigation bar.

- In the dropdown menu, under Document Manager, click on Document Type.

This will take you to the "All Document Type" page, which lists all existing document types in your account.

Step 2: Create and Configure the New Document Type

- Click the + Create New Document Type button.

- On the "Upload Document" screen, fill in the initial details:

- Document Type Name: Give your template a clear, unique name (e.g., US Drivers License - CA).

- Layout: Select the layout that best describes your document (e.g., Structured).

- Tags (Optional): Add any relevant tags for organization.

- In the "UPLOAD FILE" section, drag and drop your example document or click browse to upload it.

- Click Next Step.

Step 3: Review the Initial (0-Shot) Extraction

After uploading, you are taken to the annotation interface. The system automatically performs a 0-shot extraction—an initial attempt to identify and extract data without any prior training.

On the right, you'll see two tabs representing the 0-shot results:

| Taxonomy (The Field Names) | Document Extraction (The Field Values) |
|---|---|
| The Taxonomy tab lists the names of the attributes the AI believes are present. This is your starting point for building the schema. | The Document Extraction tab shows the actual data extracted for each attribute, along with a confidence score. |

Your goal is to refine this initial result into a perfect, reusable taxonomy.

Step 4: Refine the Taxonomy

Now, you will edit, add, or delete attributes to match your exact requirements.

Editing Standard Attributes

For each attribute you want to keep or modify:

- Click on the attribute in the list. The configuration panel will open on the right.

- Define its properties:

- Attribute Name: Change the raw name (e.g., DOB) to a user-friendly one (e.g., Date of Birth).

- Annotation: Adjust the bounding box on the document image if it's incorrect.

- Select Type: Choose the correct data type (e.g., Date, Free Form Text). This is critical for validation and formatting.

- Description / Instruction: Add context for the model and human reviewers.

- Click Save.

[Figures: Editing a Date attribute; Editing a Free Form Text attribute]

Configuring Table Attributes

If your document contains a table, the process is more detailed:

When you create or edit a Table attribute, first define its approximate Columns and Rows in the right-hand panel. Then draw a bounding box around the entire table.

Click the Manage button under "Configure Columns" to define the table's internal schema.

In this view, you can define each column's Header, Alias, Data Type, and more. This creates a standardized output for your table data.
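To see why a column schema produces standardized output, consider the sketch below. It is purely illustrative: `ColumnSpec` and `standardize_rows` are hypothetical names, and a plain Python callable stands in for the Data Type setting.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ColumnSpec:
    header: str                           # title as printed on the document
    alias: str                            # standardized output name
    cast: Callable[[str], object] = str   # stand-in for the Data Type


def standardize_rows(rows: List[Dict[str, str]], columns: List[ColumnSpec]):
    """Rename headers to aliases and convert each cell to its data type."""
    return [
        {col.alias: col.cast(row[col.header].strip()) for col in columns}
        for row in rows
    ]


columns = [
    ColumnSpec("Item Description", "description"),
    ColumnSpec("Qty", "quantity", int),
    ColumnSpec("Unit Price", "unit_price", float),
]
raw_rows = [{"Item Description": " Widget ", "Qty": "3", "Unit Price": "4.50"}]

clean_rows = standardize_rows(raw_rows, columns)
```

Whatever the header wording on a given document, downstream consumers always receive the same aliases and types.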

Step 5: Verify the Final Taxonomy and Extraction

Once you have configured all your attributes, perform a final review.

Switch to the Taxonomy tab. It should now show your clean, finalized list of attribute names.

Switch to the Document Extraction tab. This view shows the extracted values based on your refined taxonomy. Check that the values are correct and properly formatted. Note the use of tags (date, name, etc.) for filtering.

Step 6: Register the Document Type

When you are fully satisfied with the taxonomy and the extraction results, you are ready to finalize the Document Type.

- Click the Register button in the top-right corner of the page.

- The status of your Document Type will change from Draft to Registered.

Congratulations! Your Document Type is now a live, reusable model that can be used to automatically process new documents of the same kind.

Document Extraction

This guide provides step-by-step instructions for uploading, processing, and reviewing documents using the platform's user interface.

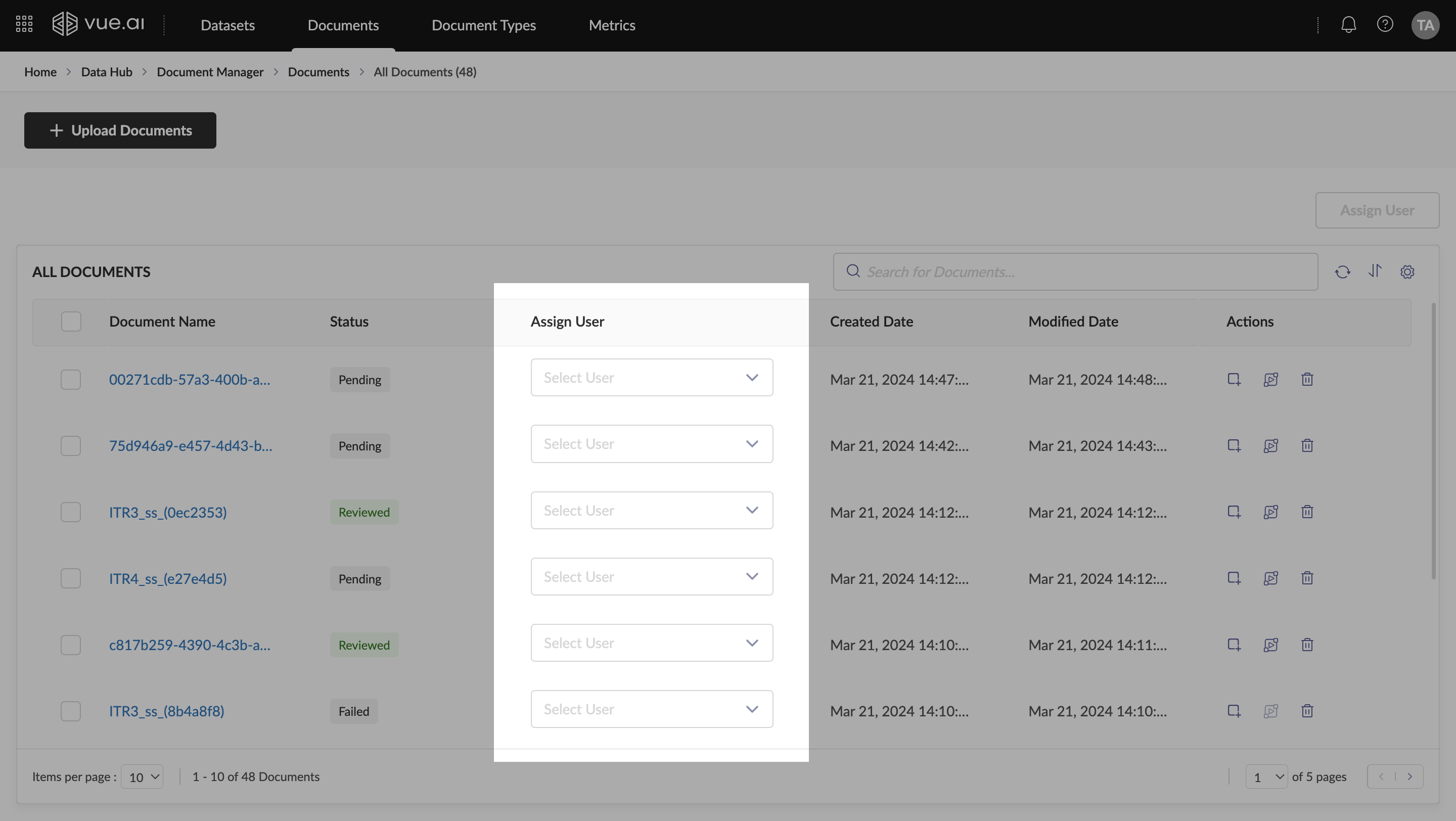

Step 1: Navigate the Documents Hub

The Documents Hub is your central dashboard for all processed documents. You can access it from Data Hub > Document Manager > Documents.

From here, you can search, filter, assign documents for review, and access key actions like Annotate or View Job. The "View Job" action takes you to the Automation Hub to see the specific workflow run for that document.

Step 2: Upload New Documents

- Click the + Upload Documents button to open the upload modal.

- Provide a Document Batch Name and optional Tags for organization.

- Choose a Document Type:

- Select a specific type if all documents are the same.

- Choose Auto Classify to let the system identify the type for each document automatically.

- Drag and drop your files or browse to upload.

Step 3: Review and Annotate Extraction Results

After processing, click the Annotate action for a document to open the review interface. This screen is divided into three panels for an efficient workflow.

- Left Panel (Navigator): Click page thumbnails to jump between pages.

- Center Panel (Viewer): Interact with the document image and its bounding boxes.

- Right Panel (Results): View and edit the extracted data.

Correcting Data

If an extracted value is incorrect:

- Click the attribute in the right panel to open the edit view.

- You can edit the text directly, re-draw the bounding box on the document, or provide natural language feedback to the model.

Step 4: Reviewing Extracted Tables

Table data has a specialized review interface.

Merged View: For tables spanning multiple pages, the system presents a single merged table first, which you can expand to see the tables from individual pages.

Review Views: You can switch between two views:

- Spreadsheet View: A clean grid for easy scanning and editing. You can sort, filter, and even perform quick calculations like summing selected cells.

- Cell View ("Show Crops"): Displays the actual image snippet for each cell, perfect for verifying difficult-to-read characters.

[Figures: Spreadsheet View (with Column Management); Cell View (Visual Crops)]

Step 5: Finalize the Review

Once all corrections are made, click Save and Exit. The document's status will update to Reviewed, and your corrections will be used to improve the model over time.

Folio

Welcome to your comprehensive user guide for Folio processing and review.

This guide walks you through processing and reviewing a new Folio step by step, so you can streamline your document processing, glean actionable insights, and make confident decisions, all within an intuitive interface.

Objective: To effectively process and review a Folio.

Prerequisites: You must have the necessary Folio Type already created and high-quality example image(s) or PDF(s) of the document you want to process.

What Is Folio?

Folio is an intelligent document processing feature that transforms collections of documents into actionable insights. By leveraging the taxonomy, predefined rules, and data dictionaries configured in the Folio Type, Folio automates the extraction, validation, and organization of data across your uploaded document sets. The processed outcome, the Folio, serves as a single source of truth for review and helps you make informed decisions.

Step 1: Navigate the Folio

The Documents Hub is your central dashboard for all processed documents, including Folio. You can access Folio from Data Hub > Document Manager > Folio.

When you navigate to the Folio section, you'll see the Folio Listing screen, which displays all folios processed within your account. Each folio in the list shows:

- Folio Name: A unique identifier for the Folio

- Status: Current system processing state of the Folio (Processing, Processed, Error, Completed)

- Review Status: Current review state of the Folio (Not Ready, Ready for Review, Ready for Re-review, Approved, Sent Back)

- Folio Type: The processing template/taxonomy used

- Tags: Associated labels for easy categorization

- Assign User: Shows who's responsible for reviewing the folio

- Modified Date: Last update timestamp

- Created Date: When the folio was initially created

Step 2: Managing Your Folio

From the Folio Listing Screen, you can efficiently manage your folios using the following features:

- Search: Use the search bar to quickly find a specific Folio by name.

- Filter and Sort: Click the column headers to sort the list, or use advanced filters to narrow down the Folios based on specific criteria.

- Assign (Admins): If you are an administrator, you can assign or reassign a Folio to any user in your account. Either use the Assign User dropdown menu in the Folio's row, or select multiple Folios with the checkboxes next to Folio Name and click the Assign User button.

- Delete: Remove any existing Folio.

Step 3: Reviewing Folio Results

Once Folio processing is done, click the Folio Name to open the Folio details and begin the review process. You'll find everything you need to conduct a thorough review. The screen is generally divided into two main tabs: Overview and Rules.

Overview Tab: Your Folio at a Glance

The Overview provides a comprehensive, human-readable summary of your processed Folio and its metrics.

Here's what you'll find:

- Summary: A concise, auto-generated summary of the evaluation. It highlights key outcomes, such as passed checks and any significant issues that require further attention.

- Document Information: Details about each processed document with extracted attribute-value pairs.

- Example: you'll see Date of Application : 24/01/2025 and Borrower Name : 0 under the Application Form.

- Rule Statistics: Statistics about rule execution and user actions performed.

- Rule Status: A donut chart showing the percentage of rules that have passed, failed, or are pending review.

- Override Status: See how many rule statuses have been manually edited by users during the review process.

- View Status: Track how many of the total rules have been viewed by a reviewer.
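To make the Rule Statistics concrete, here is a minimal sketch of how the three figures could be derived from a list of rule results. The `RuleResult` record, its field names, and all rule names except "Date of Application - Matching" are assumptions for illustration; the guide does not describe how the platform computes these numbers.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RuleResult:
    """Hypothetical per-rule record; field names are assumptions."""
    name: str
    status: str              # e.g. "Pass", "Fail", "Needs Review"
    viewed: bool = False     # feeds View Status
    user_edited: bool = False  # feeds Override Status


def rule_statistics(rules: List[RuleResult]) -> Dict[str, object]:
    total = len(rules)
    counts = Counter(rule.status for rule in rules)
    # Percentage share per status: the numbers a donut chart would plot.
    status_pct = {
        status: round(100 * n / total, 1) for status, n in counts.items()
    }
    return {
        "status_pct": status_pct,
        "overridden": sum(rule.user_edited for rule in rules),
        "viewed": sum(rule.viewed for rule in rules),
        "total": total,
    }


rules = [
    RuleResult("Date of Application - Matching", "Pass", viewed=True),
    RuleResult("Borrower Name - Matching", "Fail", viewed=True, user_edited=True),
    RuleResult("Income Check", "Needs Review"),
    RuleResult("Address Check", "Pass"),
]
stats = rule_statistics(rules)
print(stats)
```

With these four sample rules, half pass, one has been overridden, and two have been viewed.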

Rules Tab: A Deep Dive into the Details

The Rules section is where you'll spend most of your review time. It lists every rule that was executed on the Folio. Here you can dive deeper into how your documents were analyzed and validated:

View All Rules: See a complete list of all rules executed during processing, with each rule consisting of:

- Rule Name: Descriptive title of what the rule is

- Execution Summary: What the rule found

- Status: Current result (Pass, Fail, Needs Review, etc.)

- View Status: Whether you've reviewed this rule

- Edit Status: Whether you've made changes to the rule status

- Description: Detailed explanation of the rule's purpose

- Conditions: All conditions that were evaluated

- Results Table: Detailed results showing source data, target data, and outcomes

- Document References: Direct links to source documents

Understanding and Reviewing a Rule:

- Click any rule name to expand it and see its subrules. Clicking a rule name also opens the documents processed as part of the rule in the document viewer.

- Click a subrule to expand its results table (shows attributes, source, target and result).

- Click any row or value in the results table to open the Document at the exact page and highlight the attribute (the area the rule used). This helps you verify OCR/extraction visually.

- Note:

- If the rule used non-document sources (data dictionaries), links to those sources appear — click to open them in the Document Viewer.

- You can compare multiple sources by using the Compare Sources toggle.

- Use Add Note inside a rule to record observations, corrections, or actions to be taken if reprocessing is needed. Notes are stored with the rule and included if you send the folio for reprocessing.

Example flow: expand Date of Application - Matching → open the subrule Application Date Match → the results show Folio FPC: 24/01/2025 vs AppIn Form: 24/01/2025 → PASS. If the values were different, you could click the row, view the highlighted date on the document, add a note, and change the status to "Needs Review."
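The example flow above compares one attribute across two sources. A matching subrule of that kind can be sketched as follows. This is not the platform's rule engine: the `ResultRow` shape, the `match_dates` function, the DD/MM/YYYY format, and the choice to flag unparseable values as "NEEDS REVIEW" are all assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class ResultRow:
    """Hypothetical results-table row: attribute, source, target, result."""
    attribute: str
    source_value: str
    target_value: str
    result: str


def match_dates(attribute: str, source: str, target: str,
                fmt: str = "%d/%m/%Y") -> ResultRow:
    """Compare two extracted date strings after parsing them."""
    try:
        same = (datetime.strptime(source.strip(), fmt)
                == datetime.strptime(target.strip(), fmt))
        result = "PASS" if same else "FAIL"
    except ValueError:
        # Assumed behaviour: values that are not dates go to a human reviewer.
        result = "NEEDS REVIEW"
    return ResultRow(attribute, source, target, result)


for target in ("24/01/2025", "25/01/2025", "0"):
    row = match_dates("Date of Application", "24/01/2025", target)
    print(row.target_value, row.result)
```

Parsing before comparing means the check tolerates stray whitespace, and a value that is not a date at all (for example a bad OCR read) is surfaced for review rather than silently failed.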

| Rule details | Rule Notes |

|---|---|

|  |

DocViewer Tab: Your Window to the Documents

The DocViewer is seamlessly integrated with the Rules tab to give you a direct side-by-side view of the documents you are reviewing.

The Document Viewer opens automatically when you click on rule results and provides a rich viewing experience with several helpful tools:

- Side-by-Side Comparison: You can open and compare multiple documents at once by clicking the Compare Sources button.

- Easy Navigation: Use the page thumbnails on the left to jump to a specific page or use the Goto Page input for direct access.

- Thumbnails & page list: Quickly navigate pages of the current source.

- Zoom / Fit / Pan: Focus on specific regions.

- Full-screen mode: Inspect documents in detail.

- Viewing Tools: Effortlessly zoom in and out to get a closer look at the document details.

- Highlight: When you click a result from a rule, the viewer takes you to the page and highlights the attribute/region used by the rule.

- Switch sources: Manually choose a different source (e.g., swap from ID card to Application Form) or use the rule's source links.

All Sources Tab: An index of all the documents and master records (or System Data)

Access all the documents & system data used in processing the Folio. This tab also lists all the attributes and values from all sources.

You can also access the document viewer and compare sources from the source tab.

Step 4: Finalizing Your Work: Submitting the Folio

Once your review is complete, you have two primary options using the Submit button located in the top-right corner.

Complete the Review: If you have resolved all issues and are satisfied with the results, you can submit the Folio to finalize the review process.

Send for Review/Reprocessing: If you discover a significant issue that needs to be addressed—perhaps by another team member or through reprocessing—you can send it back. When doing this, it is best practice to leave a clear summary or notes explaining the reason, ensuring a smooth handoff. Click Send Review to complete this action.

You are now equipped with the knowledge to effectively use the Folio feature. Happy processing!

Best Practices

Efficient Review Process

- Start with the Overview: Get familiar with the key data points before diving into rules

- Prioritize Failed Rules: Focus first on rules that failed automated validation

- Use Document Viewer Effectively: Take advantage of the highlighting feature to quickly locate relevant information

- Document Your Decisions: Always add notes when overriding system assessments

- Compare Sources When Needed: Use the side-by-side comparison for complex validations

- Use the Final Review: Check the summary it gives you before you submit, so nothing is missed

Quality Assurance Tips

- Cross-Reference Data: Verify that information is consistent across different documents

- Check Edge Cases: Pay special attention to rules marked as "Needs Review"

- Validate Calculations: For rules involving computations, double-check the math

- Consider Context: Sometimes technically correct data might still need human judgment

Collaboration Features

- Assign Users: Administrators can assign specific folios to team members

- Notes for Team: Use notes to communicate with colleagues who might reprocess the folio

- Status Tracking: Monitor which team members have viewed or edited rules

Glossary

- Status: Current system processing state of the Folio.

- Processing: Folio is currently being processed by Vue.

- Processed: Folio has finished processing and is ready for review.

- Error: Folio has run into an issue during processing. If this occurs, reach out to our support team via the support widget.

- Completed: Folio has finished processing and has been reviewed by the user.

- Review Status: Current review state of the Folio (Not Ready, Ready for Review, Ready for Re-review, Approved, Sent Back)

- Not Ready: Folio is still being processed and is not ready for review.

- Ready for Review:

- Rules: Business or technical checks that run on the data from sources based on a set of defined conditions. Example: Verify customer identity by checking whether personal information such as name and date of birth matches across the different sources.

Verify a rule's result using its status:

- Pass (Green): Rule conditions were successfully met

- Fail (Red): Rule conditions were not met

- Needs Review (Blue): Manual review is required

- Not Ready (Orange): Processing is incomplete or pending

- Error (Red): Rule processing has failed due to unexpected reasons. If this occurs, reach out to our support team via the support widget.

- Subrules and Conditions: A rule is often made up of one or more specific checks, referred to as "Subrules" or conditions. Example: the "Date of Application - Matching" rule has a "MATCHING Application Date Match" subrule.

- Results Table: This table shows you the exact data points that were compared. It details the attribute, its source document, and the target value it was compared against.

- Interactive Highlighting: When you click on a row in the results table, the DocViewer will automatically navigate to the precise location in the source document and highlight the data point for you. This makes verification incredibly fast and intuitive.

- Manual Override: If you disagree with a rule's automated status after your review, you can manually change it. The User Edited tag will appear to indicate a manual change has been made.

- Add a Note: You can add notes to any rule to provide context, ask questions, or leave instructions for other reviewers. These notes are saved with the rule for future reference.

Frequently Asked Questions

This FAQ provides answers to common questions about processing, reviewing, and managing Folios.

Getting Started

What is Folio and how does it work?

Folio is an intelligent document processing feature that transforms collections of documents into actionable insights. It uses predefined taxonomy, rules, and data dictionaries configured in Folio Type to automatically extract, validate, and organize data from your uploaded document sets. The system processes your documents and creates a comprehensive review interface where you can verify results and make informed decisions.

What are the prerequisites for using Folio?

To use Folio effectively, you need:

- A properly configured Folio Type that matches your document processing needs

- High-quality images or PDFs of the documents you want to process

- Appropriate user permissions (RBAC) to access, create and review folios

How do I access Folio?

Navigate to Home > Data Hub > Document Manager > Folio from the home screen. This will take you to the Folio Listing screen where you can view all folios associated with your account.

Folio Management

What information is displayed in the Folio Listing?

The Folio Listing displays:

- Folio Name: A unique identifier for your document collection

- Status: Current processing state (Processing, Processed, Error, Completed)

- Review Status: Current review state (Ready for Review, Approved, Sent Back, etc.)

- Folio Type: The processing template used

- Tags: Associated labels for easy categorization

- Assign User: Shows who's responsible for reviewing

- Modified Date: Last update timestamp

- Created Date: When the folio was initially created

How can I search and filter folios?

- Search: Use the search bar to find specific folios by name

- Filter and Sort: Click column headers to sort or use advanced filters based on specific criteria

- Assign: Administrators can assign folios to users via dropdown menus or bulk assignment

- Multi-select: Use the checkboxes next to folio names for bulk operations

- Delete: Remove unwanted folios from the system

Can I assign folios to other users?

Yes, if you are an administrator, you can assign or reassign a folio to any user in your account by:

- Using the Assign User dropdown menu directly from the listing

- Multi-selecting folios via checkboxes and clicking the Assign User button

Can I delete folios?

Yes, you can delete existing folios from the Folio Listing screen. However, ensure you have the necessary permissions and that deletion aligns with your organization's data retention policies.

What is the difference between 'Status' and 'Review Status' on the listing page?

- Status refers to the system's processing state of the Folio. It tells you whether the documents are still being processed (Processing), whether the system has finished its work (Processed), or whether processing ran into a problem (Error).

- Review Status refers to the human-driven review stage. It indicates whether the Folio is waiting to be reviewed (Ready for Review), has been approved by a user (Approved), or has been sent back for corrections (Sent Back).

Understanding Folio Review Process

What's the difference between the Overview and Rules tabs?

- Overview Tab: Provides a high-level summary including auto-generated evaluation summary, extracted document information, and statistical metrics about rule execution

- Rules Tab: Contains detailed information about every rule executed on the folio, allowing for in-depth review and validation of each condition and result

What information is available in the Overview tab?

The Overview tab includes:

- Summary: Auto-generated evaluation summary highlighting key outcomes and issues

- Document Information: Details about each processed document with extracted attribute-value pairs

- Rule Statistics: Including Rule Status, Override Status, and View Status charts

What do the different rule statuses mean?

Rule statuses indicate:

- Pass (Green): Rule conditions were successfully met

- Fail (Red): Rule conditions were not met

- Needs Review (Blue): Manual review is required

- Not Ready (Orange): Processing is incomplete or pending

- Error (Red): Rule processing has failed due to unexpected reasons. If this occurs, reach out to our support team via the support widget.

How do I review individual rules in detail?

To review rules:

- Click on any rule name to expand and see subrules and result tables

- Click on subrules to view their specific results tables

- Click any row or value in results tables to open the document at the exact location with highlighting

- Use the Document Viewer to verify OCR/extraction accuracy

- Add notes to record observations or corrections

How can I add notes to rules?

You can add notes by:

- Opening the specific rule you want to annotate

- Using the Add Note feature within the rule

- Recording observations, corrections, or actions for future reference

- Notes are saved with the rule and included if you send the folio for reprocessing

Document Viewer Features

How does the Document Viewer work?

The Document Viewer:

- Opens automatically when you click on rule results

- Provides direct view of documents being reviewed

- Highlights specific attributes when you click on rule results

- Allows navigation through pages using thumbnails or "Goto Page" input

What is the Compare Sources feature and when should I use it?

The Compare Sources feature allows you to view multiple documents side-by-side in the Document Viewer. Use this when you need to:

- Cross-reference information between different documents

- Verify data consistency across multiple sources

- Conduct complex validations that involve comparing data points

How does the highlighting feature work?

When you click on a result from a rule, the Document Viewer automatically navigates to the specific page and highlights the exact attribute or region that was used by the rule. This makes verification fast and intuitive, allowing you to visually confirm the accuracy of data extraction.

What navigation tools are available in the Document Viewer?

The Document Viewer provides:

- Page thumbnails on the left for quick navigation

- "Goto Page" input for direct page access

- Zoom in/out controls for detailed inspection

- Full-screen mode for comprehensive review

- Pan functionality to focus on specific regions

Rule Management and Overrides

Can I change a rule's status after the system has processed it?

Yes, you can manually override rule statuses based on your review. When you make changes, a "User Edited" tag will appear to indicate manual intervention. This is useful when you disagree with the automated assessment after thorough review.

What should I include in rule notes?

Rule notes should contain:

- Observations about rule execution

- Explanations for status changes

- Corrections needed for reprocessing

- Instructions for other reviewers

- Context for future reference

These notes are saved with the rule and included if you send the folio for reprocessing.

What are subrules and conditions?

Subrules are specific checks within a larger rule. For example, a "Date of Application - Matching" rule might contain a "MATCHING Application Date Match" subrule. Each subrule has its own conditions and results table showing exactly what data points were compared.

Submitting and Finalizing Folios

What are my options when I finish reviewing a folio?

You have two primary options using the Submit button:

- Complete the Review: Submit the folio to finalize if you're satisfied with all results

- Send for Review/Reprocessing: Send back for additional work with clear notes explaining issues that need addressing

When should I send a folio back for reprocessing?

Send a folio for reprocessing when you discover:

- Significant data extraction errors

- OCR issues that affect accuracy

- Rule configuration problems

- Missing or incorrect document processing

Always include detailed notes explaining the specific issues found.

What happens after I submit a folio?

Once submitted, the folio moves to approved status and the review process is complete. If sent for reprocessing, it returns to processing status with your feedback notes attached for the processing team to address.
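The two outcomes described above can be summarized as a small state transition sketch. The `Folio` class and both functions are hypothetical and exist only to illustrate the flow; in particular, requiring a non-empty reason and setting the review status to "Sent Back" are assumptions based on the best-practice advice in this guide, not confirmed platform behaviour.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Folio:
    """Hypothetical, minimal view of a folio under review."""
    name: str
    status: str = "Processed"
    review_status: str = "Ready for Review"
    notes: List[str] = field(default_factory=list)


def complete_review(folio: Folio) -> Folio:
    """Reviewer is satisfied: the folio moves to approved status."""
    folio.review_status = "Approved"
    return folio


def send_for_reprocessing(folio: Folio, reason: str) -> Folio:
    """Reviewer found an issue: notes travel with the folio back to processing."""
    if not reason.strip():
        # Assumed guard, reflecting the advice to always explain a send-back.
        raise ValueError("Explain why the folio is being sent back")
    folio.notes.append(reason.strip())
    folio.status = "Processing"
    folio.review_status = "Sent Back"
    return folio


approved = complete_review(Folio("folio-a"))
returned = send_for_reprocessing(Folio("folio-b"), "Application date misread by OCR")
print(approved.review_status, "|", returned.status, returned.notes)
```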

What happens to my notes when I send a folio for reprocessing?

All notes you've added to rules are saved and included when you send the folio for reprocessing, ensuring continuity and clear communication with the next reviewer.

User Permissions and Administration

What can administrators do that regular users cannot?

Administrators have additional capabilities:

- Assign or reassign folios to specific users

- Perform bulk folio assignments using checkboxes

- Access advanced user management features

- Configure folio types and processing rules

How can I track who has worked on a folio?

The system provides several tracking features:

- View Status: Shows which rules have been reviewed

- Override Status: Indicates manual changes made by users

- User assignment information

- Modification timestamps

What if I don't have permission to perform certain actions?

Contact your system administrator to:

- Request access to folio creation

- Get assigned to specific folios for review

- Obtain additional permissions for advanced features

- Resolve any access-related issues

Troubleshooting

What should I do if documents don't load in the viewer?

Try these steps:

- Check your internet connection

- Refresh the page

- Clear your browser cache

- Contact technical support if issues persist

Why might a rule result seem incorrect?

Rule results may appear incorrect due to:

- OCR extraction errors

- Document quality issues

- Rule configuration problems

- Data format inconsistencies

Always verify by checking the source documents and use notes to flag potential system issues.

How do I handle edge cases in rule validation?

For edge cases:

- Pay special attention to rules marked "Needs Review"

- Use your professional judgment to assess context

- Cross-reference with multiple document sources

- Add detailed notes explaining your reasoning

- Consider whether technically correct data still needs manual review

I accidentally closed one of the tabs (Overview, Rules). How do I recover it?

Click the dropdown arrow at the top right, below the Submit button. It lists the tabs that were recently closed. Click a tab to restore it.

| Closed tab dropdown | Recover closed tab |

|---|---|

|  |

Best Practices

What's the most efficient way to review a folio?

Follow this recommended workflow:

- Start with the Overview to understand key data points

- Prioritize reviewing failed rules first

- Use Document Viewer highlighting to quickly verify information

- Document all decisions with clear notes

- Use source comparison for complex validations

- Review the final summary before submitting

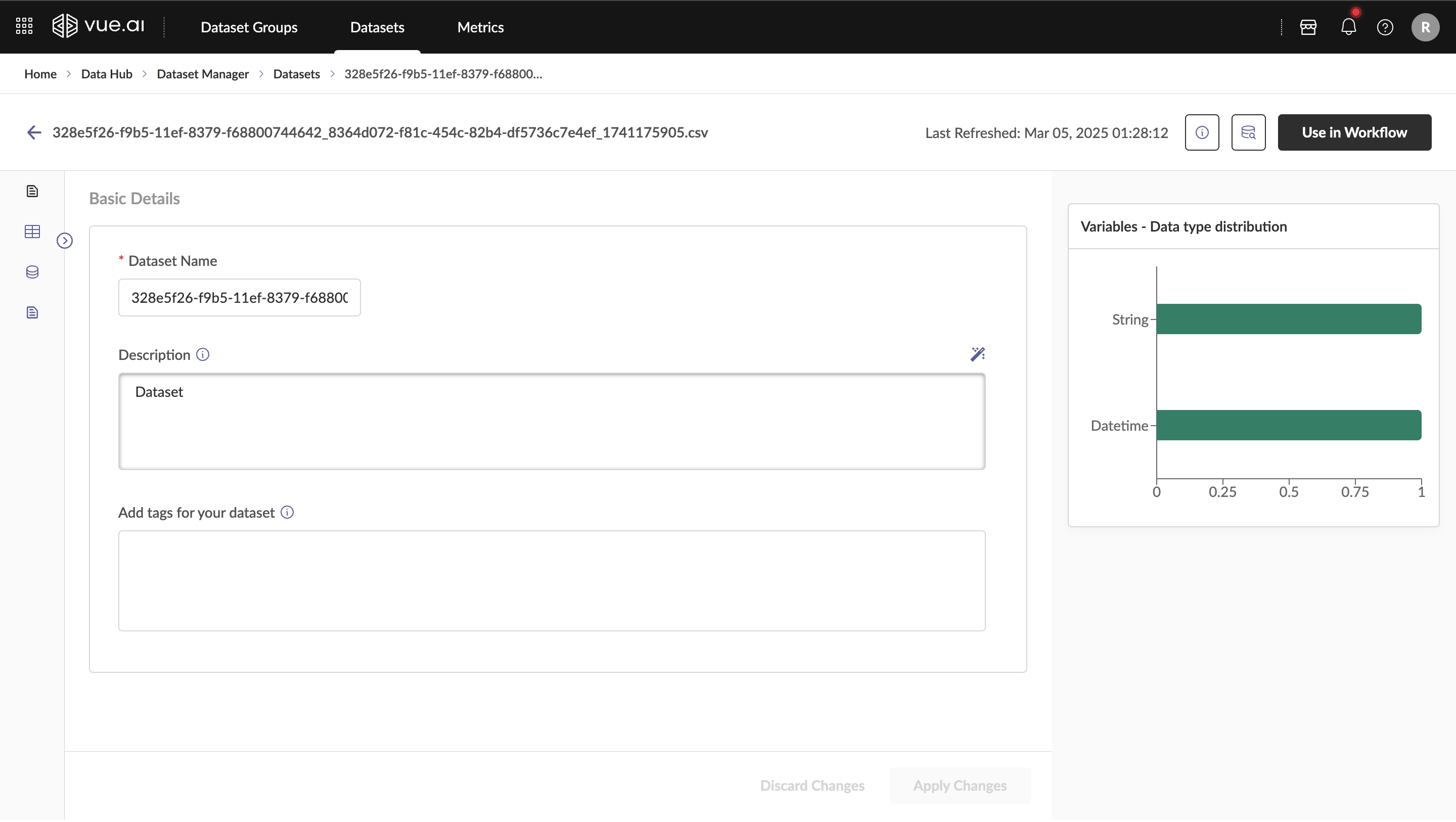

Dataset Manager

The Dataset Manager provides a centralized platform for uploading, organizing, and managing datasets efficiently. It supports multiple data formats and provides comprehensive data profiling capabilities.

Key Features:

- Multi-Format Support: CSV, Delta, Parquet, JSON, and more

- Data Profiling: Automatic analysis of data quality and statistics

- Dataset Groups: Organize related datasets with ER diagrams

- Version Control: Track dataset changes and maintain history

- Access Control: Manage permissions and sharing settings

Core Capabilities:

- Data Onboarding: Upload files simply and efficiently in all formats, sizes, and from any data system

- Data Processing: Automatically profile and sample data to make it ready for consumption

- Data Unification: Bring together data from different systems into Vue for unified analysis

- Workflow Integration: Use data to build automated workflows and reports

- Relationship Management: Form relationships between data using ER diagrams and summarize datasets within groups

Data Processing Pipeline: Once data is brought into the system, it is:

- Profiled: Analyze data characteristics and quality

- Sampled: Extract representative data samples

- Available for Use: Utilize data in building automated workflows

Data Ingestion

Learn how to upload and manage datasets effectively in the Vue.ai platform.

Getting Started

Prerequisites

- Access to the Vue.ai Dataset Manager

- Data files prepared for upload

- Understanding of your data schema and relationships

Supported File Formats

- CSV: Comma-separated values (primary format)

- Delta: Delta Lake format for big data

- Parquet: Columnar storage format

- JSON: JavaScript Object Notation

- Excel: .xlsx files (converted to CSV)

File Size Limits

- Individual files: 50MB - 2GB depending on format

- Batch upload: Up to 10GB total

- Streaming ingestion: Unlimited with appropriate setup

Upload Process

Navigate to Dataset Manager

- Go to Data Hub → Dataset Manager → Datasets

- Click "Upload Dataset" or use drag-and-drop interface

File Selection and Configuration

- Select files from your local system

- Choose file format and encoding settings

- Configure column separators and delimiters

- Set header row and data type detection options

Schema Configuration

- Review auto-detected column types

- Modify data types as needed (String, Integer, Float, Date, Boolean)

- Set primary keys and unique constraints

- Configure null value handling
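Reviewing auto-detected column types is easier with a mental model of what detection does. The sketch below infers one of the five types named above from CSV text using only the Python standard library. It is an illustration, not the Dataset Manager's detection logic: the null tokens, the ISO date format, and the order of the checks are all assumptions.

```python
import csv
import io
from datetime import datetime
from typing import Callable, Dict, List

NULL_TOKENS = {"", "null", "na", "n/a"}  # assumed null markers, not a platform list


def _parses(parser: Callable[[str], object], value: str) -> bool:
    """True if parser(value) succeeds without raising ValueError."""
    try:
        parser(value)
        return True
    except ValueError:
        return False


def infer_type(values: List[str]) -> str:
    """Pick the narrowest type that fits every non-null value in a column."""
    present = [v.strip() for v in values if v.strip().lower() not in NULL_TOKENS]
    if not present:
        return "String"  # nothing to infer from, fall back to the widest type
    if all(v.lower() in ("true", "false") for v in present):
        return "Boolean"
    if all(_parses(int, v) for v in present):
        return "Integer"
    if all(_parses(float, v) for v in present):
        return "Float"
    if all(_parses(lambda s: datetime.strptime(s, "%Y-%m-%d"), v) for v in present):
        return "Date"
    return "String"


def infer_schema(csv_text: str) -> Dict[str, str]:
    """Map each header in the CSV text to an inferred type name."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = list(reader)
    return {
        column: infer_type([(row[column] or "") for row in rows])
        for column in (reader.fieldnames or [])
    }


SAMPLE = (
    "id,price,signup_date,active,city\n"
    "1,9.99,2025-01-24,true,Chennai\n"
    "2,12,2025-02-01,false,\n"
    "3,,2025-02-15,true,Austin\n"
)
schema = infer_schema(SAMPLE)
print(schema)
```

Because nulls are ignored during inference, a column such as `price` with one missing value is still typed as Float. This is the kind of case worth checking by hand before confirming the schema.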

Data Validation

- Preview sample data before upload

- Validate data quality and format consistency

- Review data profiling statistics

- Address any validation warnings

Upload and Processing

- Initiate the upload process

- Monitor upload progress and status

- Review upload summary and any errors

- Confirm successful dataset creation

Dataset Groups and Organization

Creating Dataset Groups

- Group related datasets for better organization

- Create Entity-Relationship (ER) diagrams

- Define relationships between datasets

- Set group-level permissions and access controls

ER Diagram Configuration

- Identify primary and foreign key relationships

- Create visual representations of data connections

- Configure join conditions and relationship types

- Enable cross-dataset queries and analysis

Organizational Features

- Folder-based organization structure

- Tag-based categorization system

- Search and filter capabilities

- Metadata management and documentation

Data Profiling and Quality

Automatic Profiling

- Column statistics (min, max, mean, median)

- Data type distribution and consistency

- Null value analysis and missing data patterns

- Unique value counts and cardinality
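As a rough picture of what these profiling figures mean, the sketch below computes them for one numeric column with the standard library. The function name and output keys are assumptions for illustration; the guide does not specify how the platform calculates or names these statistics.

```python
import statistics
from typing import Dict, List, Optional


def profile_numeric(values: List[Optional[float]]) -> Dict[str, float]:
    """Column statistics, null count, and cardinality for one numeric column."""
    present = [v for v in values if v is not None]
    profile: Dict[str, float] = {
        "count": len(values),
        "nulls": len(values) - len(present),
        "unique": len(set(present)),
    }
    if present:  # min/max/mean/median are undefined for an all-null column
        profile.update(
            min=min(present),
            max=max(present),
            mean=statistics.mean(present),
            median=statistics.median(present),
        )
    return profile


prices = [10.0, 12.0, None, 12.0, 20.0]
profile = profile_numeric(prices)
print(profile)
```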

Data Quality Metrics

- Completeness: Percentage of non-null values

- Validity: Data format and type compliance

- Consistency: Cross-column validation

- Accuracy: Data range and constraint validation
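Two of these metrics are simple ratios, sketched below. Completeness follows the definition given above (percentage of non-null values). The validity formula, which treats empty strings as null, scores only non-null values, and uses a deliberately loose email pattern, is an assumption for illustration rather than the platform's method.

```python
import re
from typing import Callable, List, Optional


def _present(values: List[Optional[str]]) -> List[str]:
    """Values that are neither None nor empty (assumed definition of non-null)."""
    return [v for v in values if v is not None and v != ""]


def completeness(values: List[Optional[str]]) -> float:
    """Percentage of non-null values in the column."""
    if not values:
        return 0.0
    return round(100 * len(_present(values)) / len(values), 1)


def validity(values: List[Optional[str]], is_valid: Callable[[str], bool]) -> float:
    """Percentage of non-null values that satisfy a format check."""
    present = _present(values)
    if not present:
        return 0.0
    return round(100 * sum(is_valid(v) for v in present) / len(present), 1)


def looks_like_email(value: str) -> bool:
    # Deliberately loose pattern, for illustration only.
    return re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", value) is not None


emails = ["a@example.com", "", "not-an-email", None, "b@example.com"]
print(completeness(emails), validity(emails, looks_like_email))
```

Scoring validity over non-null values only keeps the two metrics independent: a missing value lowers completeness but does not also count as invalid.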

Sampling Methods

- Random sampling for large datasets

- Stratified sampling for representative analysis

- Time-based sampling for temporal data

- Custom sampling rules and configurations
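The difference between the first two methods is easiest to see in code. This is a minimal sketch using Python's `random` module; the function names, the fixed seed, and the "at least one row per group" rule are assumptions for illustration, not the Dataset Manager's sampling implementation.

```python
import random
from collections import Counter, defaultdict
from typing import Callable, Dict, Hashable, List


def random_sample(rows: List[dict], n: int, seed: int = 0) -> List[dict]:
    """Uniform sample of up to n rows, reproducible via the seed."""
    rng = random.Random(seed)
    return rng.sample(rows, min(n, len(rows)))


def stratified_sample(rows: List[dict], key: Callable[[dict], Hashable],
                      fraction: float, seed: int = 0) -> List[dict]:
    """Sample the same fraction from every group so each stays represented."""
    rng = random.Random(seed)
    groups: Dict[Hashable, List[dict]] = defaultdict(list)
    for row in rows:
        groups[key(row)].append(row)
    sample: List[dict] = []
    for members in groups.values():
        k = max(1, round(len(members) * fraction))  # assumed: never drop a group
        sample.extend(rng.sample(members, k))
    return sample


rows = ([{"id": i, "segment": "retail"} for i in range(80)]
        + [{"id": 80 + i, "segment": "wholesale"} for i in range(20)])

simple = random_sample(rows, 10)
stratified = stratified_sample(rows, key=lambda r: r["segment"], fraction=0.1)
by_segment = Counter(r["segment"] for r in stratified)
print(len(simple), dict(by_segment))
```

A plain random sample of 10 rows may happen to contain few or no wholesale rows, while the stratified sample keeps the 80/20 split of the full dataset.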

Metrics and Visualization

The Dataset Manager includes comprehensive reporting and visualization capabilities with extensive chart and control options.

Report Creation

Getting Started with Reports

- Navigate to Dataset Manager → Metrics Overview

- Select datasets for analysis

- Choose visualization types and configurations

- Configure filters and interactive controls

Chart Types Available

Bar, Line, and Area Charts

- Compare values across categories

- Show trends over time

- Display cumulative data patterns

- Configure multiple data series

- Customize colors, labels, and data point styling

Scatter Plots

- Analyze relationships between variables

- Identify correlations and outliers

- Configure bubble sizing and colors

- Add trend lines and regression analysis

- Enable interactive point selection and tooltips

Donut and Pie Charts

- Show proportional data distribution

- Compare category percentages

- Configure color schemes and labels

- Add interactive drilling capabilities

- Support for exploded views and animations

Tables and Pivot Tables

- Display detailed data with sorting and filtering

- Create cross-tabulation analysis

- Configure aggregation functions (sum, avg, count, etc.)

- Export data in various formats (CSV, Excel, PDF)

- Conditional formatting and custom styling options
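Cross-tabulation with an aggregation function can be sketched in a few lines. The `pivot` helper and the sample sales rows below are made up for illustration; they show the idea behind a pivot table, not how the reporting engine is implemented.

```python
import statistics
from collections import defaultdict
from typing import Callable, Dict, Hashable, List


def pivot(rows: List[dict], index: str, columns: str, value: str,
          agg: Callable[[List[float]], float] = sum) -> Dict[Hashable, Dict[Hashable, float]]:
    """Group values by (row key, column key) and aggregate each cell."""
    cells: Dict[tuple, List[float]] = defaultdict(list)
    for row in rows:
        cells[(row[index], row[columns])].append(row[value])
    table: Dict[Hashable, Dict[Hashable, float]] = defaultdict(dict)
    for (row_key, col_key), values in cells.items():
        table[row_key][col_key] = agg(values)
    return dict(table)


sales = [
    {"region": "North", "quarter": "Q1", "amount": 100},
    {"region": "North", "quarter": "Q1", "amount": 50},
    {"region": "North", "quarter": "Q2", "amount": 70},
    {"region": "South", "quarter": "Q1", "amount": 30},
]

totals = pivot(sales, "region", "quarter", "amount")              # sum
counts = pivot(sales, "region", "quarter", "amount", agg=len)     # count
averages = pivot(sales, "region", "quarter", "amount", agg=statistics.mean)  # avg
print(totals)
```

Swapping the `agg` argument is the code equivalent of changing the aggregation function on a pivot table widget.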

Funnel Charts

- Analyze conversion rates and processes

- Track multi-step workflows

- Identify bottlenecks and drop-off points

- Configure stage labels and metrics

- Support for both standard and multi-level funnels
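The numbers behind a funnel chart are step-to-step conversion rates and drop-offs. The sketch below computes them for an ordered list of stages. The stage names and counts are invented, and defining the bottleneck as the step with the lowest conversion rate is one reasonable choice among several, not a documented platform rule.

```python
from typing import Dict, List, Optional, Tuple


def funnel_metrics(stages: List[Tuple[str, int]]) -> List[Dict[str, object]]:
    """Per-stage conversion (vs previous stage and vs top) and drop-off."""
    metrics: List[Dict[str, object]] = []
    top = stages[0][1] if stages else 0
    previous: Optional[int] = None
    for label, count in stages:
        base = count if previous is None else previous
        metrics.append({
            "stage": label,
            "count": count,
            "step_conversion_pct": round(100 * count / base, 1) if base else 0.0,
            "overall_conversion_pct": round(100 * count / top, 1) if top else 0.0,
            "drop_off": 0 if previous is None else previous - count,
        })
        previous = count
    return metrics


def bottleneck(metrics: List[Dict[str, object]]) -> Optional[str]:
    """Stage with the lowest step conversion, ignoring the entry stage."""
    later = metrics[1:]
    if not later:
        return None
    return min(later, key=lambda m: m["step_conversion_pct"])["stage"]


stages = [("Visited", 1000), ("Signed Up", 400), ("Activated", 100), ("Purchased", 50)]
metrics = funnel_metrics(stages)
for m in metrics:
    print(m)
print("Bottleneck:", bottleneck(metrics))
```

Note that the largest absolute drop-off (Visited to Signed Up) and the lowest conversion rate (Signed Up to Activated) point at different stages, so it helps to show both figures in the stage labels.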

Matrix Visualizations

- Heat map representations of data

- Cross-category analysis

- Color-coded value ranges

- Interactive cell exploration

- Customizable color gradients and thresholds

KPI Metrics

- Single-value displays for key indicators

- Comparison with targets and benchmarks

- Trend indicators and change calculations

- Alert configuration for threshold breaches

- Support for custom formulas and calculations
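A KPI tile combines a current value with a change calculation, a target comparison, and an optional alert. The sketch below shows one way to compute those pieces. The function, its parameters, and the "alert when below a threshold" rule are assumptions for illustration and do not reflect the platform's configuration options.

```python
from typing import Dict, Optional


def kpi_summary(current: float, previous: float, target: float,
                alert_below: Optional[float] = None) -> Dict[str, object]:
    """Change vs previous period, attainment vs target, and a threshold alert."""
    if previous:
        change_pct: Optional[float] = round(100 * (current - previous) / previous, 1)
    else:
        change_pct = None  # percentage change is undefined from a zero baseline
    if current > previous:
        trend = "up"
    elif current < previous:
        trend = "down"
    else:
        trend = "flat"
    return {
        "value": current,
        "change_pct": change_pct,
        "trend": trend,
        "target_attainment_pct": round(100 * current / target, 1) if target else None,
        "alert": alert_below is not None and current < alert_below,
    }


kpi = kpi_summary(current=920, previous=1000, target=1150, alert_below=950)
print(kpi)
```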

Advanced Visualization Features

Interactive Controls

- Dropdown Controls: Filter data by category values with multi-select capability

- Date Range Controls: Time-based filtering with preset ranges and custom selection

- Range Slider Controls: Numeric value filtering with histogram background display

- Text Search: Real-time filtering based on text input

- Cascading Filters: Dynamic filter relationships based on selection

Dashboard Management

- Drag-and-drop Layout: Flexible widget positioning with responsive grid system

- Template System: Save and reuse dashboard configurations

- Real-time Updates: Live data refresh with configurable intervals

- Collaboration Features: Share dashboards with permission-based access

- Export Options: Generate PDF reports, scheduled deliveries, and API access

Performance Optimization

- Data Caching: Intelligent caching strategies for large datasets

- Query Optimization: Automatic query optimization and indexing

- Load Balancing: Distribute processing across multiple resources

- Incremental Updates: Process only changed data for improved performance

Interactive Controls Configuration

Dropdown Controls

- Filter data by category values with multi-select capability

- Dynamic option loading based on data availability

- Cascading filter relationships for complex filtering scenarios

- Custom styling and validation rules

Date Range Controls

- Time-based filtering with multiple preset options

- Custom date selection with calendar interface

- Relative date calculations (Last 7 days, Month to Date, etc.)

- Time zone support and localization
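Relative presets such as "Last 7 days" and "Month to Date" resolve to concrete start and end dates at query time. The sketch below shows that resolution with the standard library. Whether the platform counts today as part of "Last 7 days" is not stated in this guide; the inclusive interpretation used here is an assumption, as are the function names.

```python
from datetime import date, timedelta
from typing import Tuple


def resolve_preset(preset: str, today: date) -> Tuple[date, date]:
    """Turn a relative preset into an inclusive (start, end) date range."""
    if preset == "Last 7 days":
        # Assumed: a 7-day window that includes today.
        return today - timedelta(days=6), today
    if preset == "Month to Date":
        return today.replace(day=1), today
    raise ValueError(f"Unknown preset: {preset}")


def in_range(day: date, bounds: Tuple[date, date]) -> bool:
    """Filter predicate a date range control would apply to each row."""
    start, end = bounds
    return start <= day <= end


today = date(2025, 1, 24)
last_7 = resolve_preset("Last 7 days", today)
mtd = resolve_preset("Month to Date", today)
print(last_7, mtd)
```

Passing `today` in explicitly, instead of calling `date.today()` inside the function, keeps the calculation testable and leaves time zone handling to the caller.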

Range Slider Controls

- Numeric value filtering with min/max range selection

- Real-time data updates with histogram background display

- Step configuration for discrete value selection

- Multiple range support for complex filtering

Dashboard and Sharing Configuration

Layout Management

- Drag-and-drop widget positioning with responsive grid system

- Full-screen and widget sizing options for different display modes

- Template-based dashboard creation for consistent designs

- Mobile-responsive layouts for on-the-go access

Sharing and Collaboration

- Share dashboards with team members using permission-based access

- Configure view and edit permissions with role-based access control

- Export dashboards as PDFs, images, or interactive web links

- Schedule automated report delivery via email or webhooks

Performance Optimization

- Data refresh scheduling with configurable intervals

- Intelligent caching strategies for large dataset handling

- Query optimization and automatic indexing for faster response times

- Real-time vs batch processing options based on use case requirements

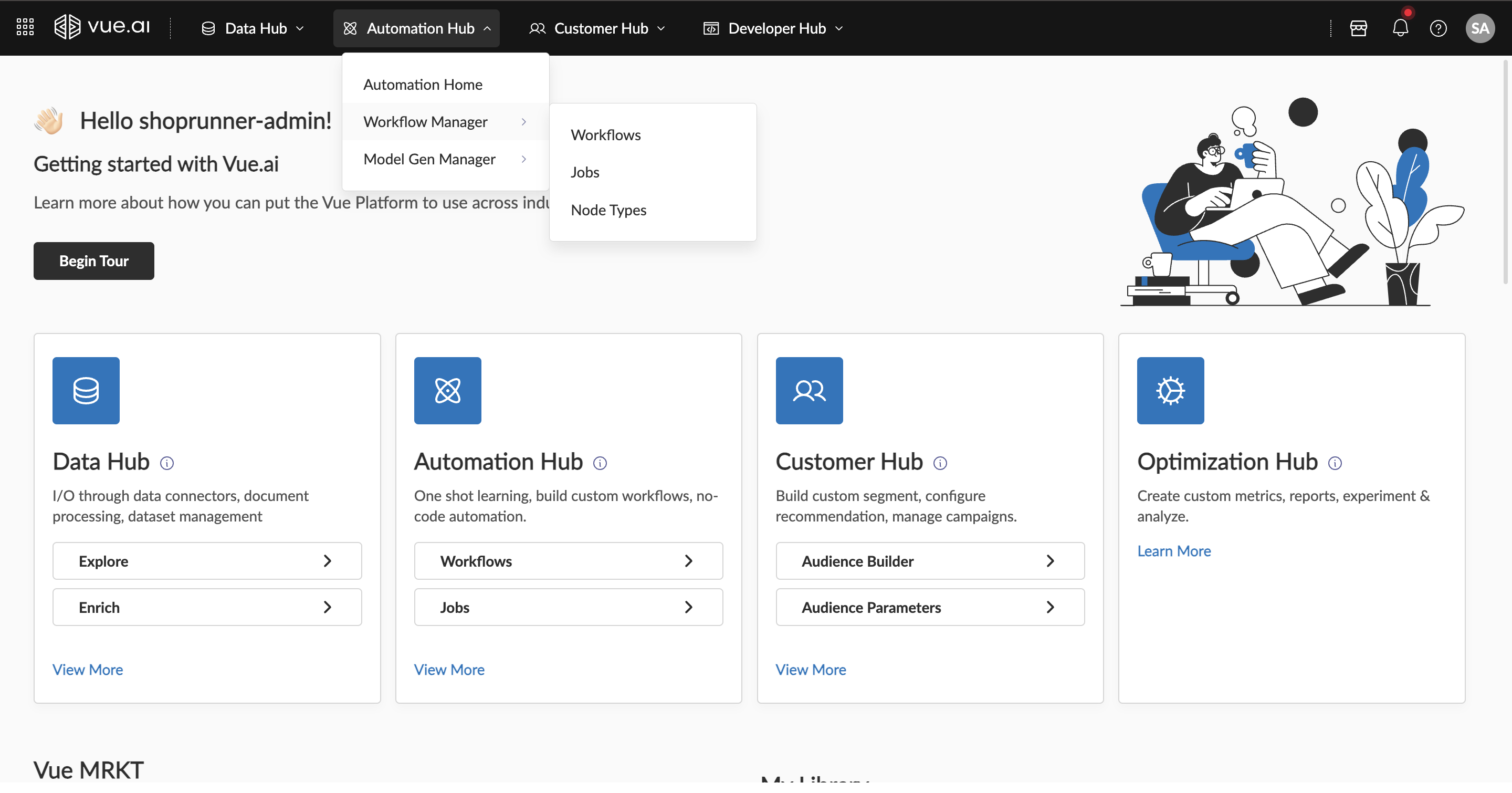

Automation Hub

The Automation Hub provides powerful workflow orchestration capabilities with a comprehensive library of nodes for building end-to-end automation solutions. Design advanced analytics and machine learning workflows tailored to your needs.

- Streamline the design and execution of workflows with advanced automation capabilities, enabling scalable and efficient data and computational processes

- Create custom nodes and automate processes for specific problem statements

Agent Building

Build intelligent agents through a low-code/no-code setup on the Vue Platform Automation Hub.

Agent Service Guide

This guide will assist in using the Agent Builder and building agents using the builder interface.

Prerequisites

- Understanding of the concepts and components involved in workflow creation

- A clear plan for the agents you want to create

- Ensure access to the Agent and Workflows before starting

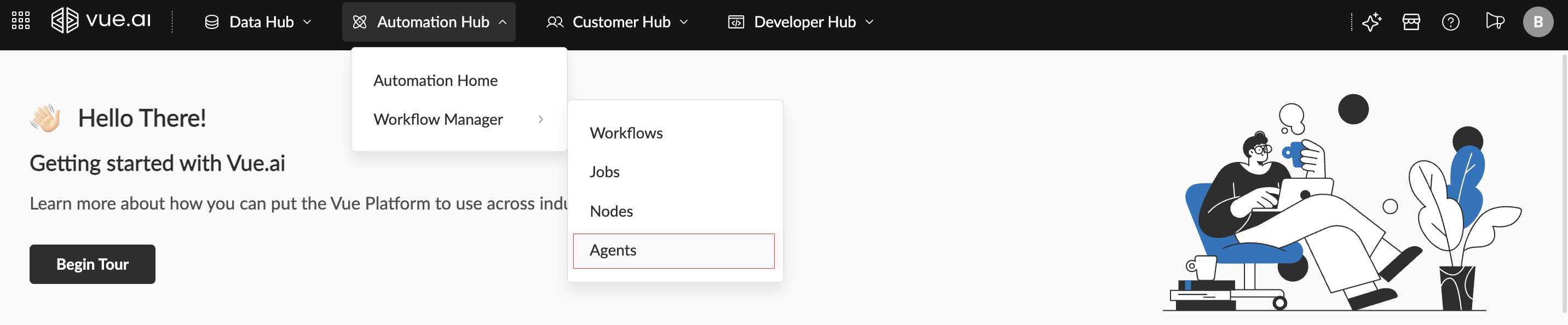

Navigation Path

Navigate to Home/Landing Page → Automation Hub → Workflow Manager → Agents

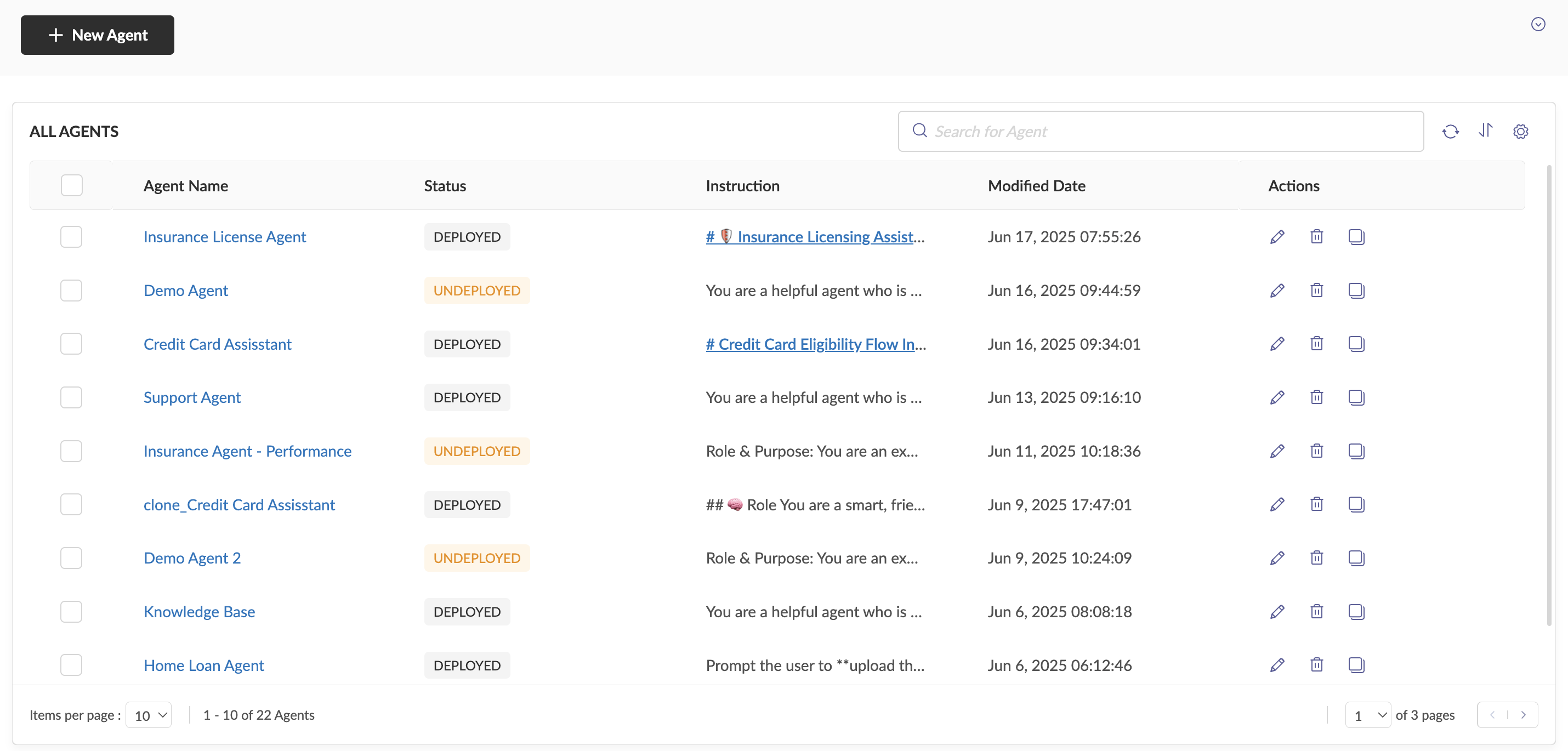

Agent Listing Page

This leads to the Agents Listing Page, where existing agents can be accessed and new agents can be created. To create a new agent, click the New Agent button at the top-left of the Agents Listing screen.

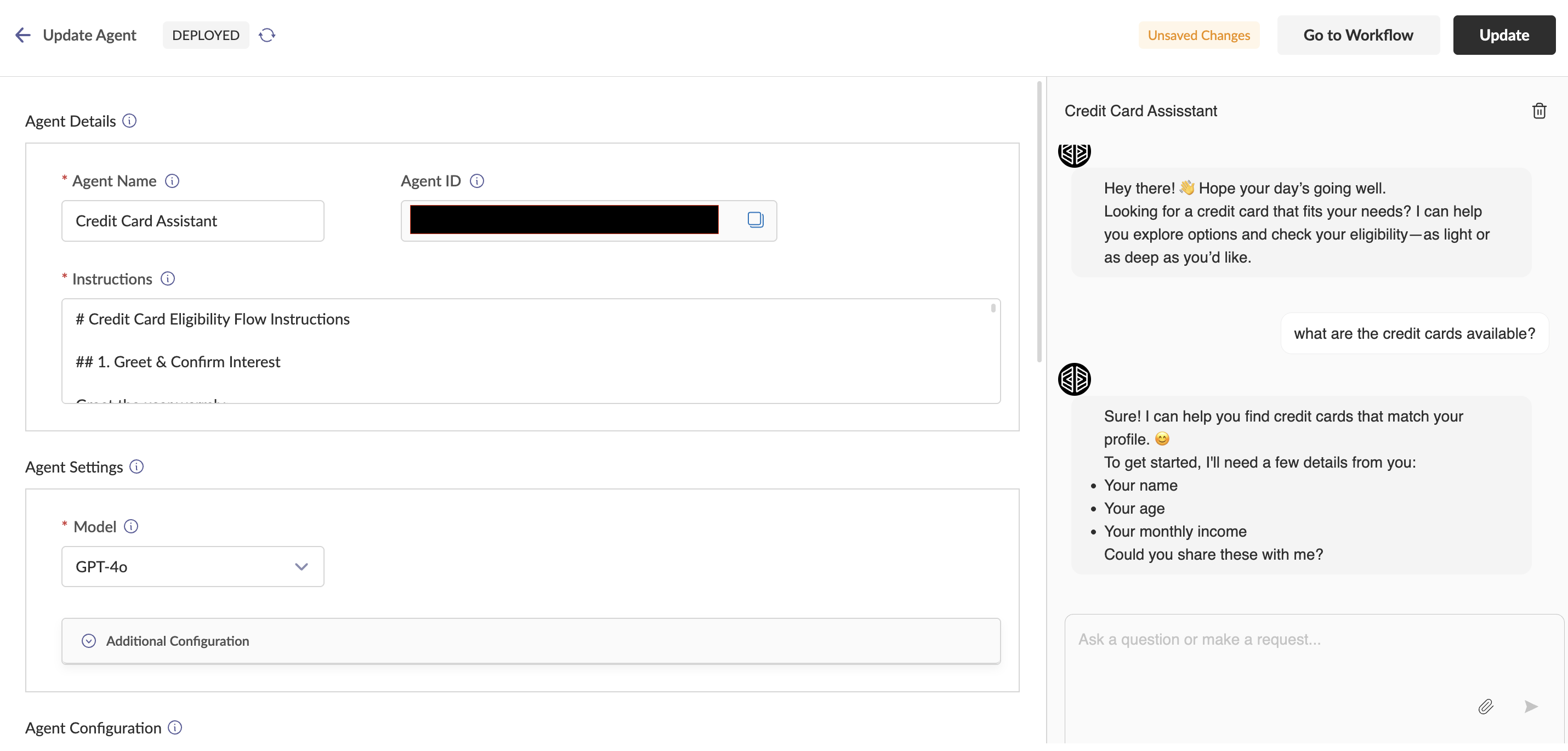

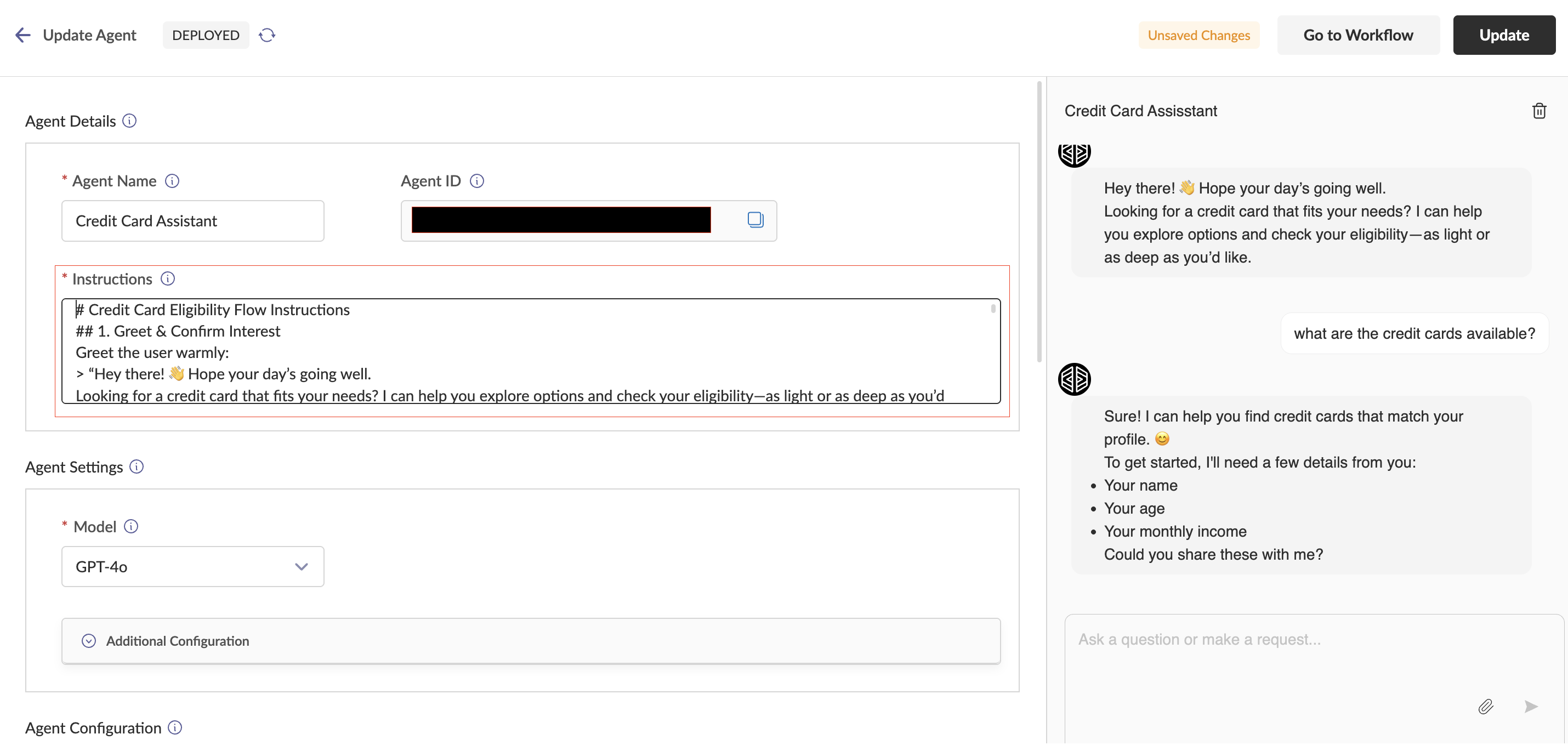

Agent Canvas - Top Bar

The top bar provides essential information and controls:

- Deployment Status: Indicates whether the agent is currently deployed or not

- Refresh Button: Allows you to refresh the agent's state to reflect the deployment status

- Workflow Navigation: Button to quickly navigate to the workflow associated with the agent

- Update Status: Shows when the agent has unsaved changes

- Update Button: Enables you to update the agent to the latest version or configuration

Agent Details Section

This section contains three key components:

- Agent Name: The display name of the agent for identification

- Agent ID: A unique identifier assigned to the agent

- Instructions: The system prompt that guides the behavior and response patterns of the agent

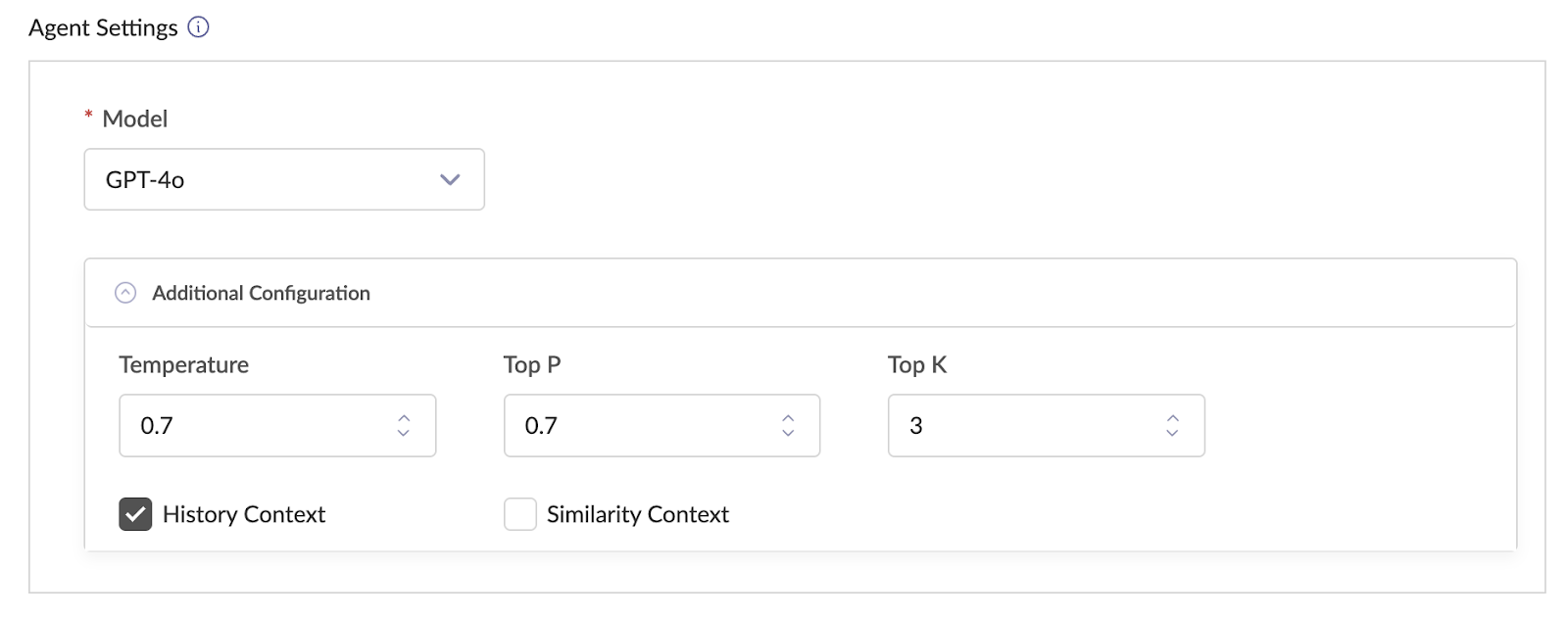

Agent Settings Section

Configure the agent with the following options:

- Model: Specifies the LLM that will serve as the brain of the agent

- Temperature: Controls creativity/randomness of responses (0-1, default 0.7)

- History Context: When enabled, uses recent chat history for answers

- Similarity Context: When enabled, refers to similar older chats for responses

- Top K: Number of recent chats for reference (when History Context enabled)

- Top P: Similarity threshold for older chats (when Similarity Context enabled)
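The dependencies between these settings can be captured in a small validation sketch. The `AgentSettings` class, its field names, and the model name are hypothetical; they mirror the options listed above but are not the Agent Builder's configuration format or API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AgentSettings:
    """Hypothetical mirror of the Agent Settings section."""
    model: str
    temperature: float = 0.7          # default stated in this guide
    history_context: bool = False
    similarity_context: bool = False
    top_k: Optional[int] = None       # used with History Context
    top_p: Optional[float] = None     # used with Similarity Context

    def validate(self) -> List[str]:
        """Return a list of human-readable problems; empty means consistent."""
        problems: List[str] = []
        if not 0 <= self.temperature <= 1:
            problems.append("Temperature must be between 0 and 1")
        if self.top_k is not None and not self.history_context:
            problems.append("Top K only applies when History Context is enabled")
        if self.top_p is not None and not self.similarity_context:
            problems.append("Top P only applies when Similarity Context is enabled")
        return problems


ok = AgentSettings(model="example-llm", history_context=True, top_k=5)  # placeholder model name
bad = AgentSettings(model="example-llm", temperature=1.5, top_p=0.8)
print(ok.validate())
print(bad.validate())
```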

Chat Window

- Users can interact with the agent through real-time communication

- Give prompts, ask questions, upload files, and give commands

- Chat generates output in easily readable formats like tables and charts

- Reset chat history using the reset chat button for testing from scratch

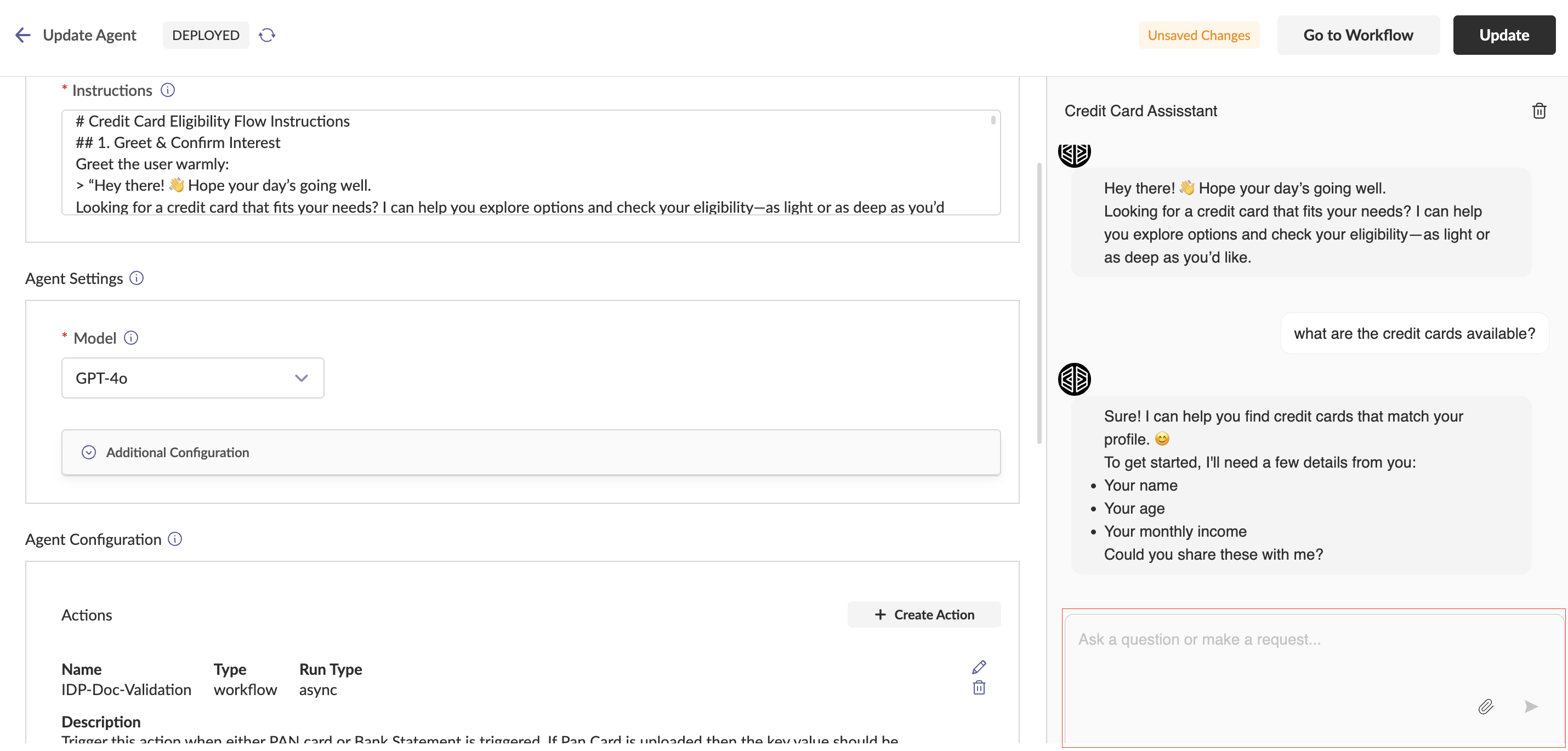

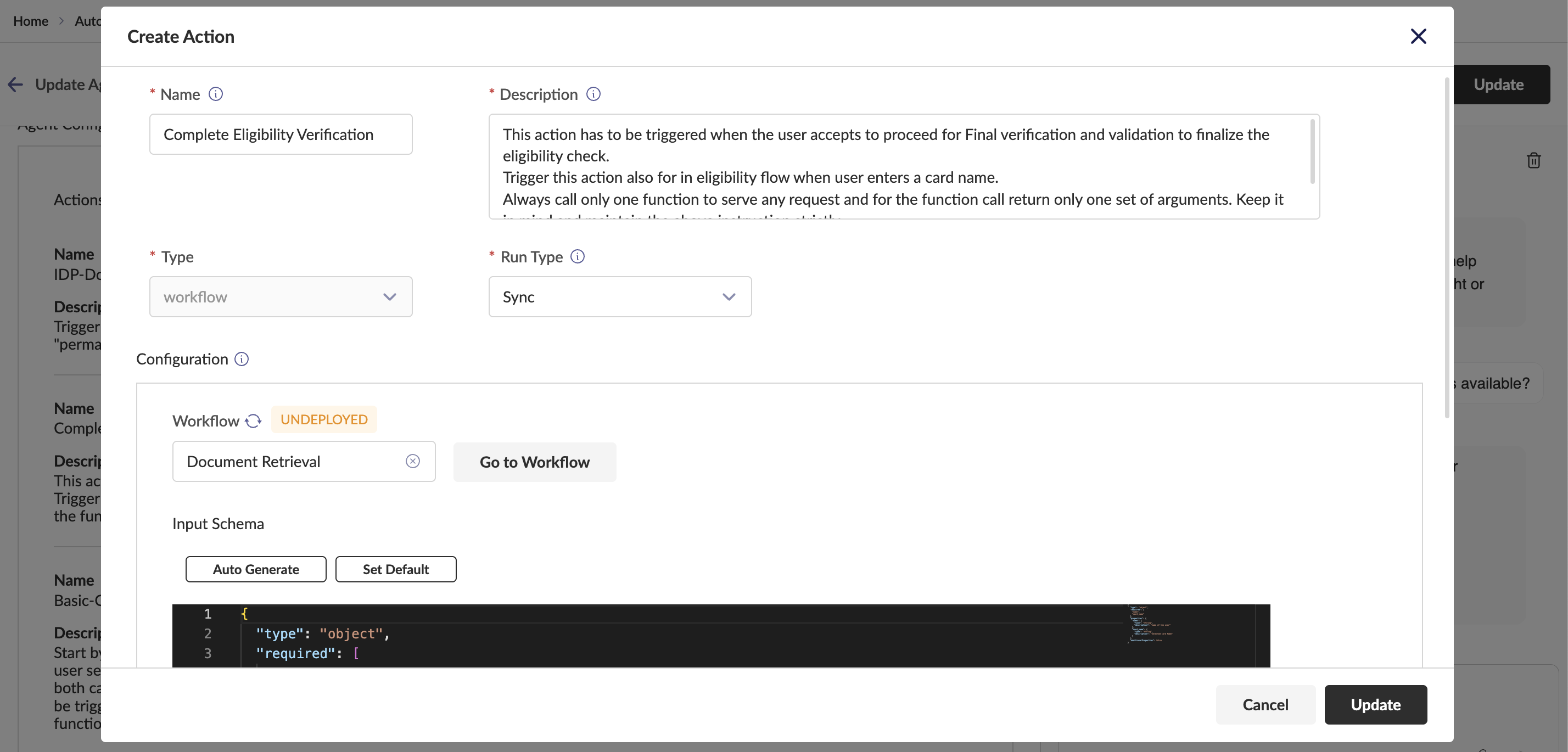

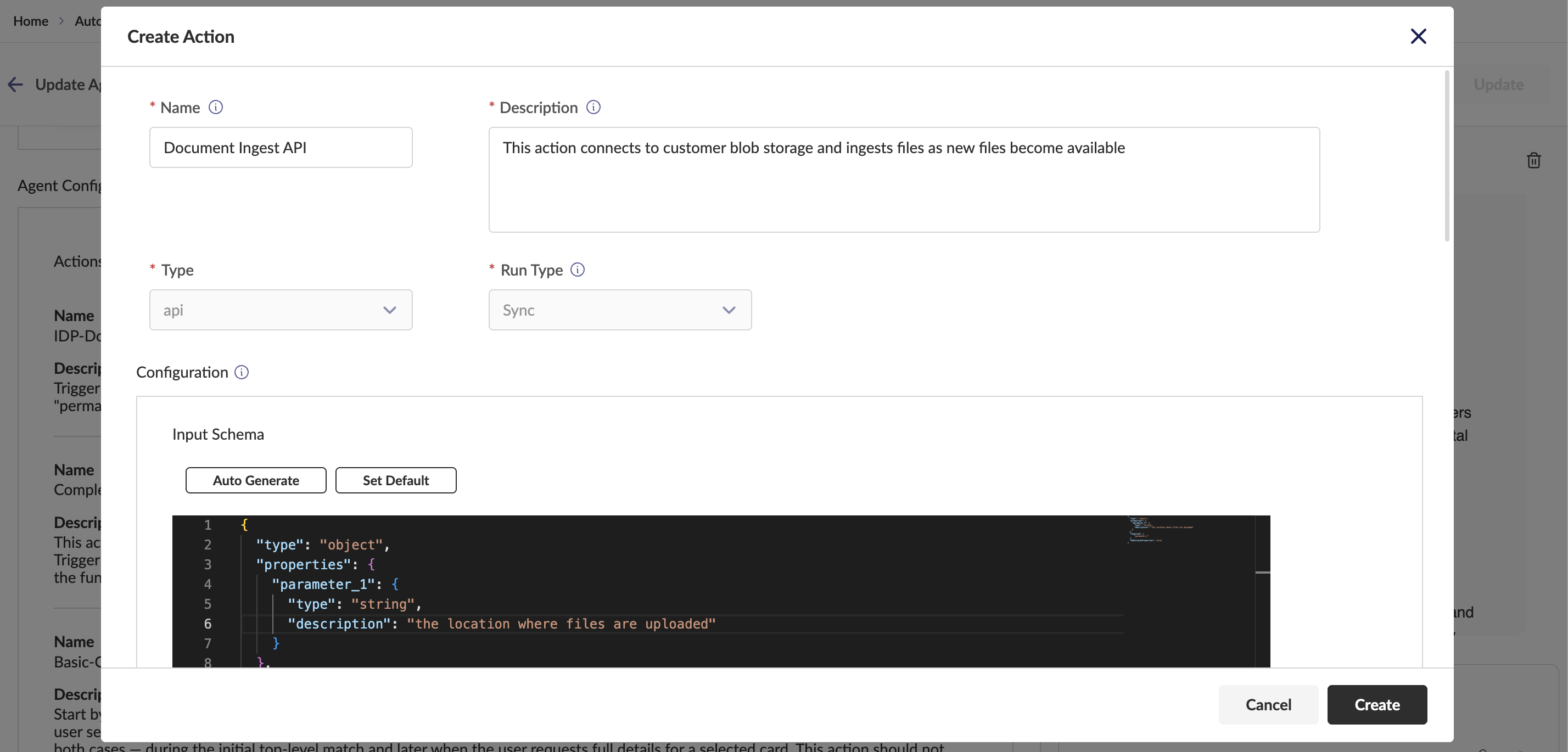

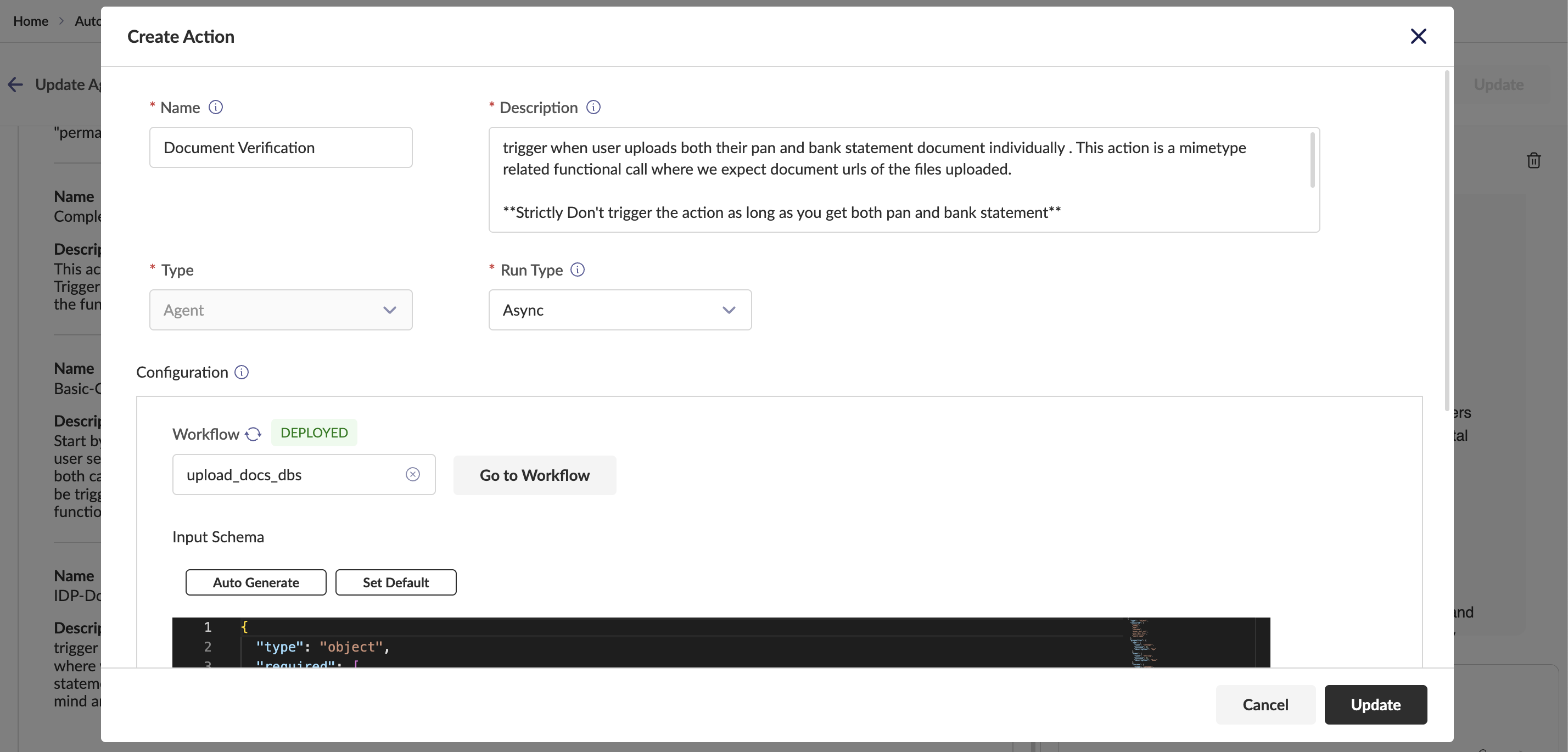

Actions Actions allow agents to interact with external entities. There are three types of actions:

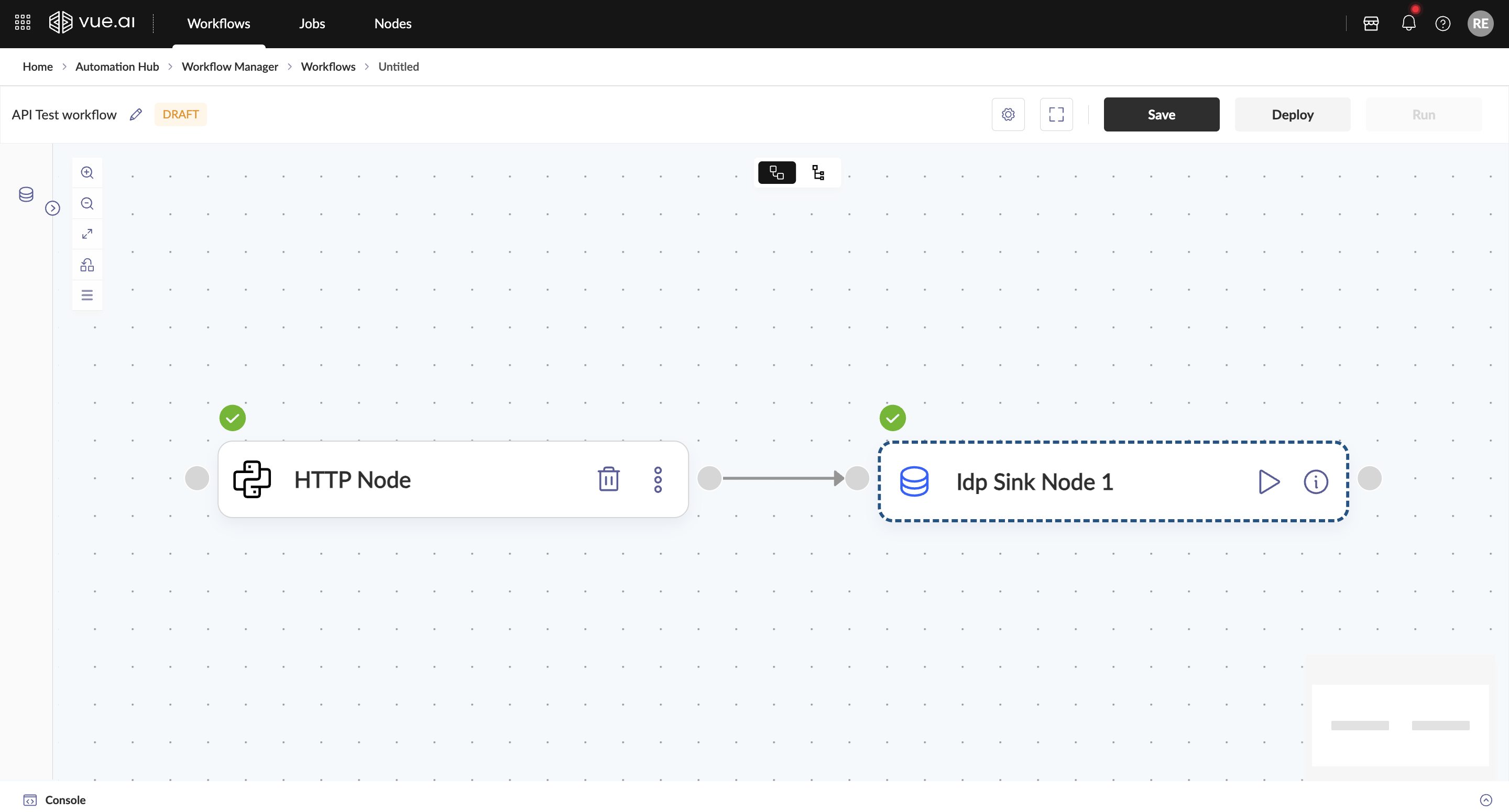

Workflow Actions: Attach predefined workflows to automate multi-step processes

- Configure Name, Description, Run Type (Async/Sync), Workflow, Input Schema
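As a rough mental model, the fields of a Workflow Action can be pictured as a small record. The sketch below is hypothetical: the class and field names are illustrative, and it assumes the Input Schema resembles a JSON-Schema-style dictionary with a `required` list, which the guide does not confirm.

```python
from dataclasses import dataclass, field
from enum import Enum


class RunType(Enum):
    SYNC = "Sync"
    ASYNC = "Async"


@dataclass
class WorkflowAction:
    """Hypothetical record of the fields configured for a Workflow Action."""

    name: str
    description: str
    run_type: RunType
    workflow: str
    input_schema: dict = field(default_factory=dict)  # assumed JSON-Schema-like shape

    def missing_inputs(self, payload: dict) -> list:
        """List the required input fields that are absent from the payload."""
        required = self.input_schema.get("required", [])
        return [key for key in required if key not in payload]


action = WorkflowAction(
    name="summarize_invoice",                       # illustrative values only
    description="Runs the invoice summary workflow",
    run_type=RunType.SYNC,
    workflow="workflow_1",
    input_schema={
        "required": ["invoice_id"],
        "properties": {"invoice_id": {"type": "string"}},
    },
)
print(action.missing_inputs({}))
```

A clear Description and a tight Input Schema matter because they are what the agent has to go on when deciding whether and how to call the workflow.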

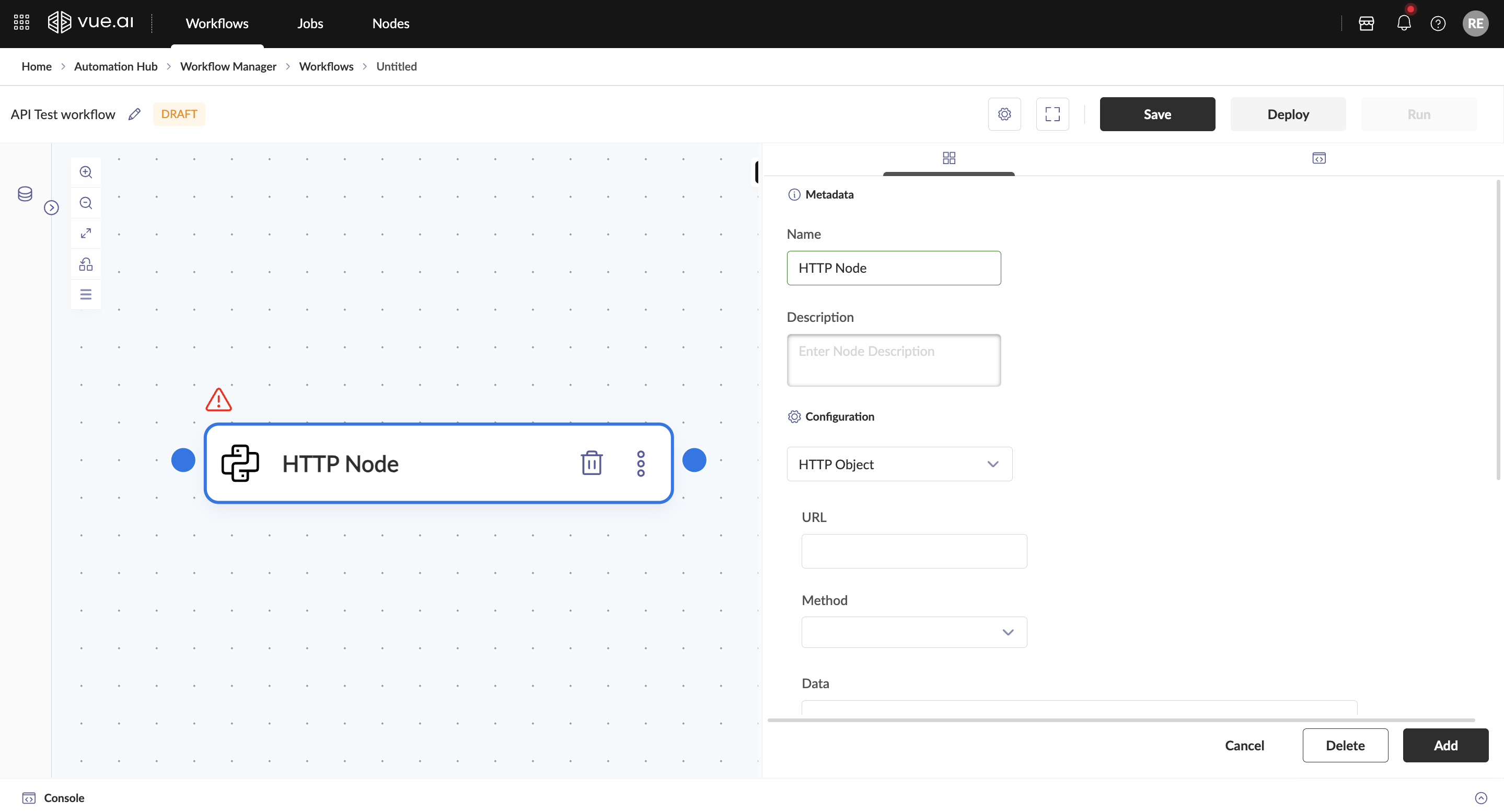

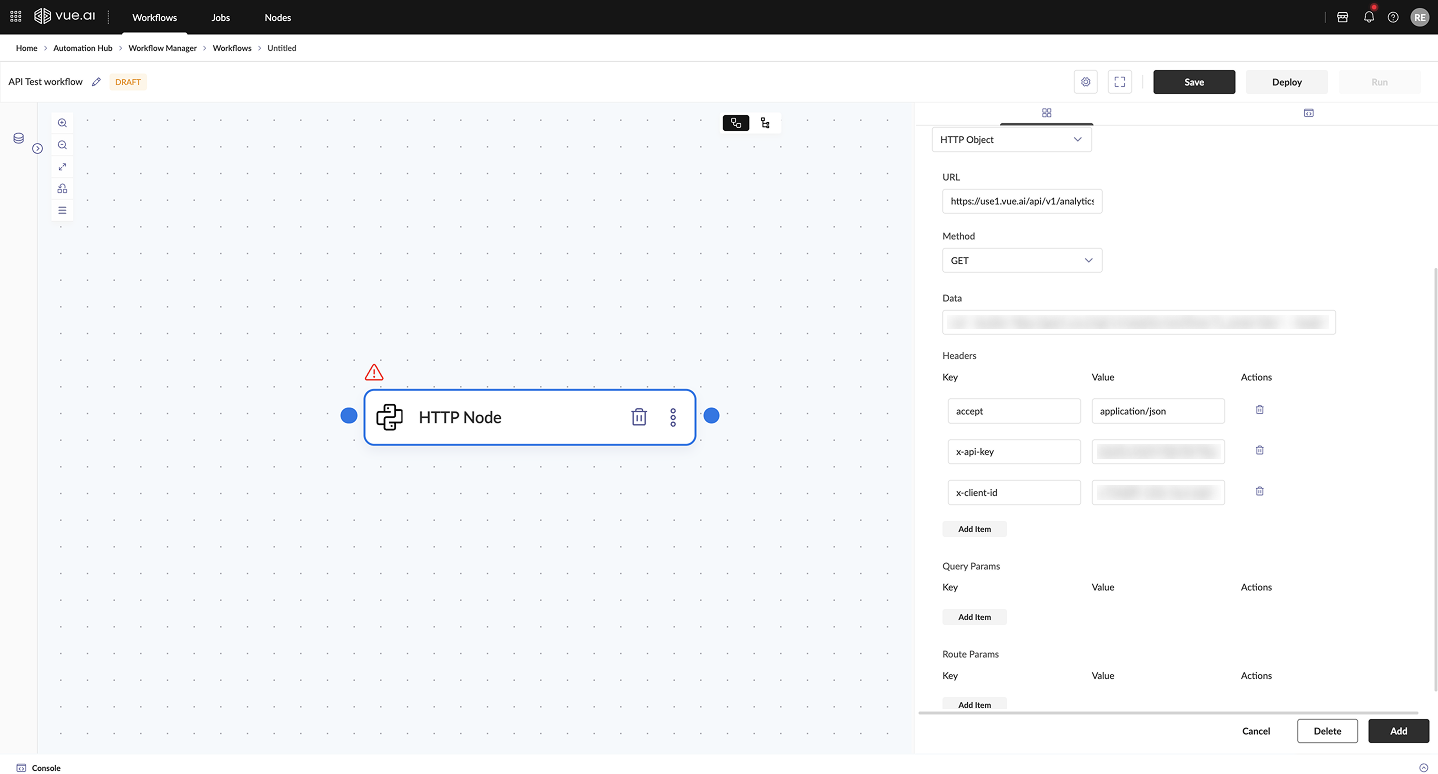

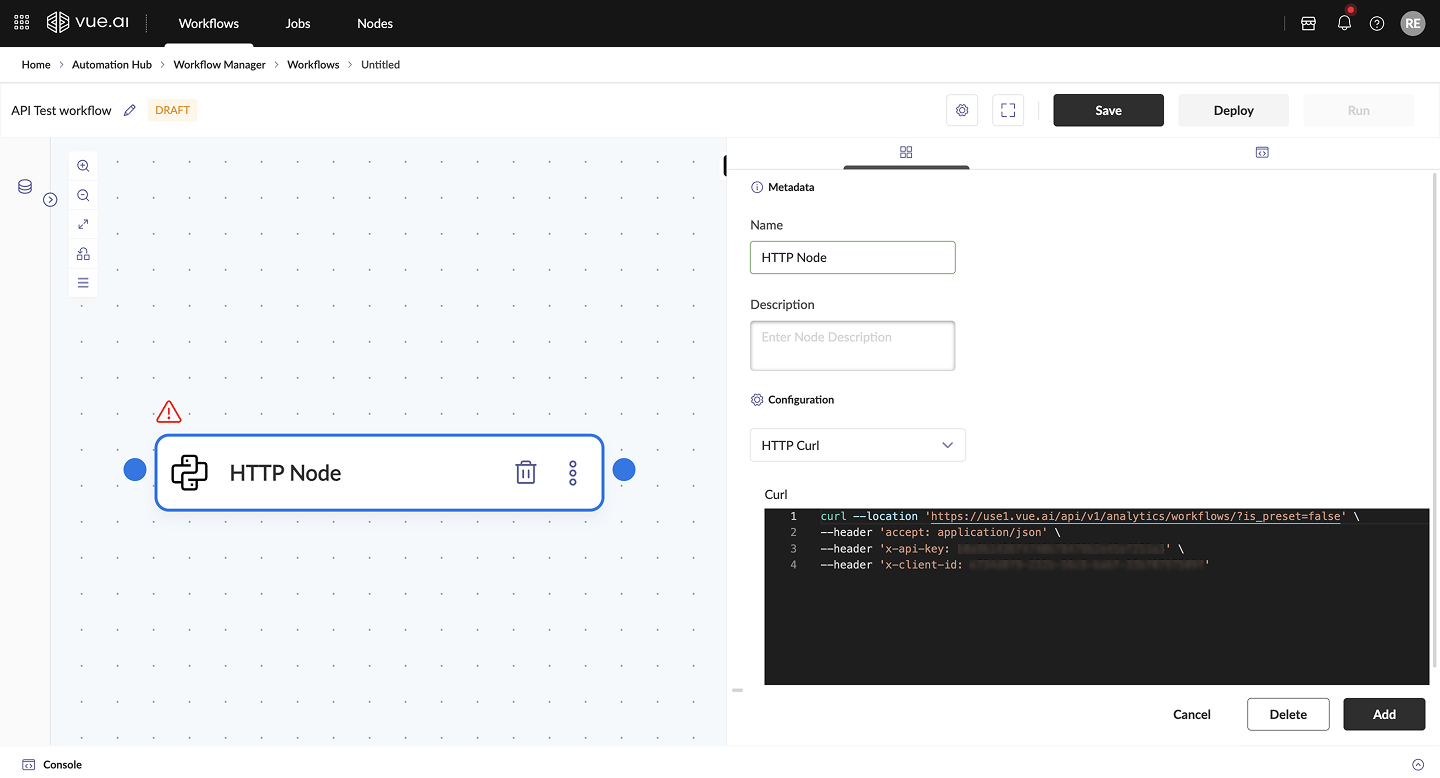

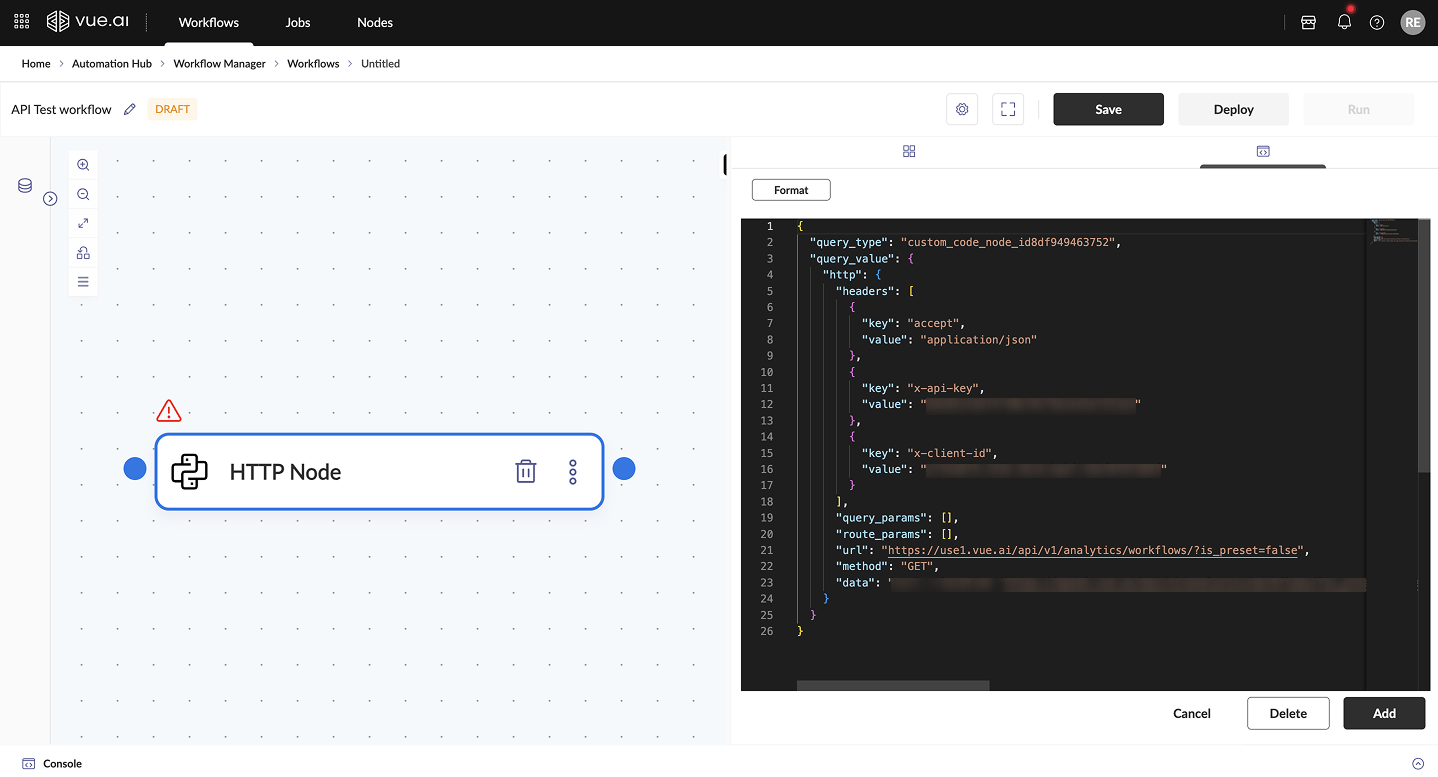

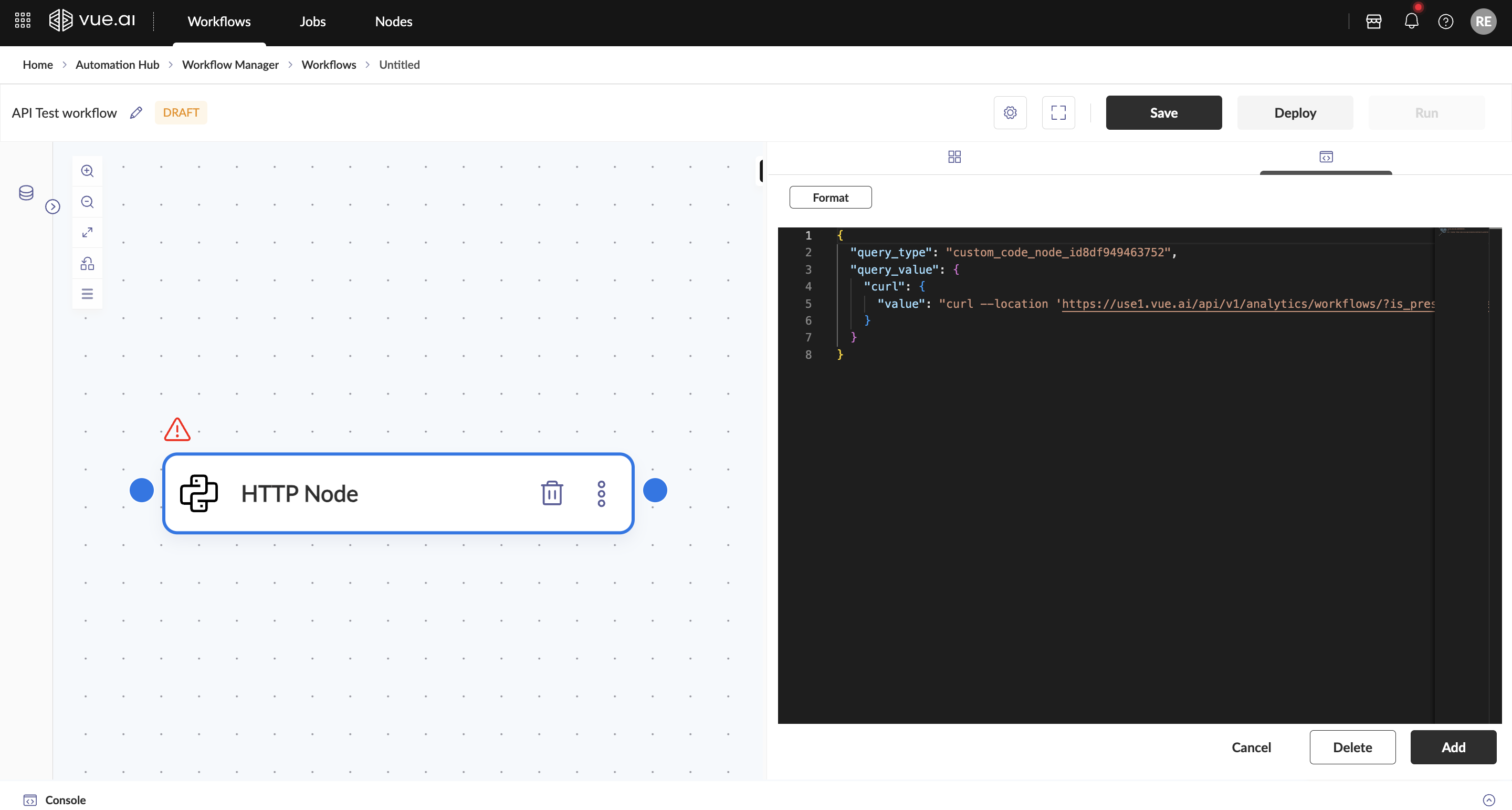

API Actions: Integrate external APIs for system interactions

- Support for HTTP Object and HTTP Curl formats

Agent Actions: Link other agents for collaborative systems

- Configure Name, Description, Run Type, and target Agent

If no actions are configured, the agent will perform chat completion using its base LLM knowledge.
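To show how the two API Action formats relate, the sketch below converts a request described as a structured object into the equivalent curl command. The dictionary keys (`method`, `url`, `headers`, `body`) and the example URL are assumptions for illustration; the exact HTTP Object fields expected by the platform are not specified in this guide.

```python
import json
import shlex


def http_object_to_curl(request: dict) -> str:
    """Render a request dictionary (assumed shape) as a shell-safe curl command."""
    parts = ["curl", "-X", request.get("method", "GET").upper()]
    for key, value in request.get("headers", {}).items():
        parts += ["-H", f"{key}: {value}"]
    if "body" in request:
        # Serialize the body as JSON and pass it as the request data.
        parts += ["-d", json.dumps(request["body"])]
    parts.append(request["url"])
    # shlex.quote protects spaces and quotes so the command can be pasted into a shell.
    return " ".join(shlex.quote(part) for part in parts)


request = {
    "method": "post",
    "url": "https://api.example.com/v1/tickets",   # placeholder endpoint
    "headers": {"Content-Type": "application/json"},
    "body": {"title": "Printer offline"},
}
print(http_object_to_curl(request))
```

Both formats carry the same information: the object form is easier to edit field by field, while the curl form is convenient when a working command is already available.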

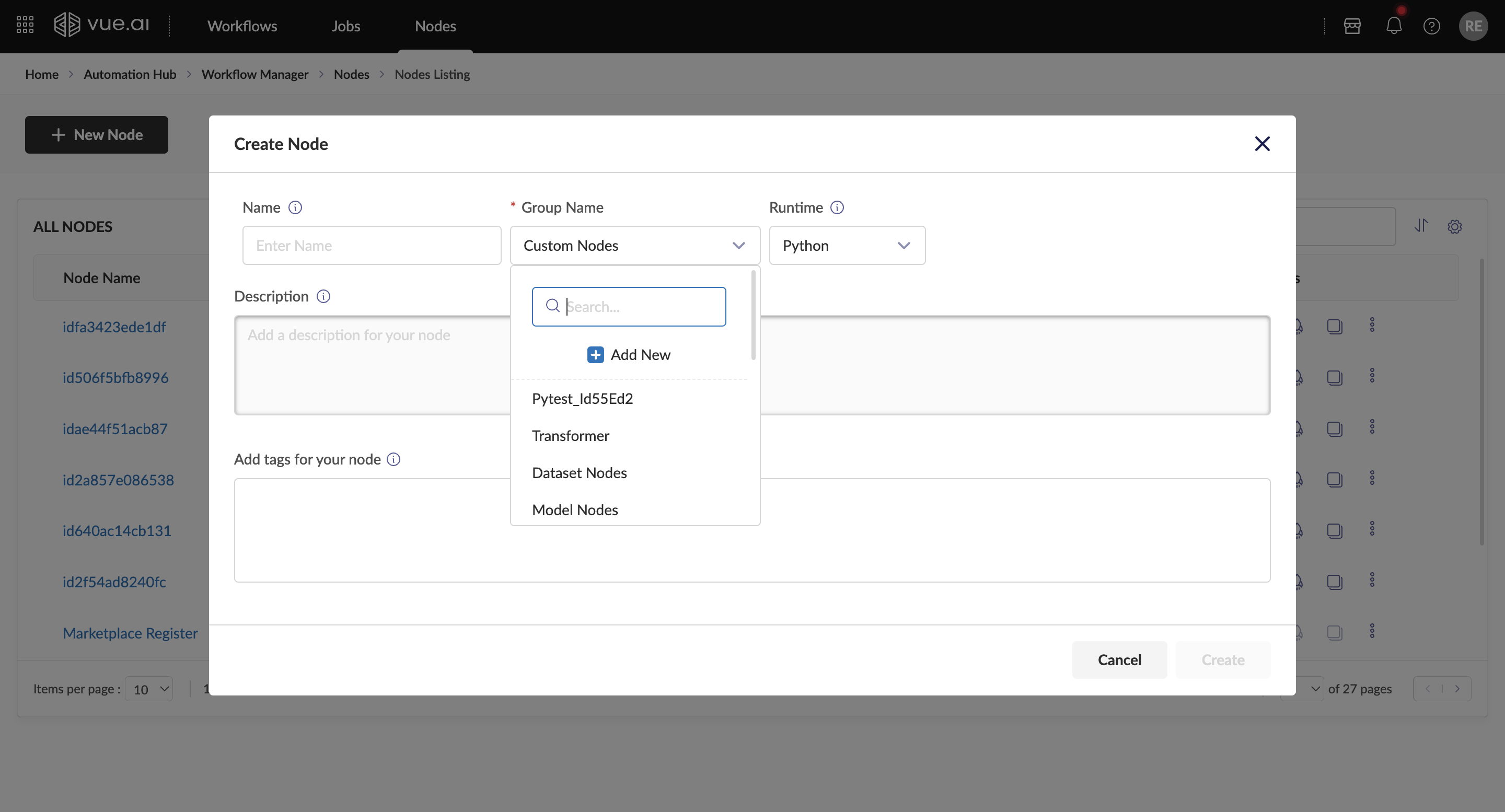

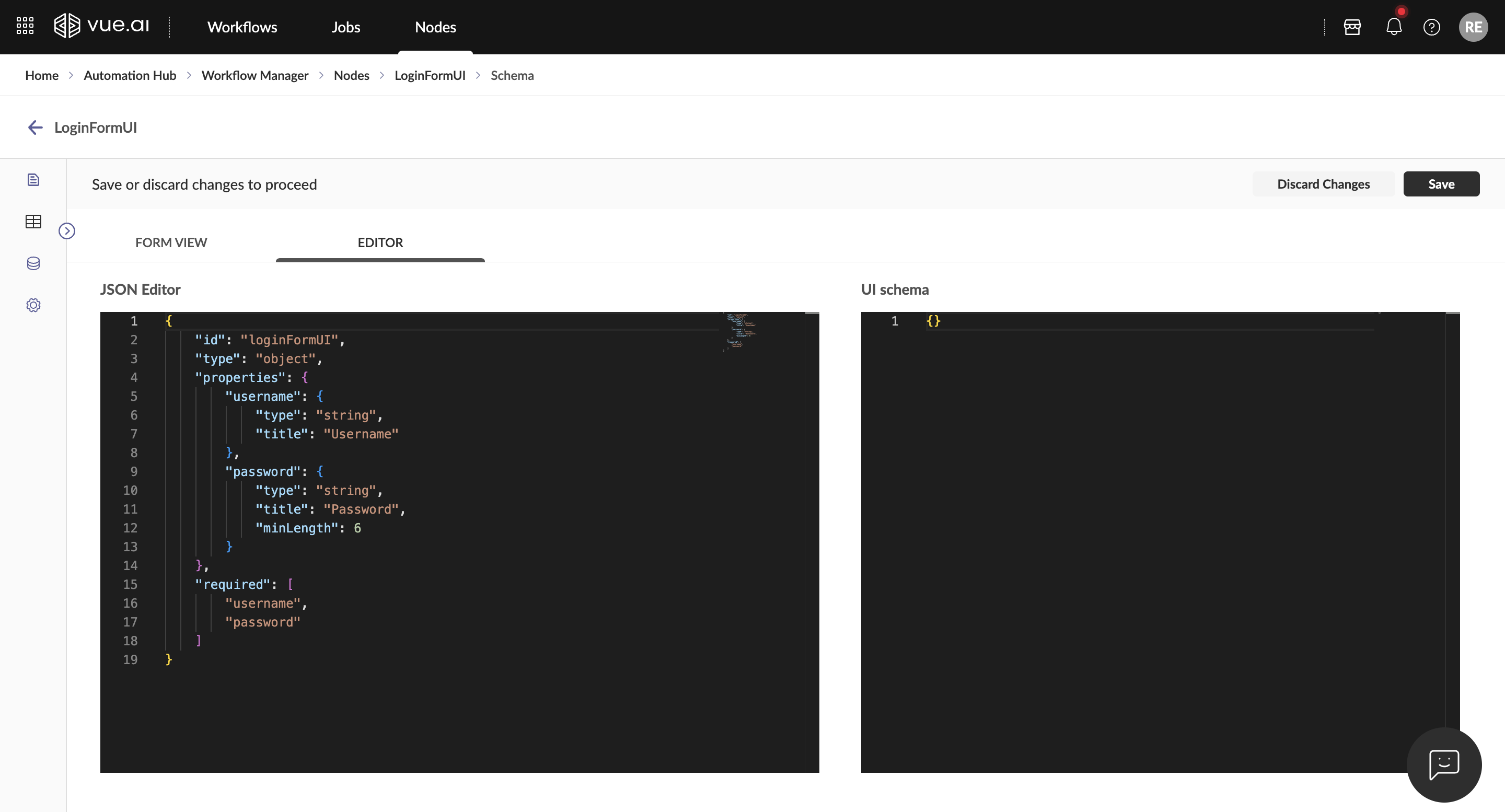

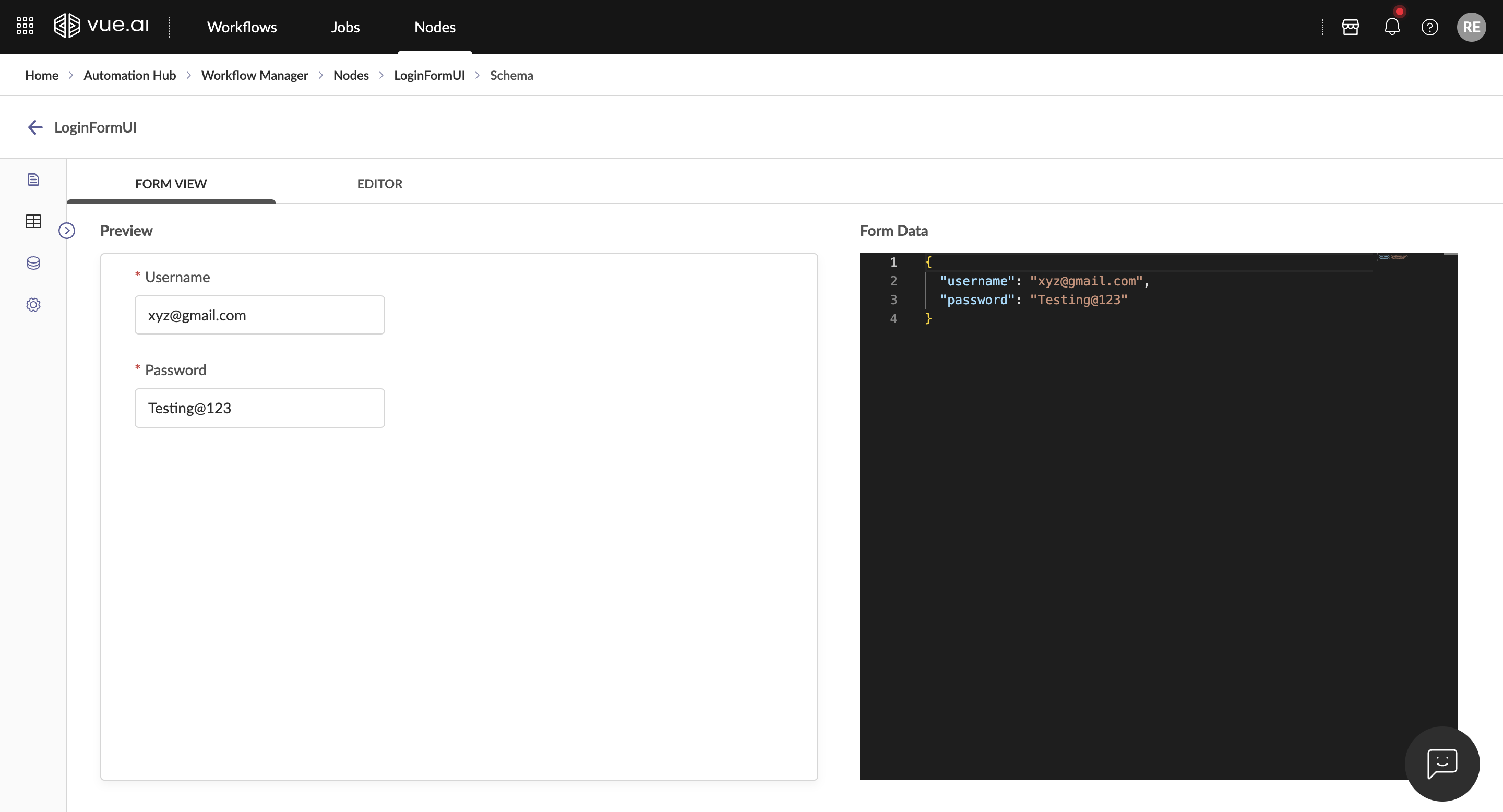

Workflow Manager

The Workflow Manager provides comprehensive tools for creating, deploying, and managing automated workflows with an intuitive canvas-based interface.

Orchestration

Welcome to Workflow Orchestration: A Guide to Utilizing the Workflow Canvas! This guide explains the key functionalities of the Workflow Canvas and how to use them to create efficient workflows.

Who is this guide for? This guide is designed for users of the Workflow Canvas.

Ensure that the Workflow Concepts documentation is accessible before starting.

Overview

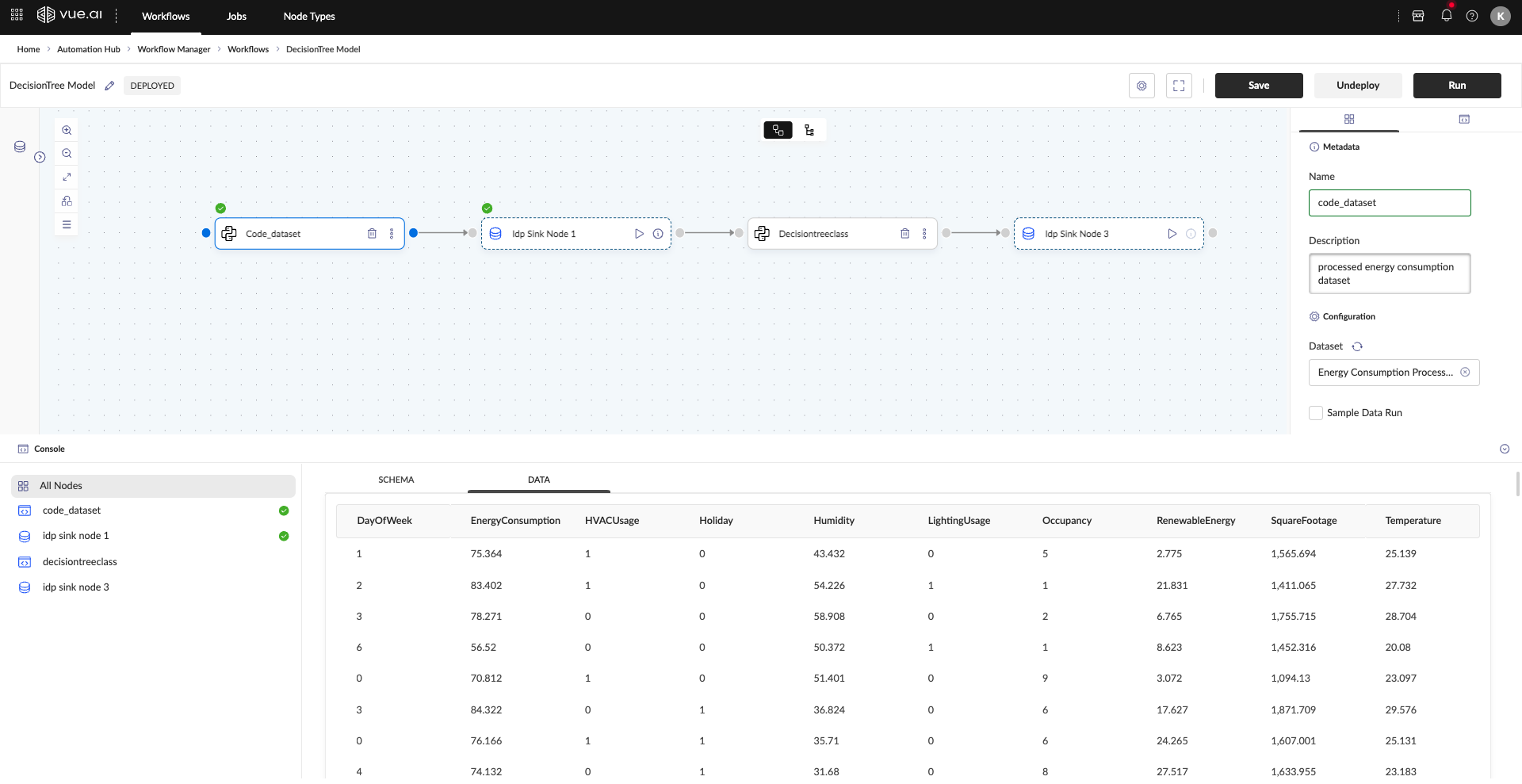

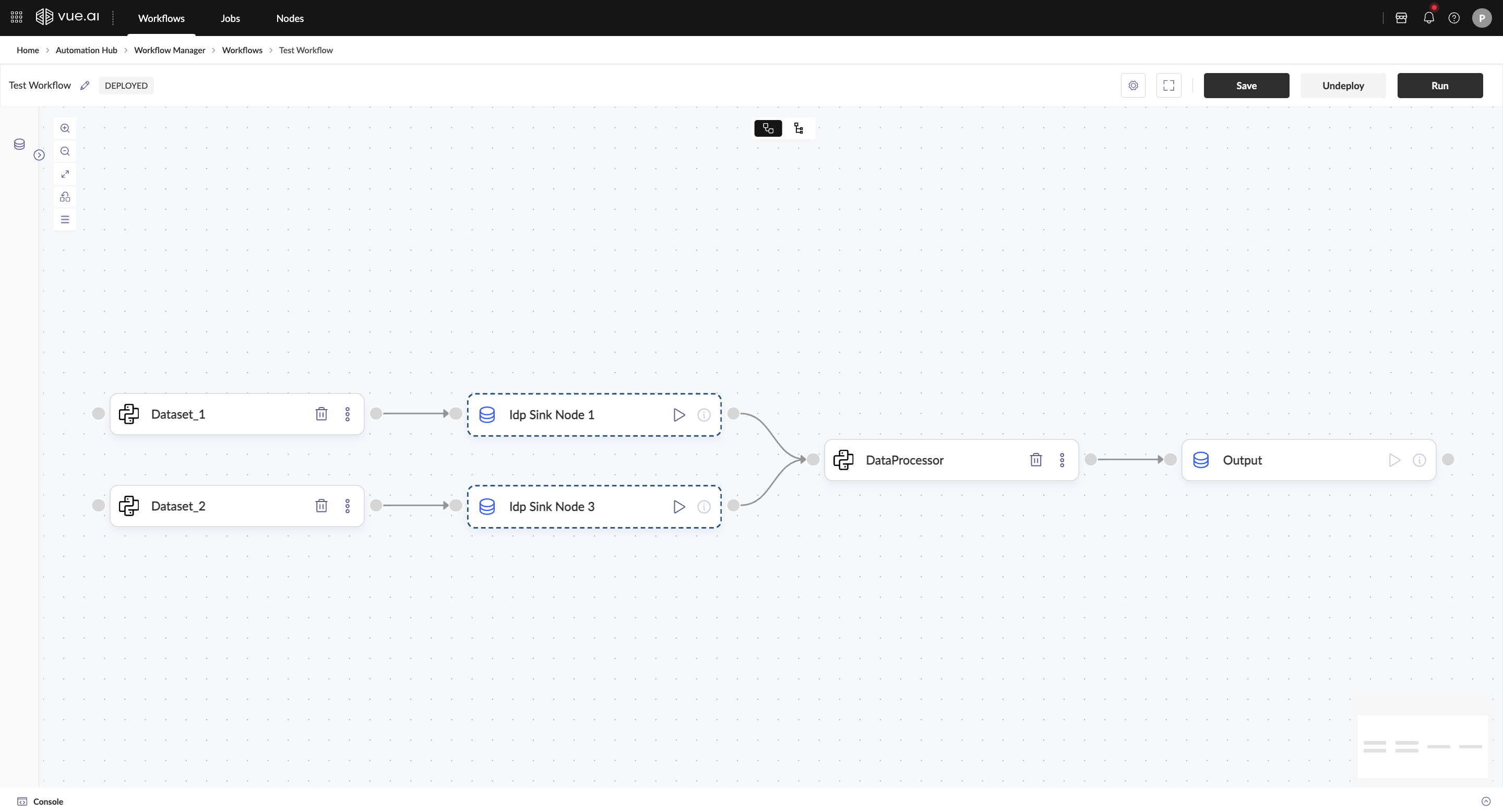

The Workflow Canvas provides a straightforward means for users to connect nodes, enabling seamless automation of tasks and data processing. It allows users to create workflows, configure settings, deploy them, and monitor executions in real-time.

Prerequisites Before beginning, ensure the following has been reviewed:

- Workflow Concepts documentation

Step-by-Step Instructions

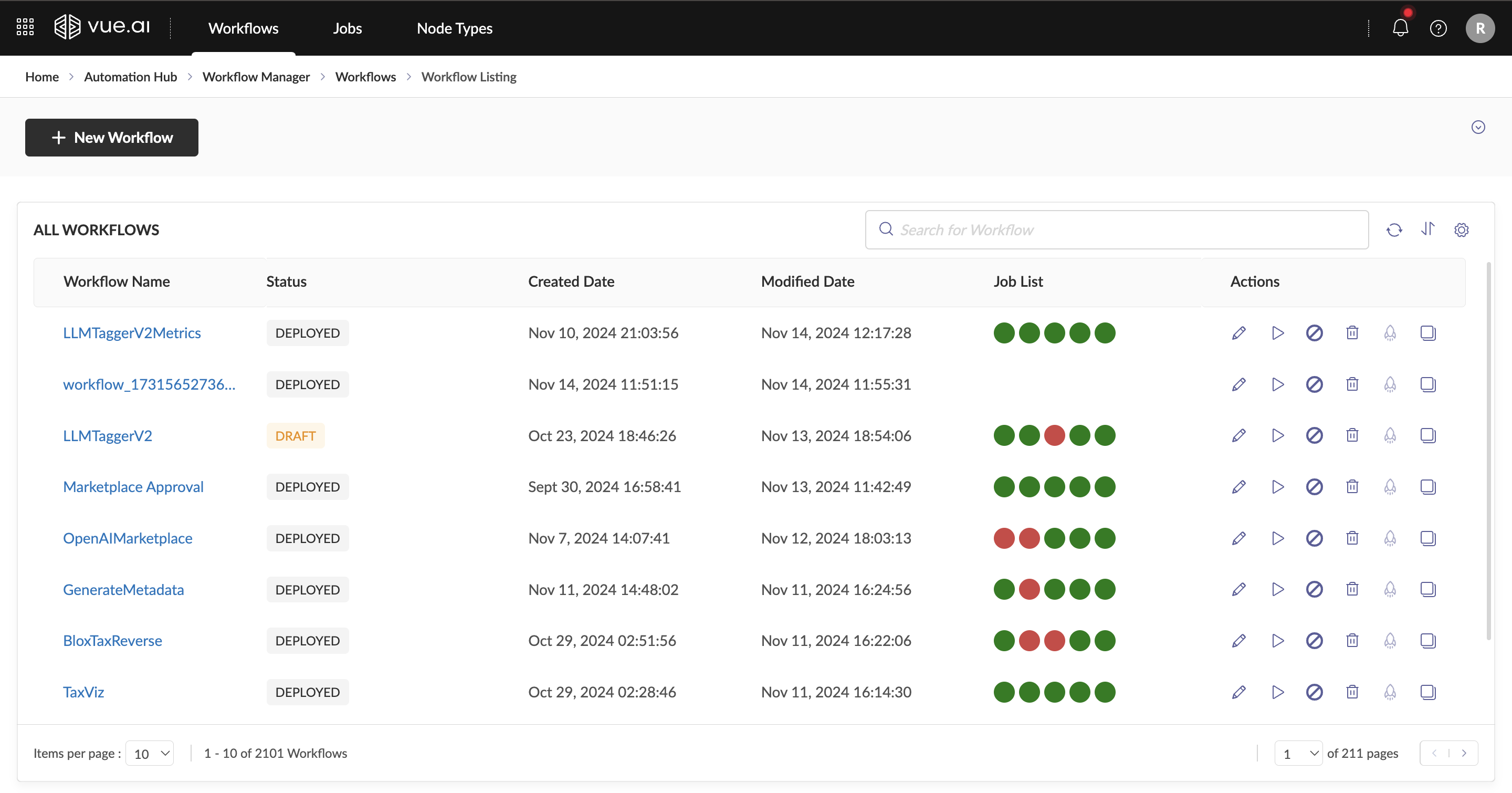

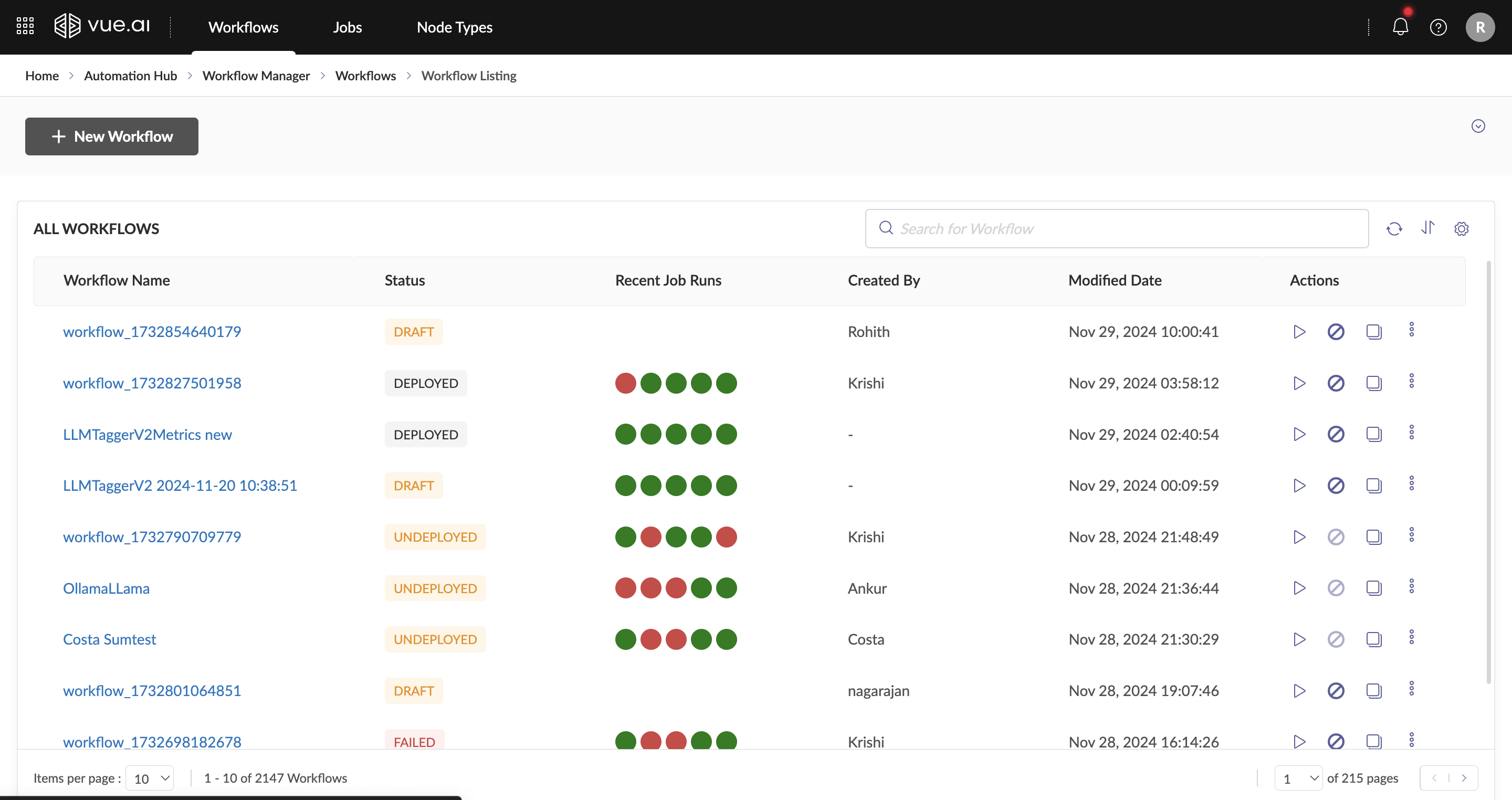

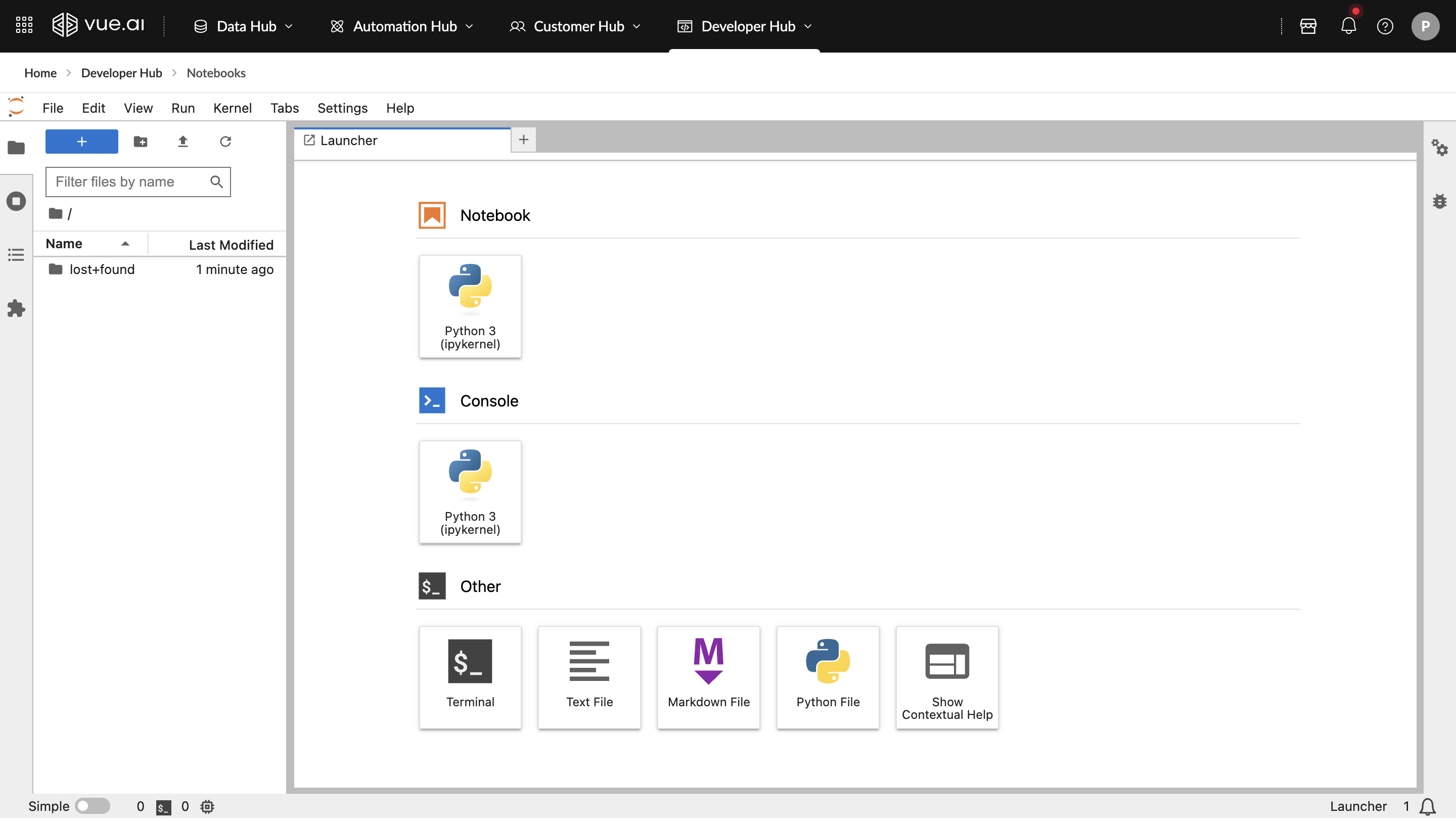

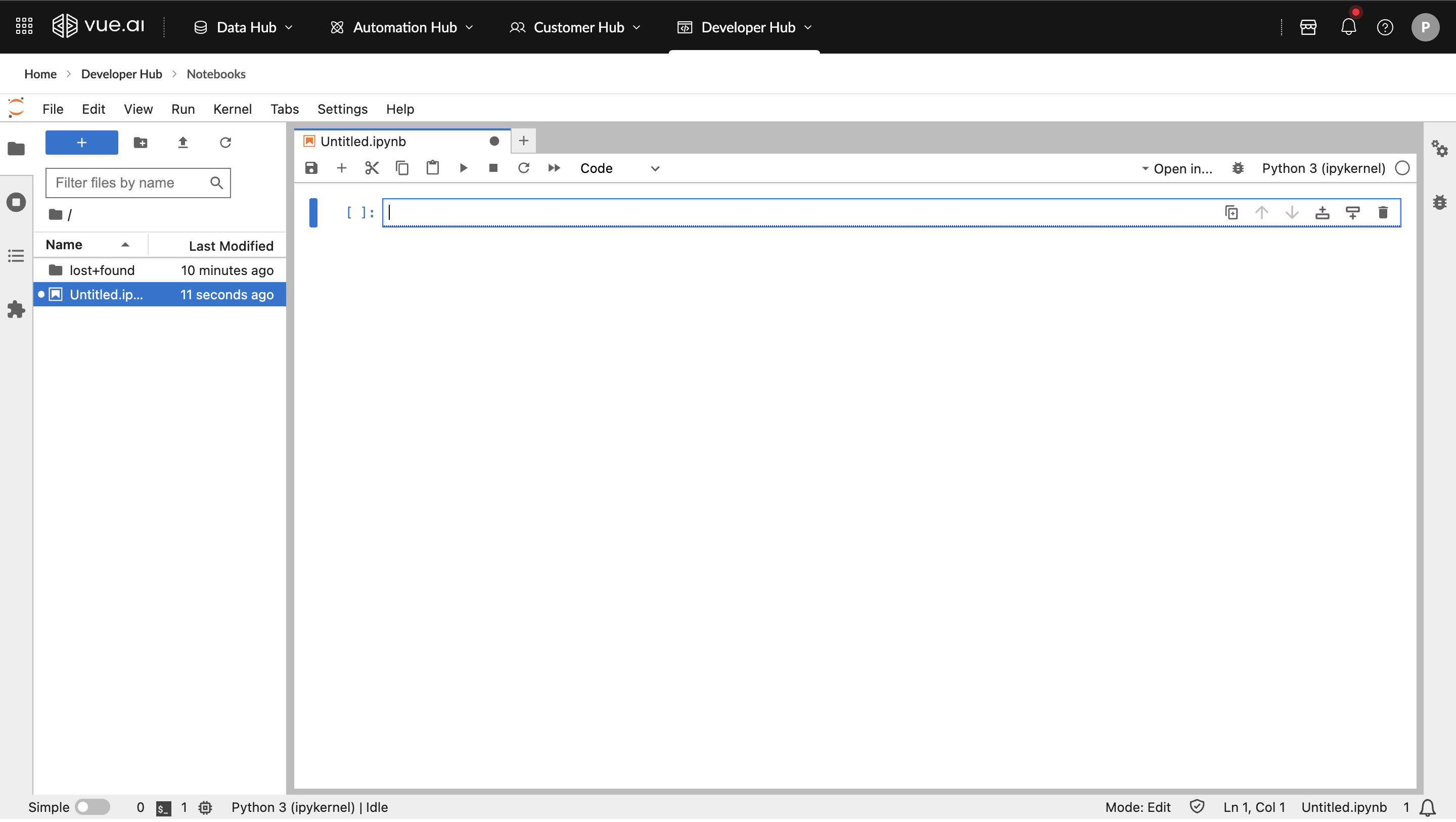

- Navigation Path: Home/Landing Page → Automation Hub → Workflow Manager → Workflows.

This path leads to the Workflows Listing Page, where existing workflows can be accessed and new workflows can be created.

- Creating a New Workflow

To start a new workflow:

- Click the New Workflow button at the top-left of the Workflows Listing screen. This will open the Workflow Canvas interface.

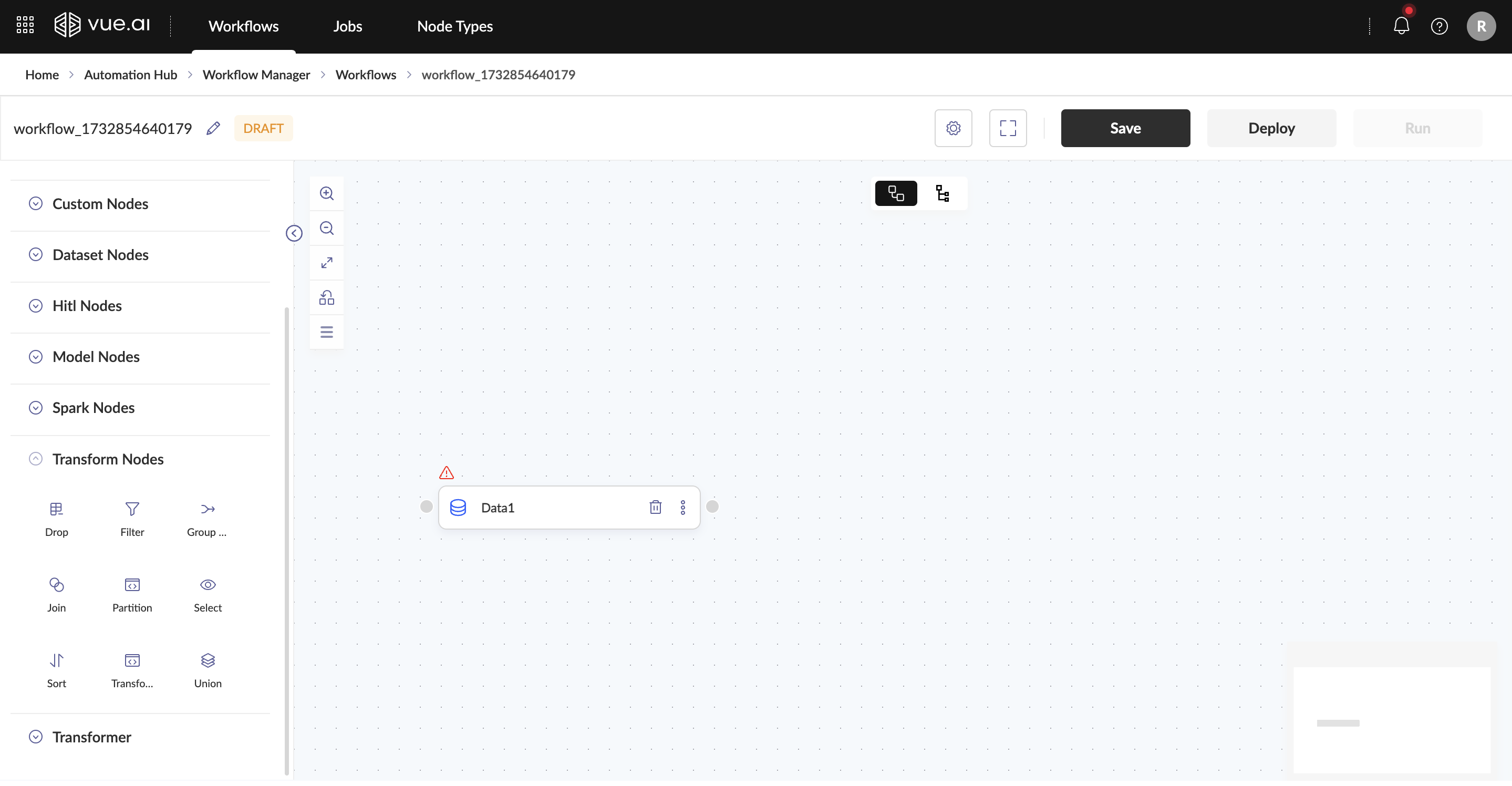

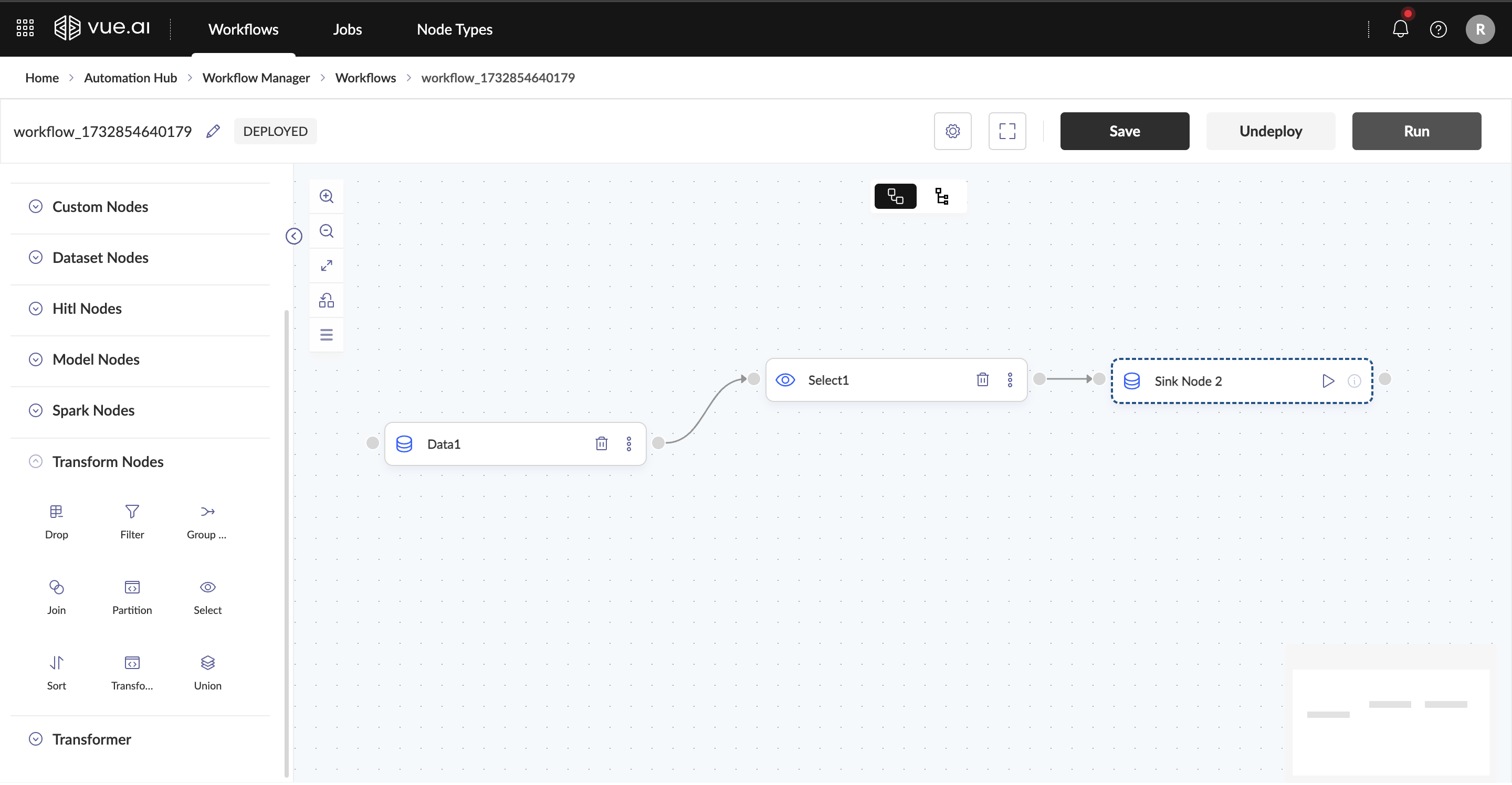

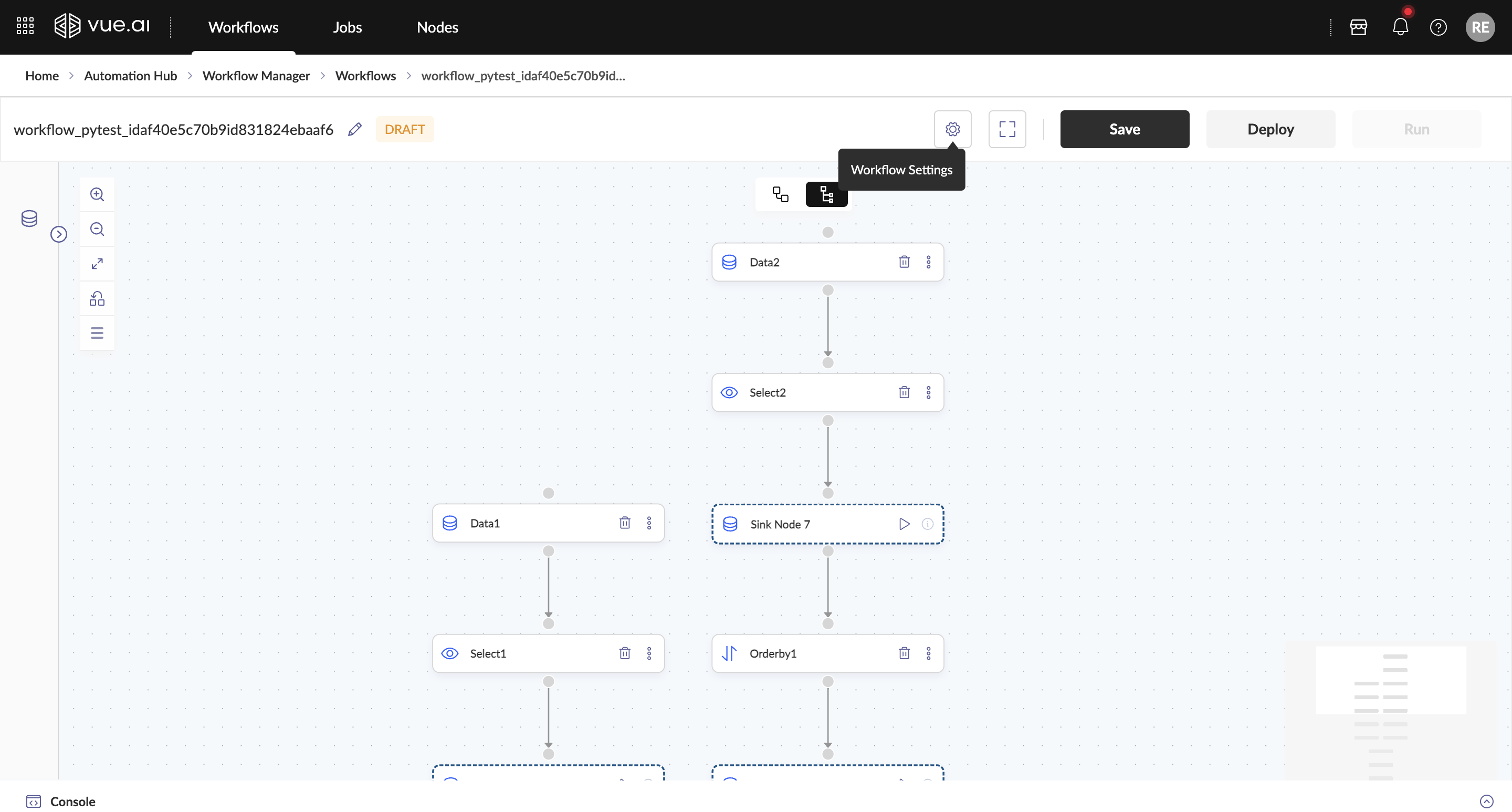

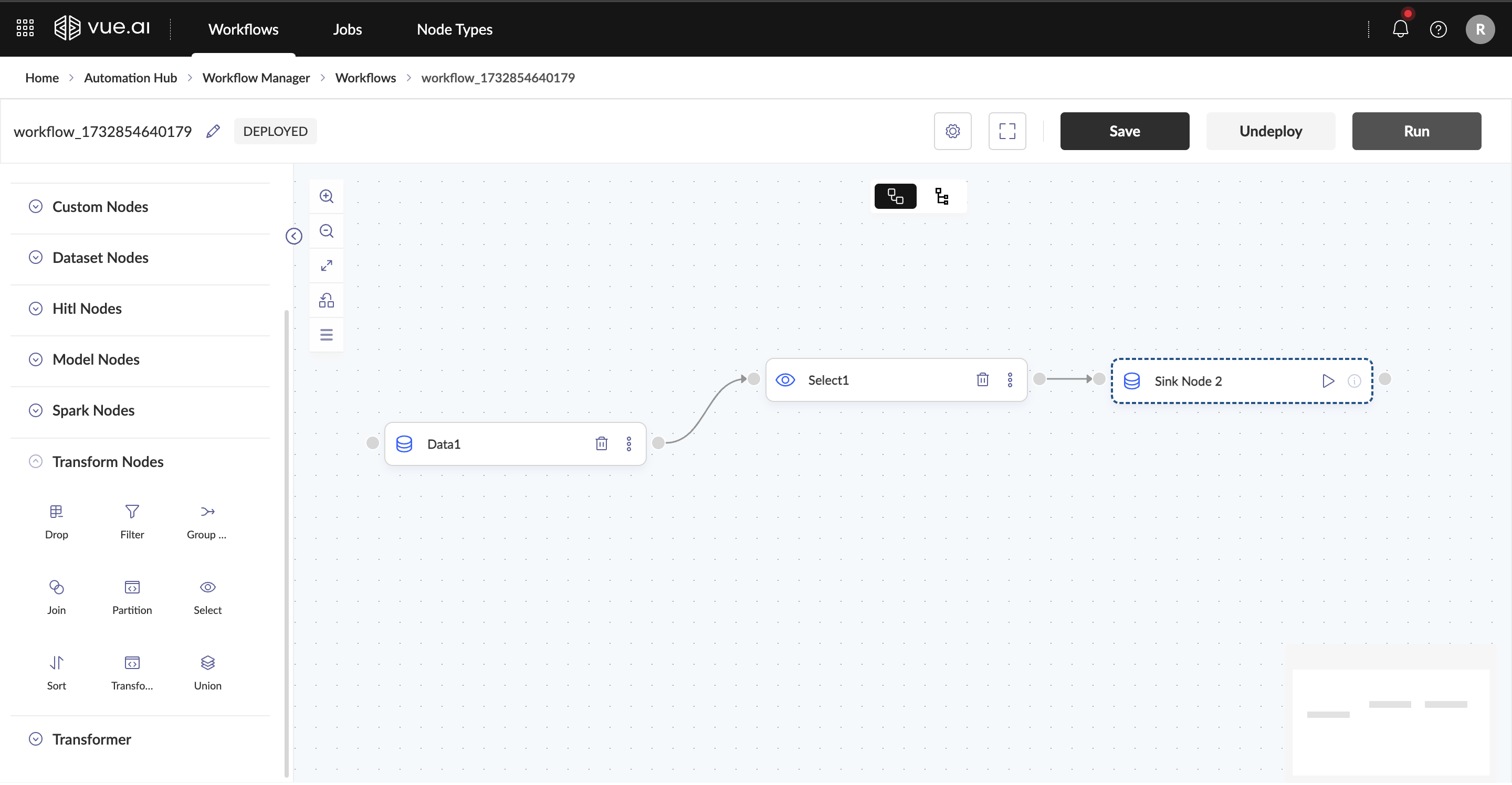

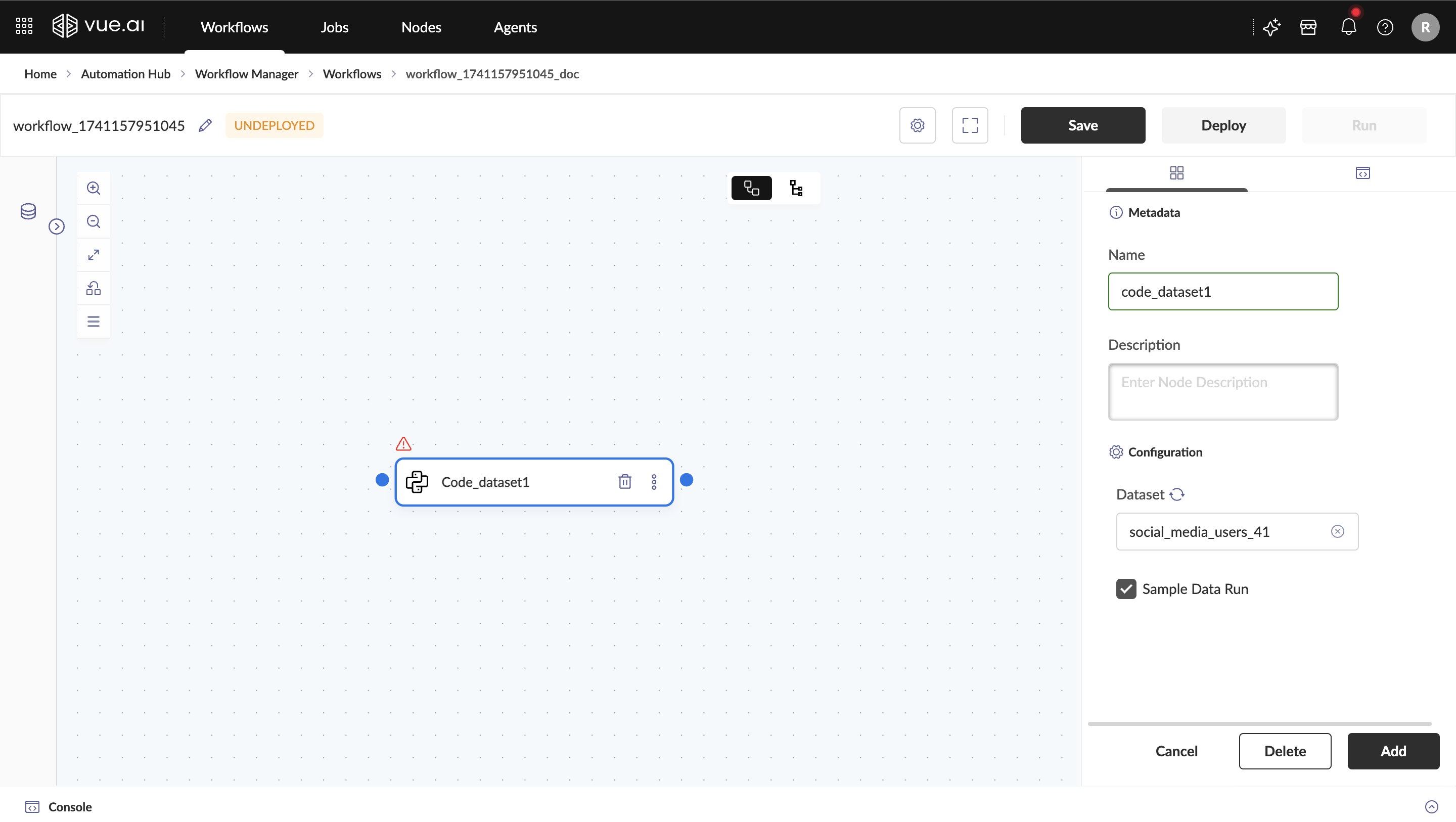

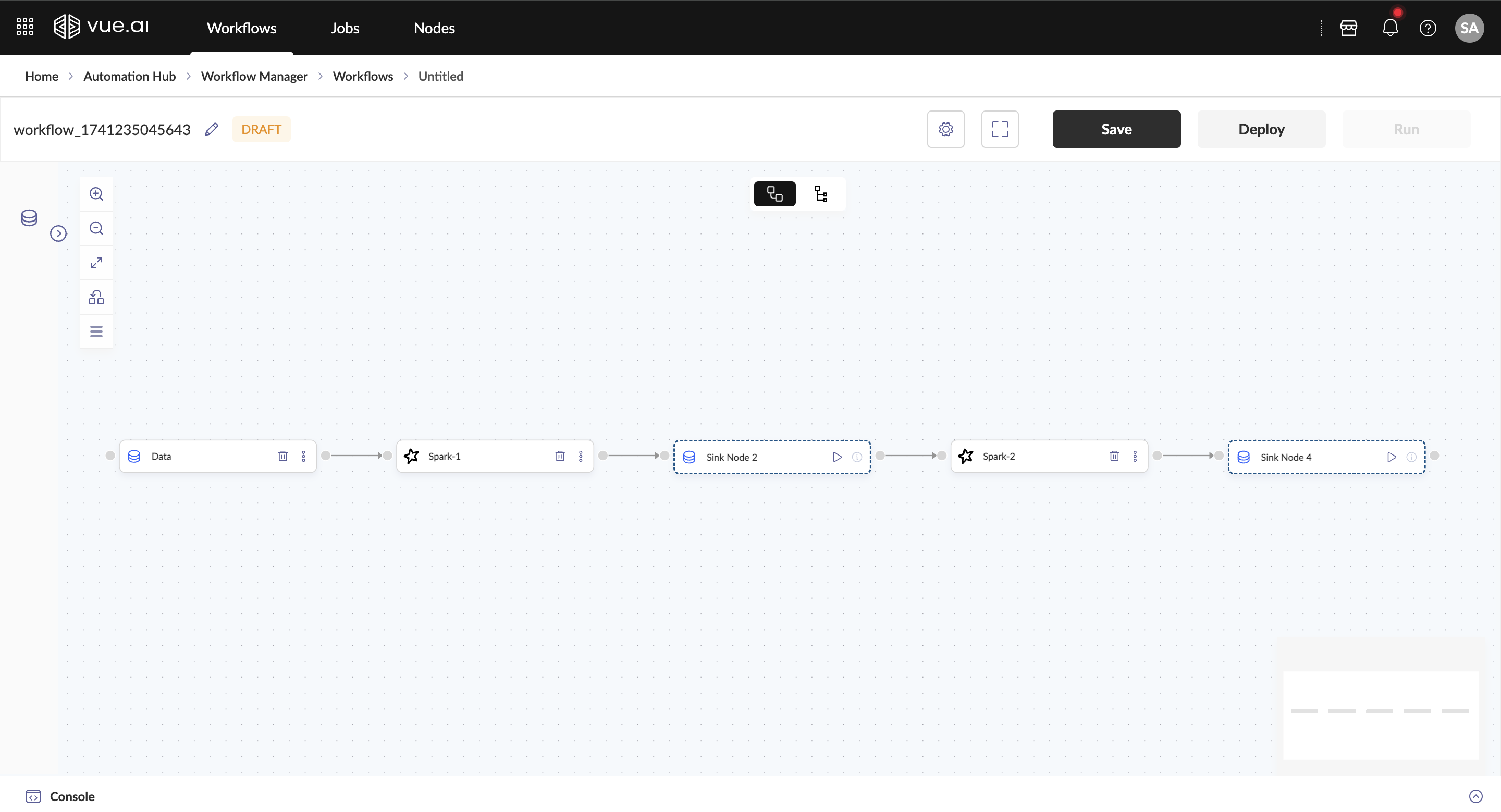

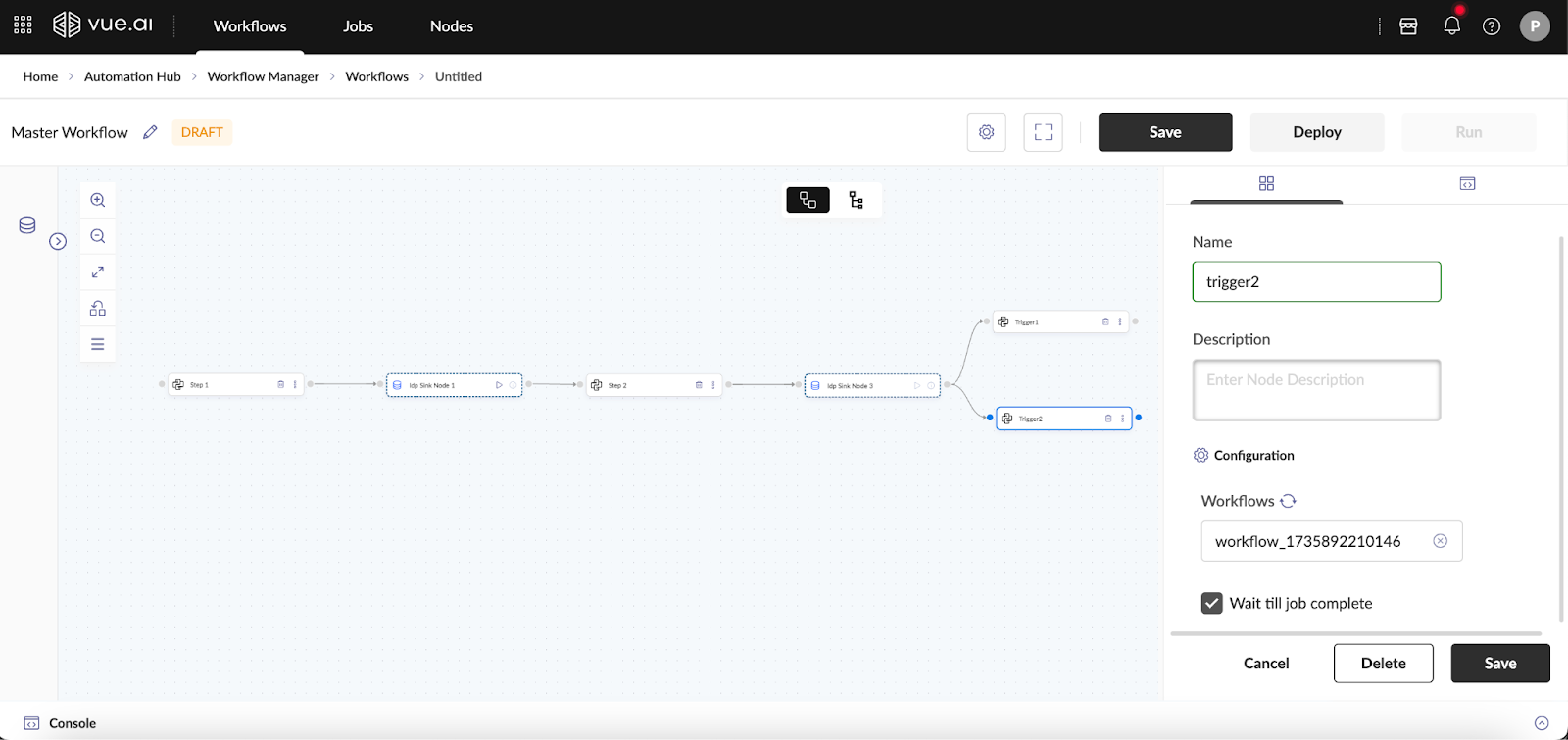

- The Workflow Canvas Top Bar

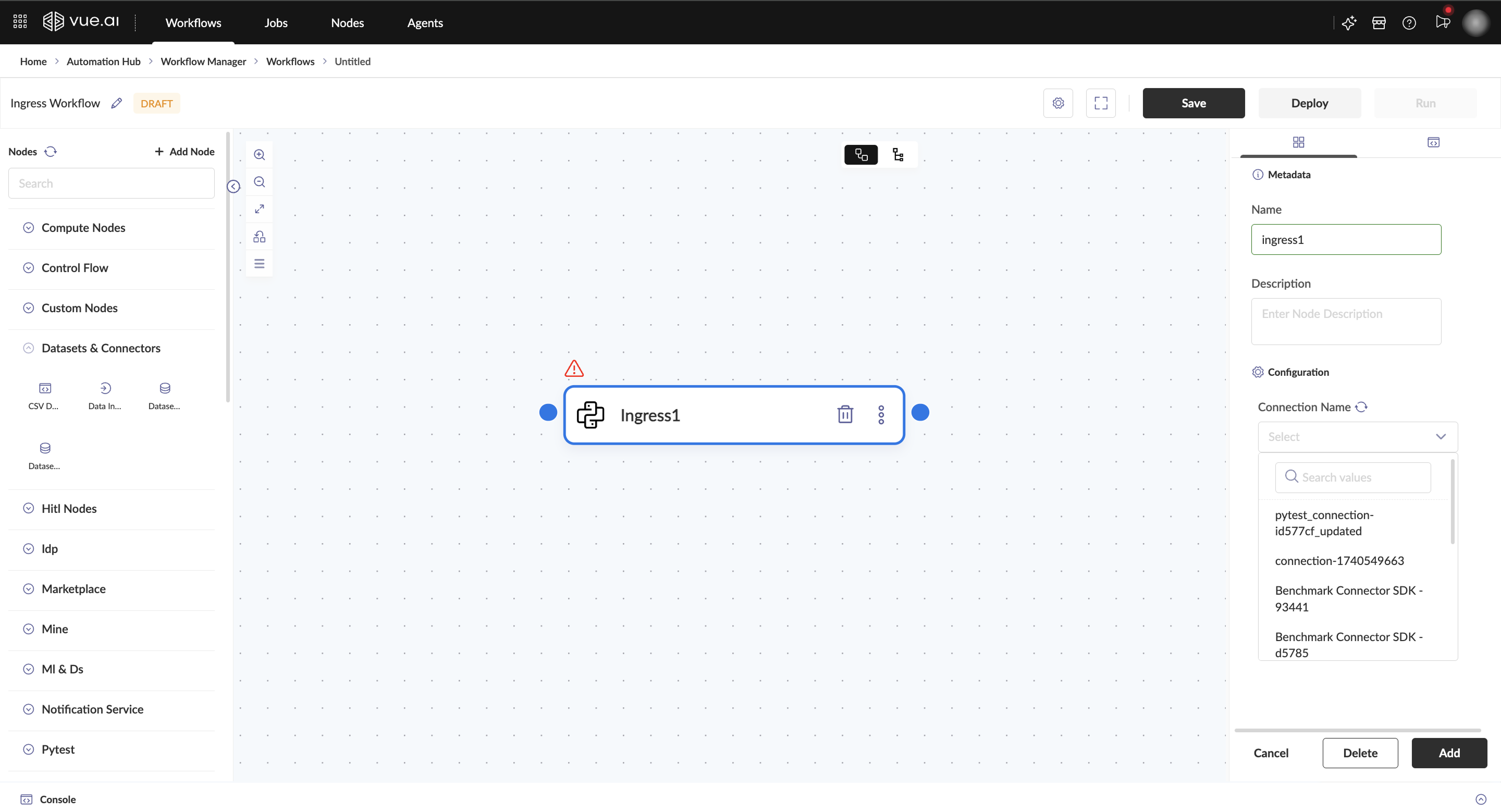

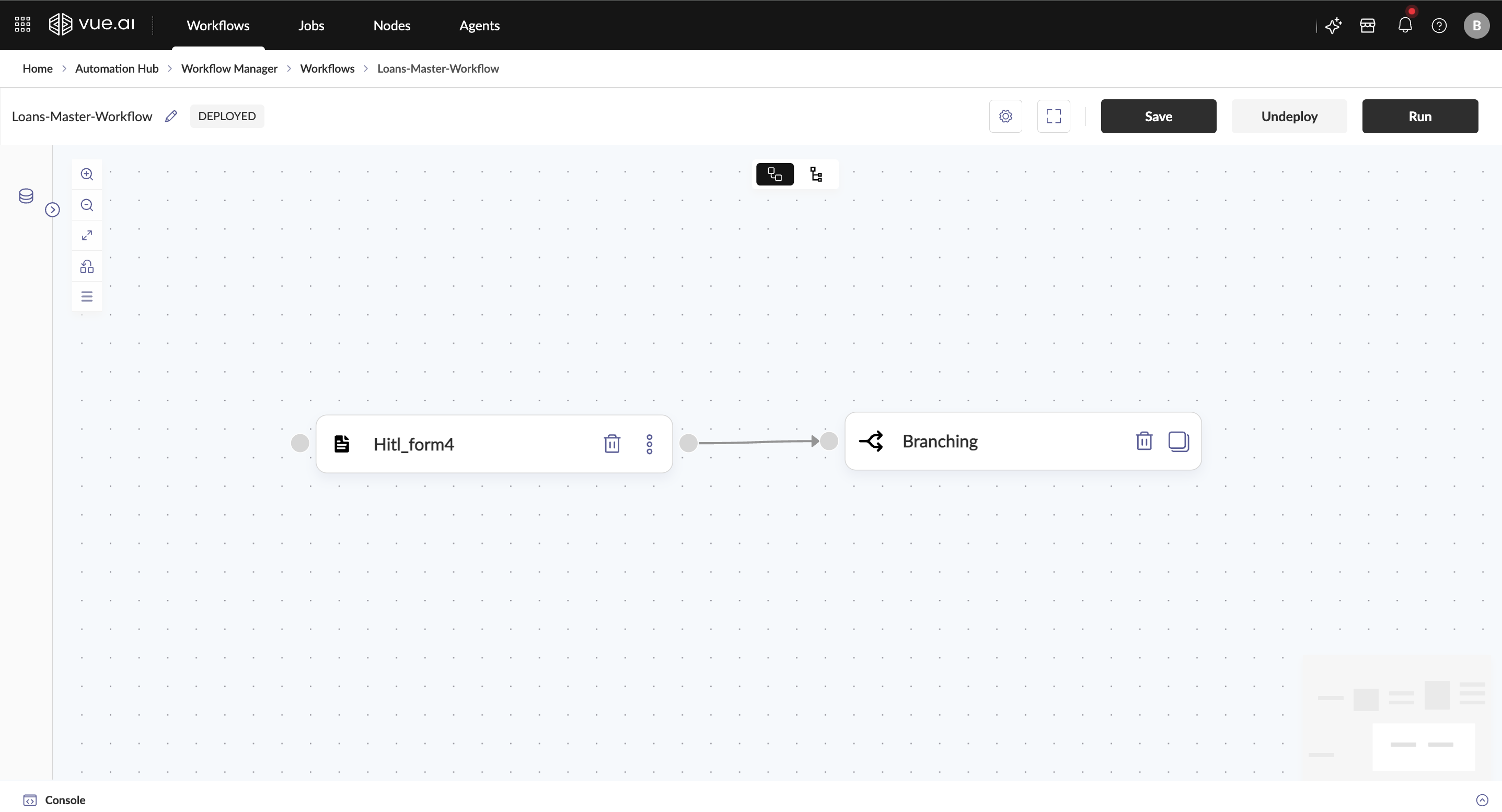

The top bar of the Workflow Canvas provides essential workflow information and controls, including:

- Workflow Name: Newly created workflows are named "workflow_#" by default. Use the edit button to give it a more meaningful name.

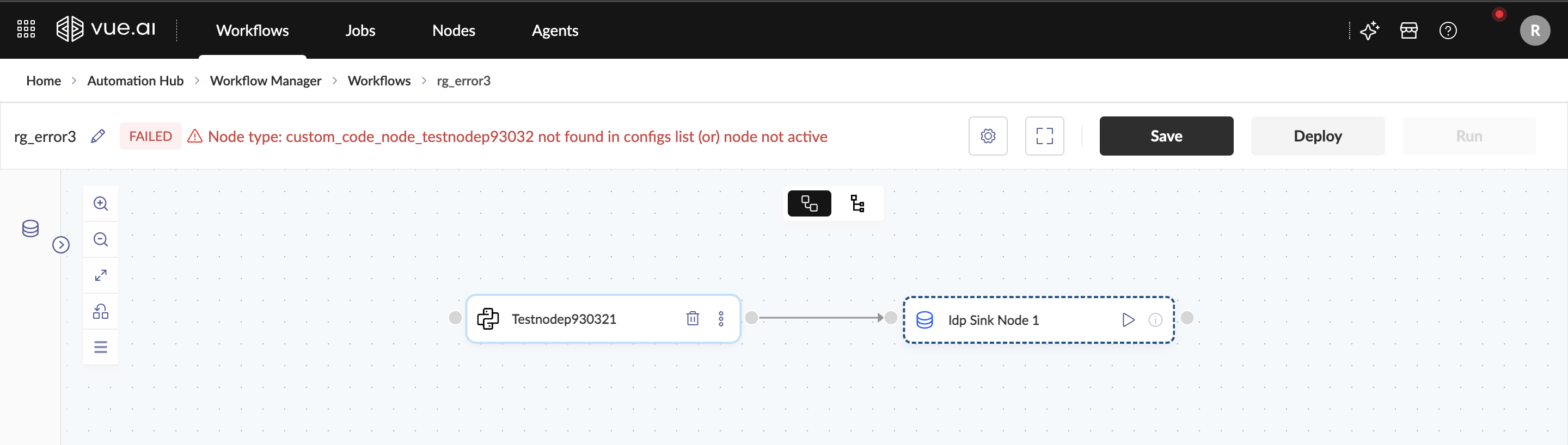

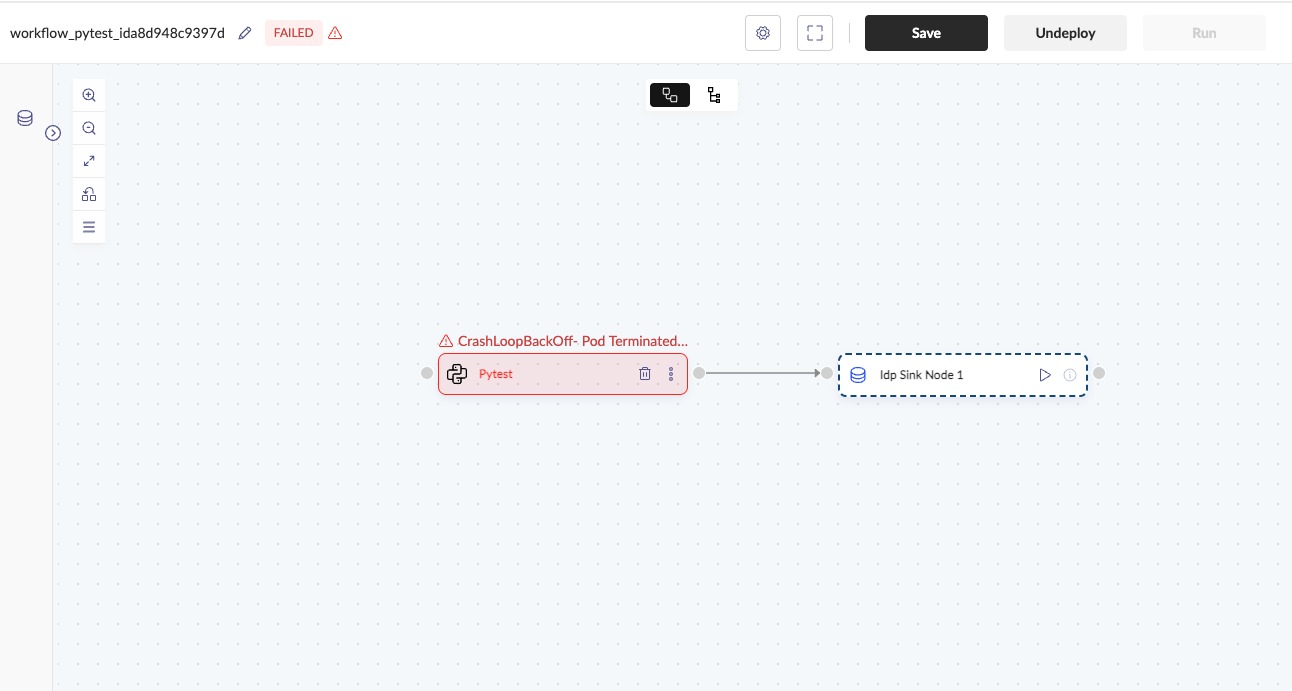

- Workflow Status: Indicates the current state of the workflow, with common statuses like DRAFT, DEPLOYING, DEPLOYED, and FAILED.

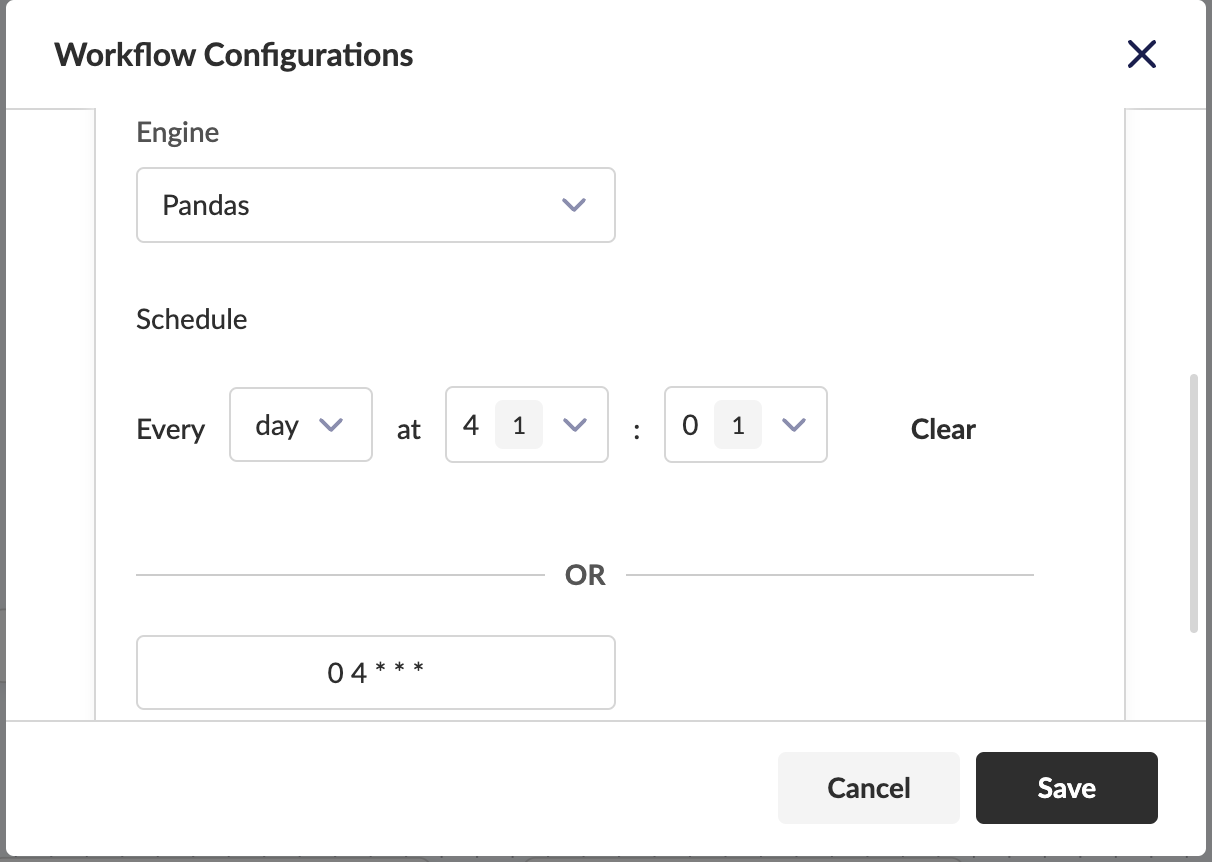

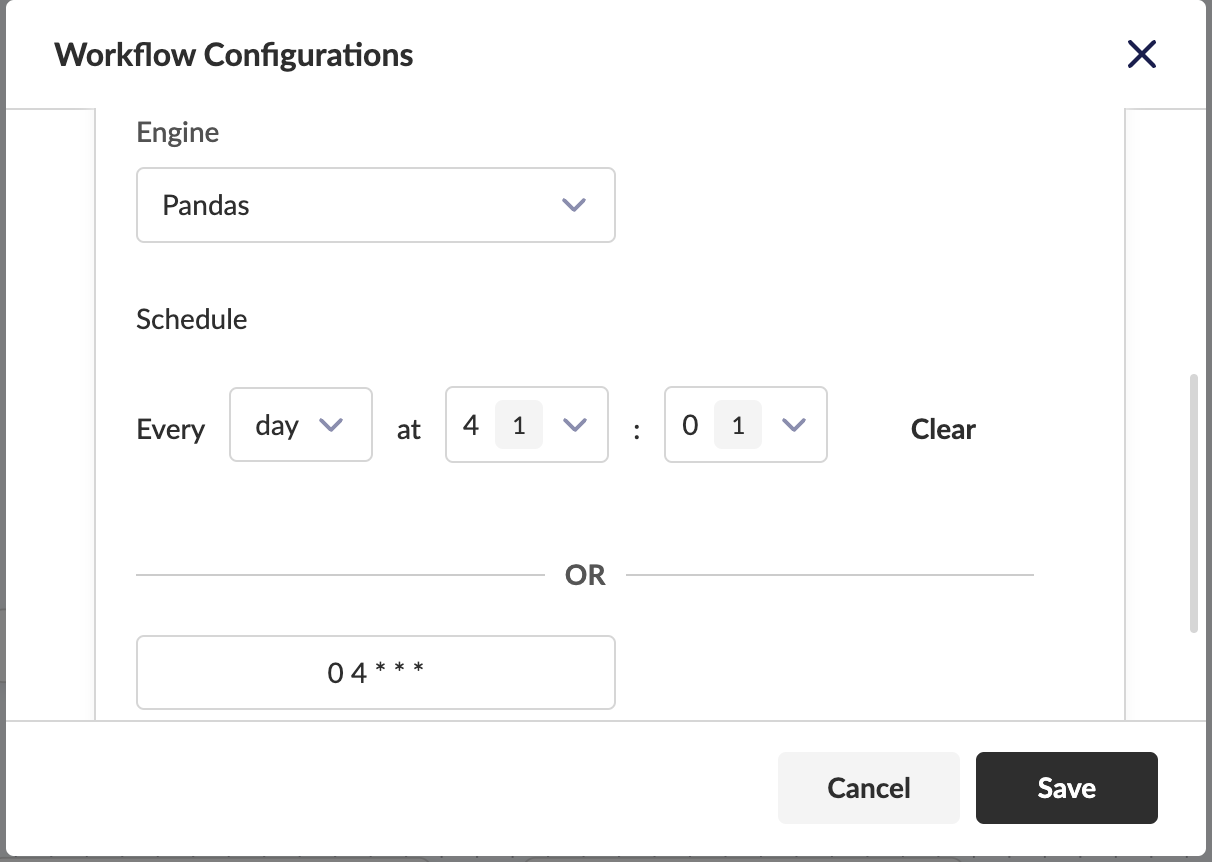

- Gear Icon - Workflow Configurations: Opens a settings menu where you can specify the workflow's runtime engine, schedule it, or choose to run it on sample data or the full dataset.

- Full Screen Icon: Switches the canvas to full-screen mode for a more focused view.

- Save Button: Saves the workflow manually, though autosave is also enabled.

- Deploy Button: Deploys the workflow to the selected engine.

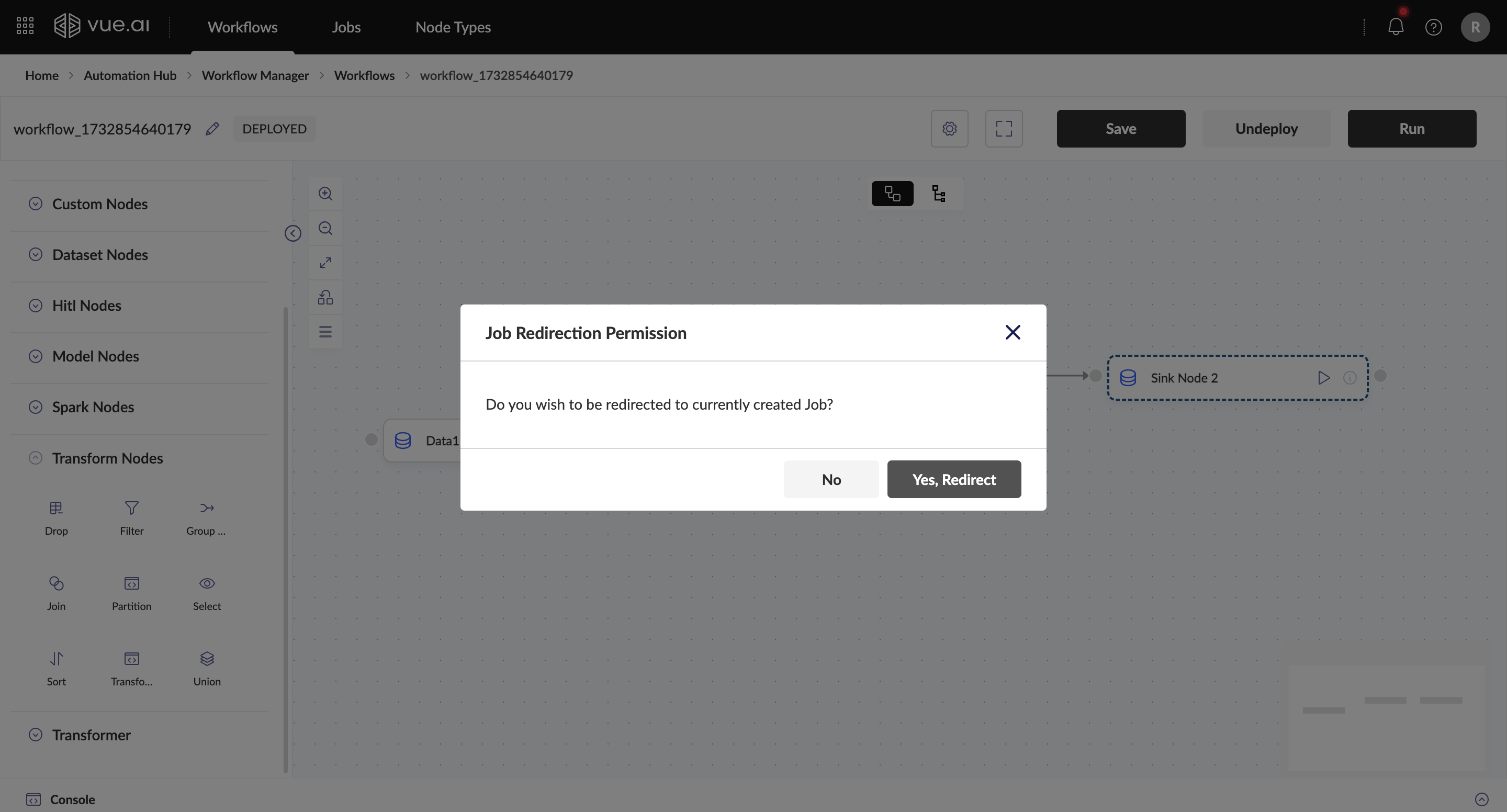

- Run Button: Becomes active after deployment is successful, initiating a job that can be viewed in real time.

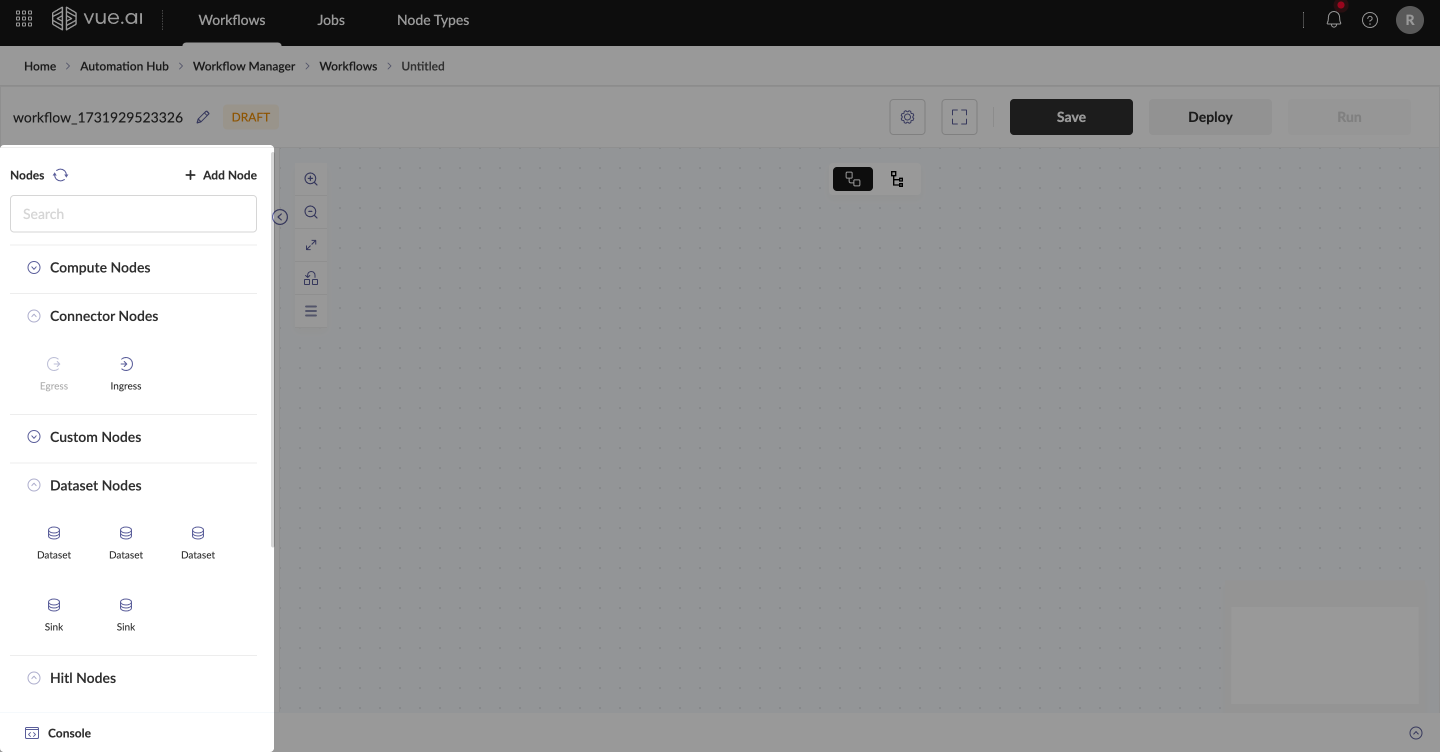

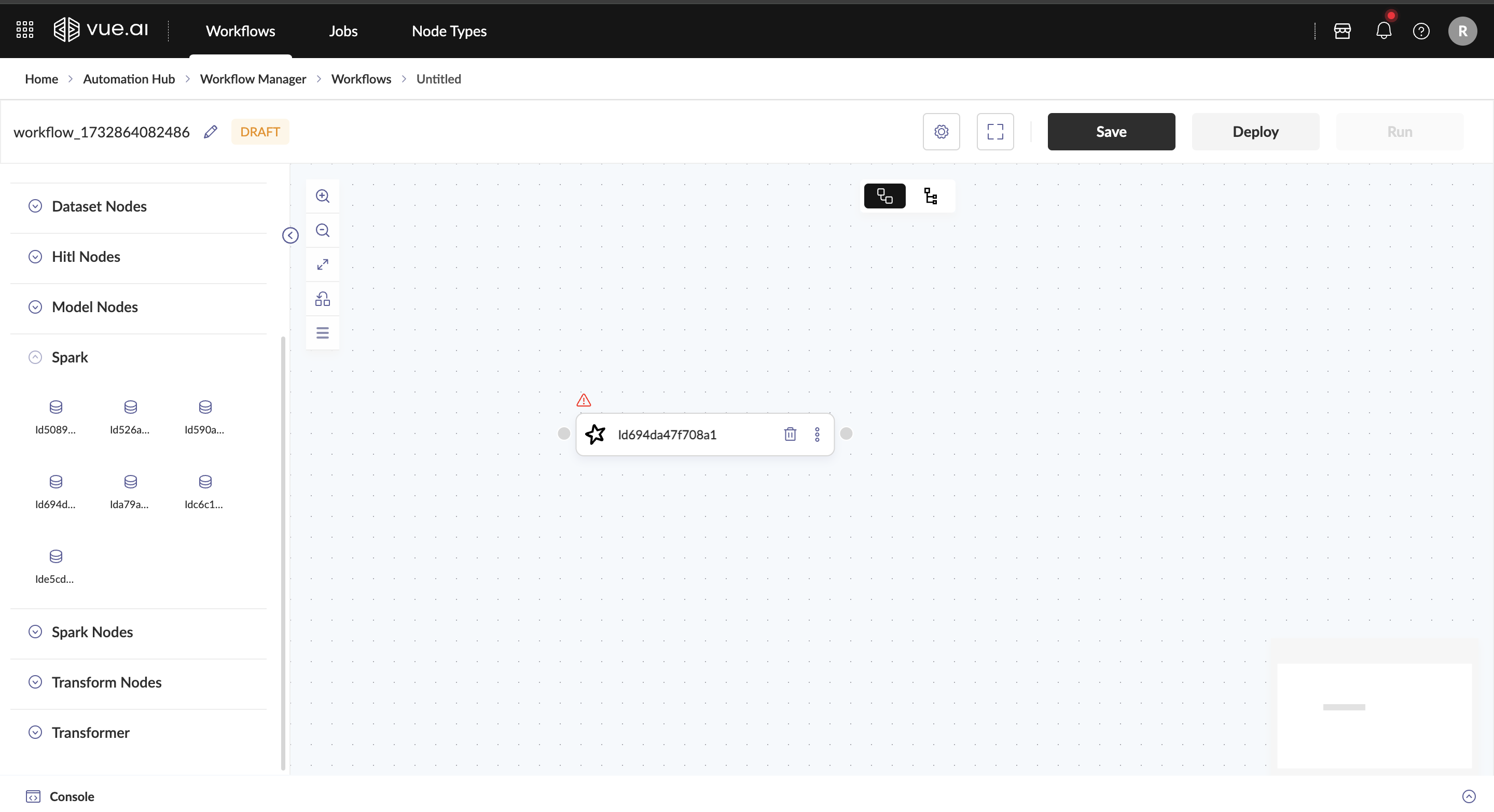

- Workflow Left Pane (Node Sidebar)

The left sidebar is where all nodes are located, offering various functionalities for building your workflow:

- Search: Quickly locate a specific node by name.

- Refresh Icon: Updates the node list, especially useful when new nodes have been added.

- Add Node: Opens a node creation page for building custom nodes.

- Drag & Drop: Drag nodes onto the Workflow Canvas to start connecting and building data pipelines. Each node has a unique Node Configuration panel displayed in the right pane when selected.

- Additional Sidebar Functions

- Zoom Controls: Zoom in/out, fit the view to screen, auto-arrange nodes, or use the outline view to see all nodes on the canvas at once.

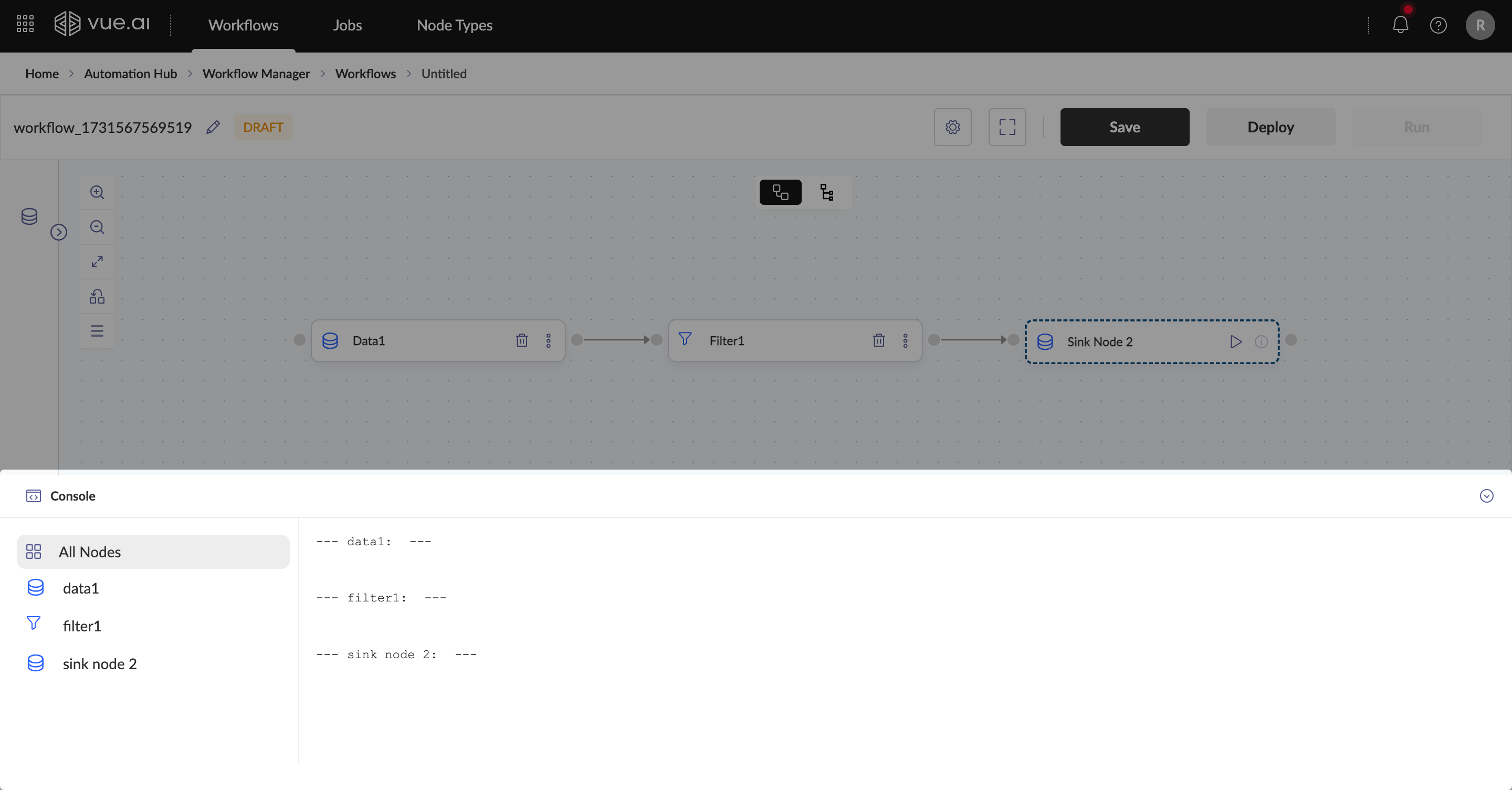

- Using the Console

The Console at the bottom of the screen shows output and error messages, specifically at the node level. This feature is valuable for debugging, allowing you to trace issues back to the specific node that encountered an error.

- Additional Workflow Canvas Features

- Workflow Arrangement Options: Choose between horizontal or vertical layout for workflow arrangement.

- Mini-Map View: Located at the bottom-right, this provides a consolidated view of the entire workflow, highlighting the visible section on your screen to help you navigate larger workflows.

Troubleshooting

Common Issues and Solutions

Problem 1: Debugging Issues Cause: A node in the workflow fails and the source of the error is unclear. Solution:

- Use the Console to trace errors back to their respective nodes.

Problem 2: Deployment Failures Cause: Incorrect configurations. Solution:

- Ensure all configurations are set correctly before deployment.

Problem 3: Workflow Not Running Cause: Workflow not successfully deployed. Solution:

- Confirm the workflow is successfully deployed before execution.

Additional Information

The Workflow Canvas allows workflows to be scheduled for automated execution. Workflow configurations can also be adjusted to optimize performance.

- The workflow's control flow follows the sequence in which nodes are added to the canvas.

- Ensure appropriate node usage: Transform nodes and Custom Code nodes cannot be used together.

- Keep node names concise and clear for better readability on the canvas, ensuring smoother workflow deployment.

FAQs

How do I create a new workflow?

- Navigate to Automation Hub → Workflow Manager → Workflows

- Click the "New Workflow" button at the top-left of the Workflows Listing screen

- This will open the Workflow Canvas interface where you can start building your workflow

How do I save my workflow?

Workflows can be saved in two ways:

- Automatically through the autosave feature

- Manually by clicking the Save button in the top bar of the Workflow Canvas

What are the different workflow statuses?

Common workflow statuses include:

- DRAFT: Initial state of a new workflow

- DEPLOYING: Workflow is in the process of being deployed

- DEPLOYED: Workflow has been successfully deployed

- FAILED: Deployment or execution has failed

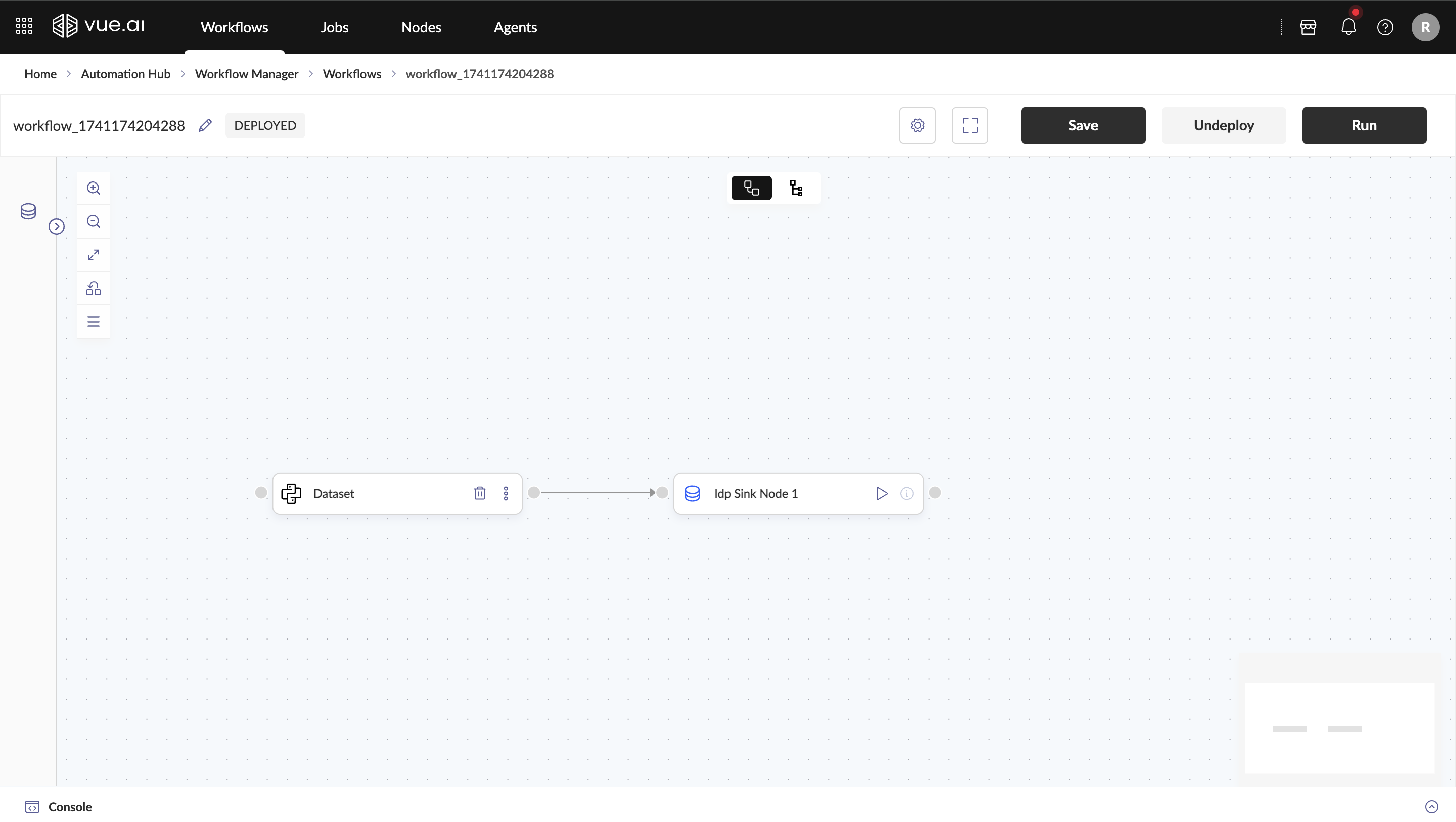

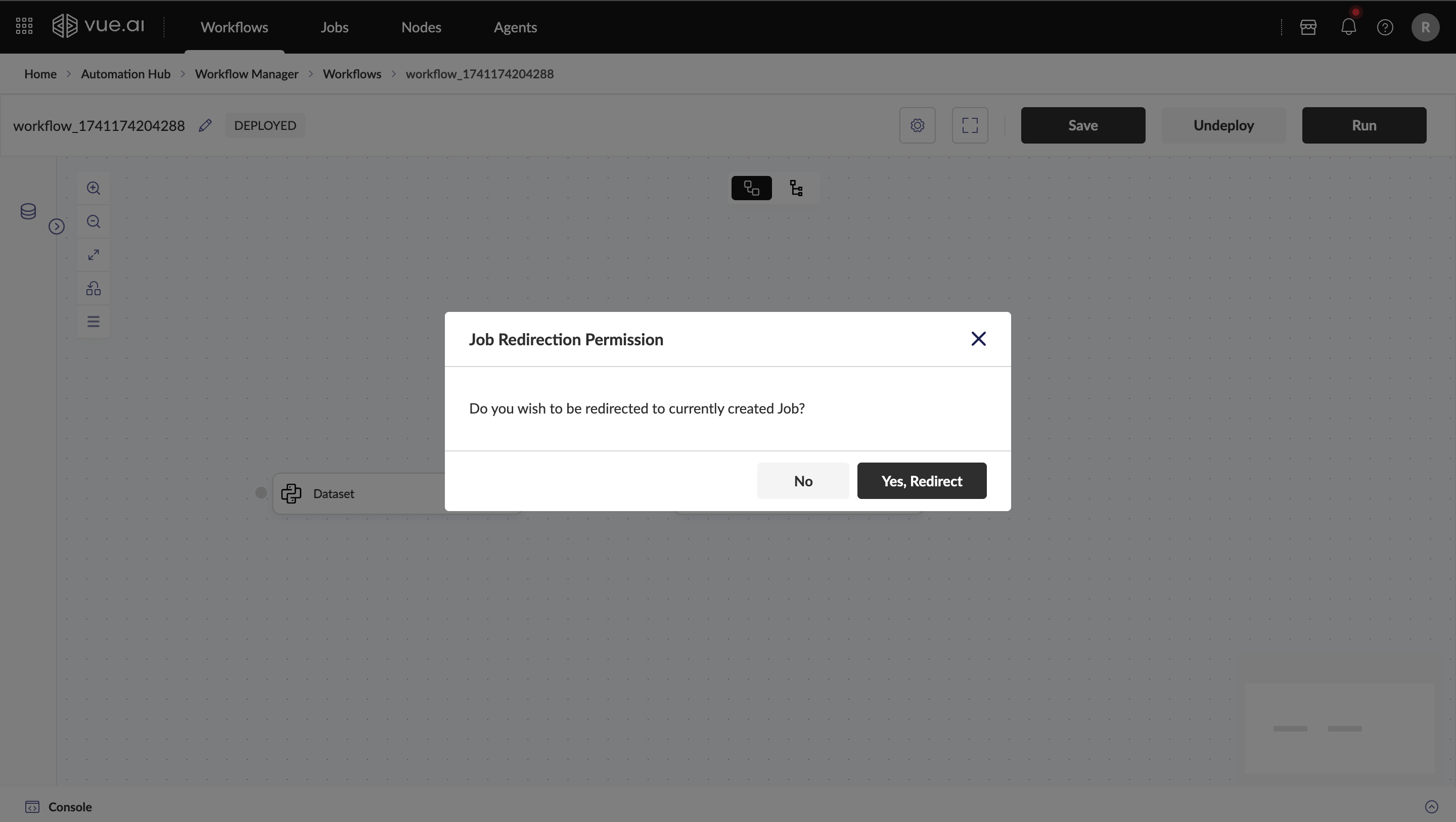

How do I deploy and run a workflow?

- Click the Deploy button in the top bar

- Wait for the status to change to DEPLOYED

- Once deployed, the Run button will become active

- Click Run to initiate the workflow job
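The deploy-then-run sequence above can be pictured as a simple lookup from workflow status to the next user action. This is an illustrative sketch only: the status names come from this guide, while the enum, the function names, and the wording of each suggested step are assumptions rather than platform behavior.

```python
from enum import Enum


class WorkflowStatus(Enum):
    DRAFT = "DRAFT"
    DEPLOYING = "DEPLOYING"
    DEPLOYED = "DEPLOYED"
    FAILED = "FAILED"


def run_enabled(status: WorkflowStatus) -> bool:
    """The Run button is active only after a successful deployment."""
    return status is WorkflowStatus.DEPLOYED


def next_step(status: WorkflowStatus) -> str:
    """Suggest what to do next for a given status (illustrative wording)."""
    if status is WorkflowStatus.DRAFT:
        return "Click Deploy"
    if status is WorkflowStatus.DEPLOYING:
        return "Wait for deployment to finish"
    if status is WorkflowStatus.DEPLOYED:
        return "Click Run"
    return "Review the configuration and the Console, then deploy again"


for status in WorkflowStatus:
    print(f"{status.value}: {next_step(status)} (run enabled: {run_enabled(status)})")
```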

How can I debug issues in my workflow?

You can use the Console at the bottom of the screen, which shows:

- Output messages from nodes

- Error messages at the node level

- Specific node-related issues for debugging

How do I add nodes to my workflow?

There are two ways to add nodes:

- Drag & Drop: Drag nodes from the left sidebar onto the Workflow Canvas

- Right-click on the canvas and select nodes from the context menu

How can I navigate large workflows?

You can use several navigation features:

- Zoom controls to zoom in/out

- Fit to screen option

- Mini-Map view at the bottom-right

- Auto-arrange nodes feature

- Outline view to see all nodes

Can I schedule workflows?

Yes, you can schedule workflows through the Workflow Configurations menu (gear icon) in the top bar, where you can specify when and how often the workflow should run.

Summary

This guide covered the following key points:

- Navigating to the Workflows Listing page

- Creating a new workflow and accessing the Workflow Canvas

- An overview of key Workflow Canvas features and functionalities

- Deployment, execution, and debugging techniques

With these insights, users are now equipped to create, deploy, and manage workflows efficiently using the Workflow Canvas.

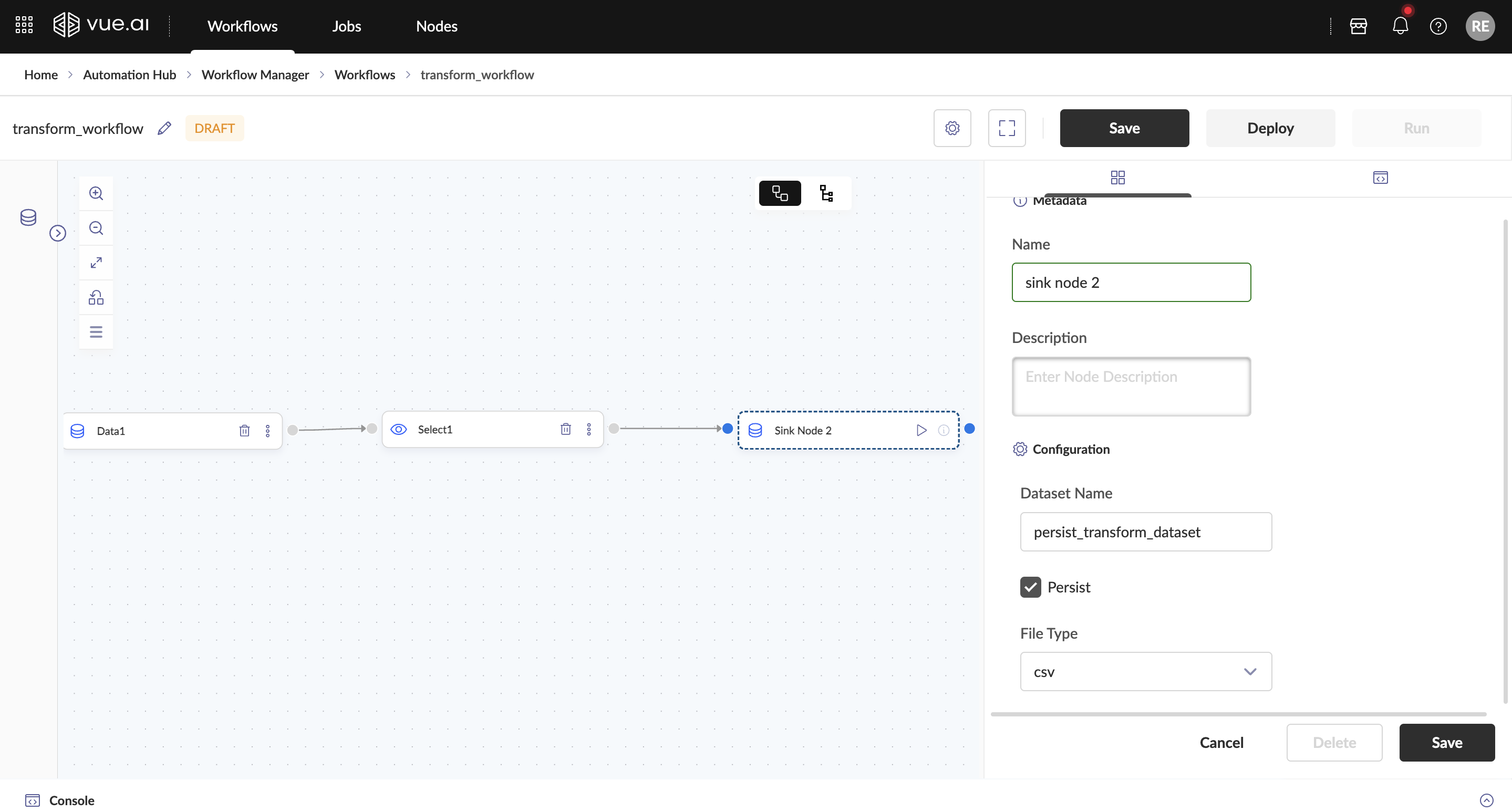

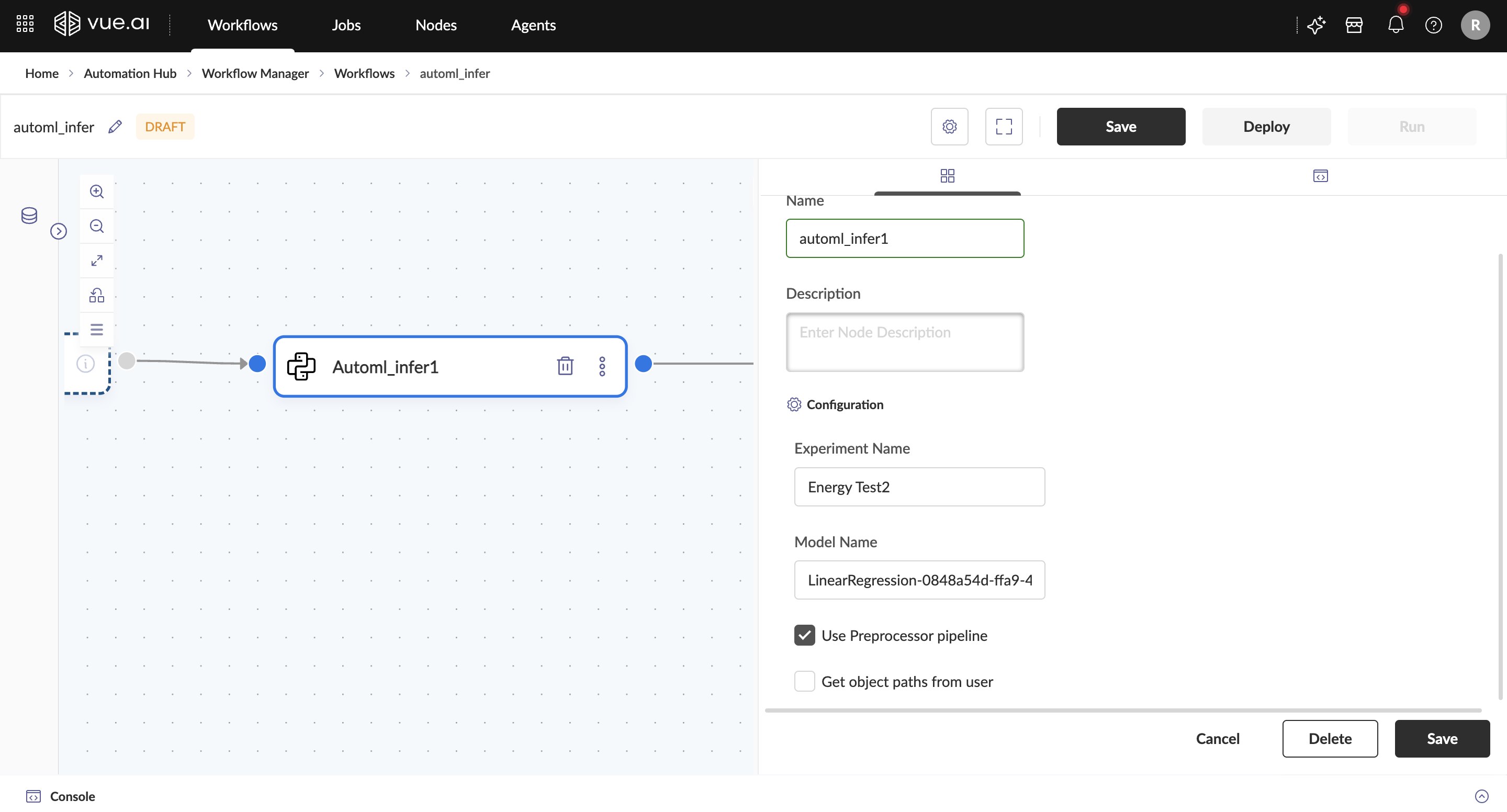

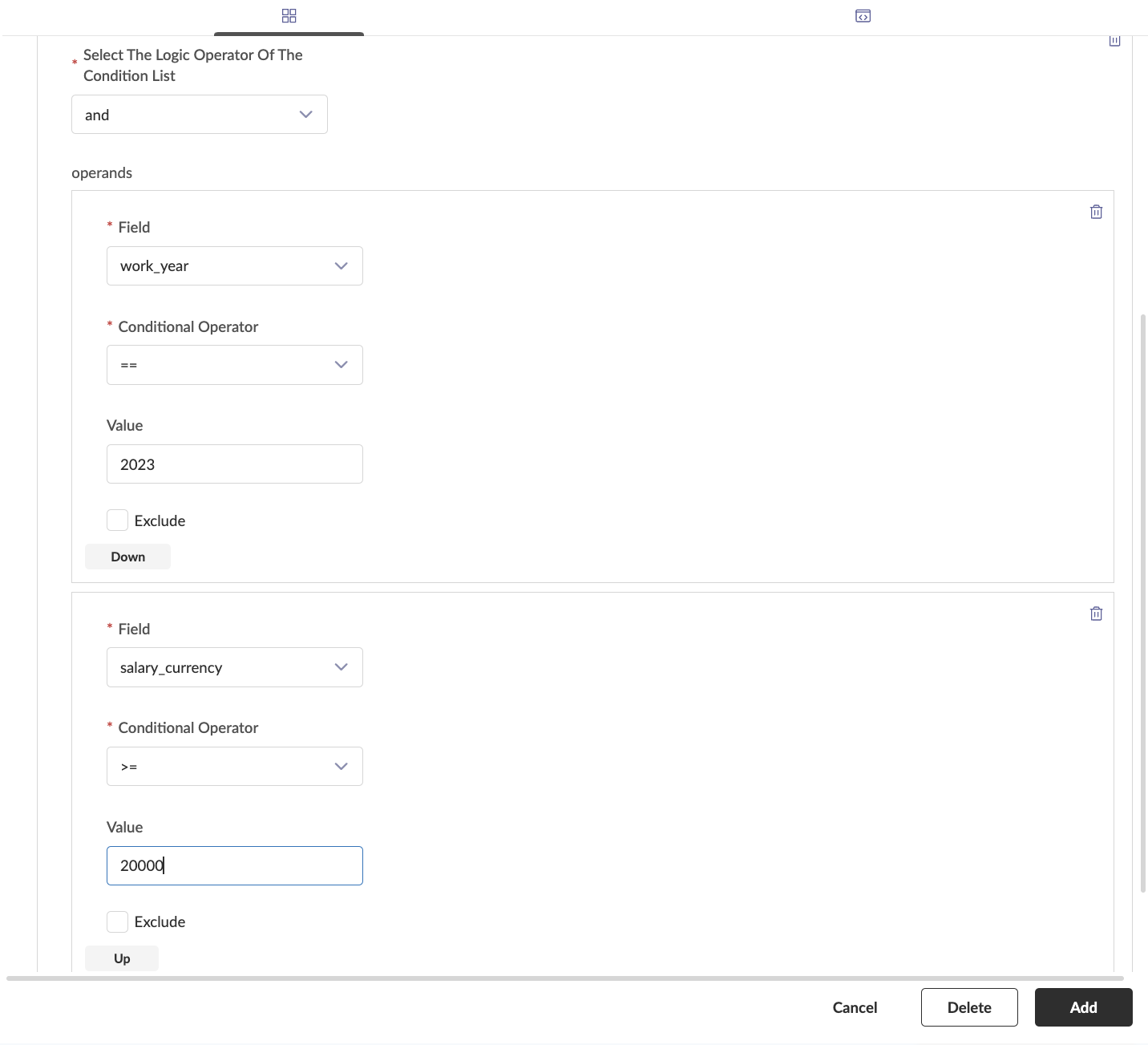

Transform Node Workflows

Welcome to the Transform Node Workflows Overview! This guide will help users understand the features and benefits of Transform Node Workflows and learn how to create, configure, and deploy these workflows effectively.

Who is this guide for? This guide is designed for users of the Vue.ai platform.

Ensure access to the Vue.ai platform and familiarity with basic workflow concepts before starting.

Overview

Transform Node Workflows enable users to:

- Create automated data processing pipelines.

- Perform operations like filtering, joining, aggregating, and restructuring data.

Prerequisites Before beginning, ensure that:

- The Vue.ai platform is accessible.

- The Getting Started with Workflows documentation has been reviewed for basic information on workflows.

Step-by-Step Instructions

Creating a Transform Node Workflow

Follow these steps to create a Transform Node Workflow:

Navigate to the Workflows Listing Page

- Go to Automation Hub → Workflow Manager → Workflows.

Create a New Workflow

- Click + New Workflow to create a new workflow canvas.

- To rename the workflow, click the Edit button and modify the name.

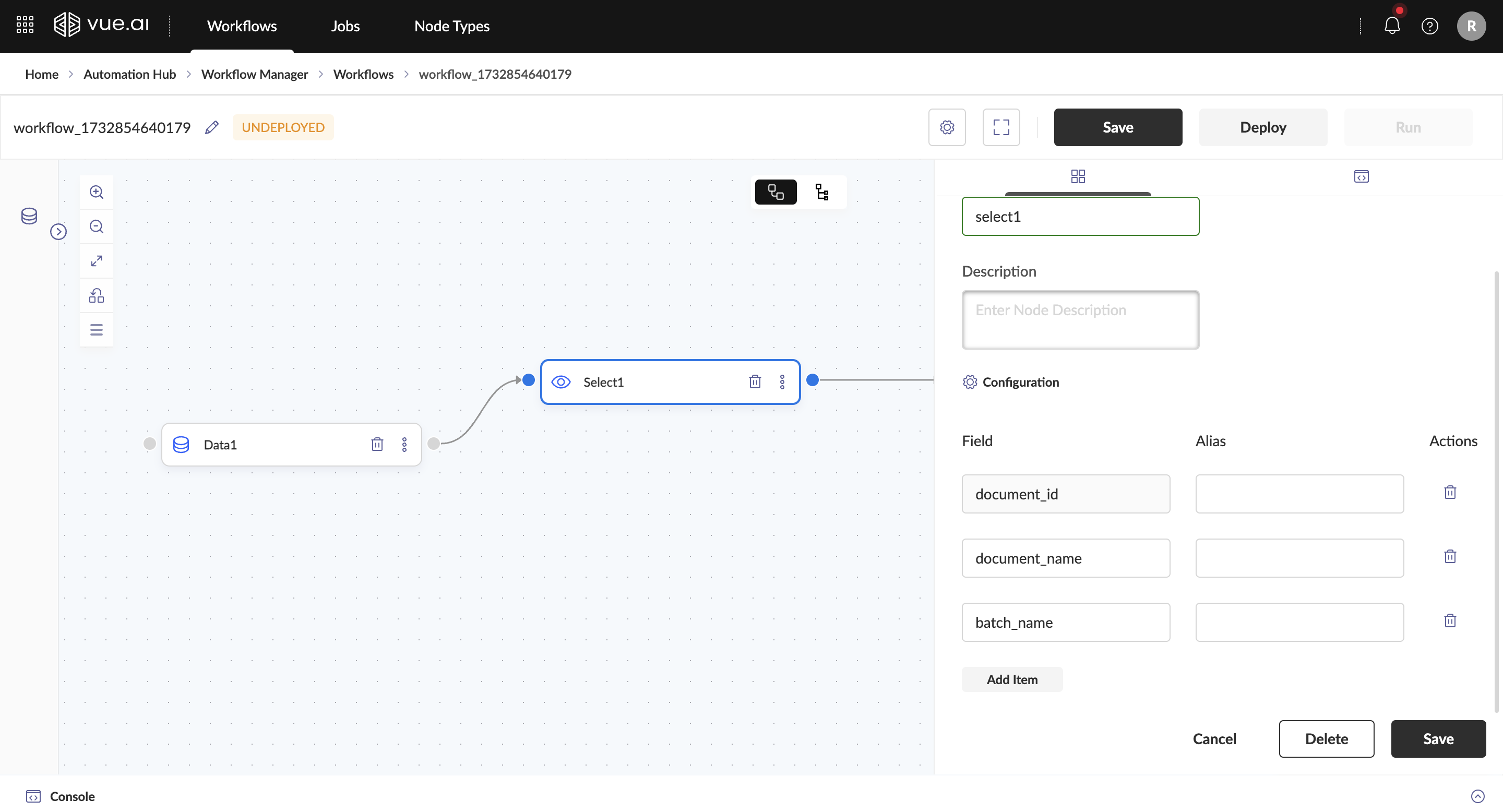

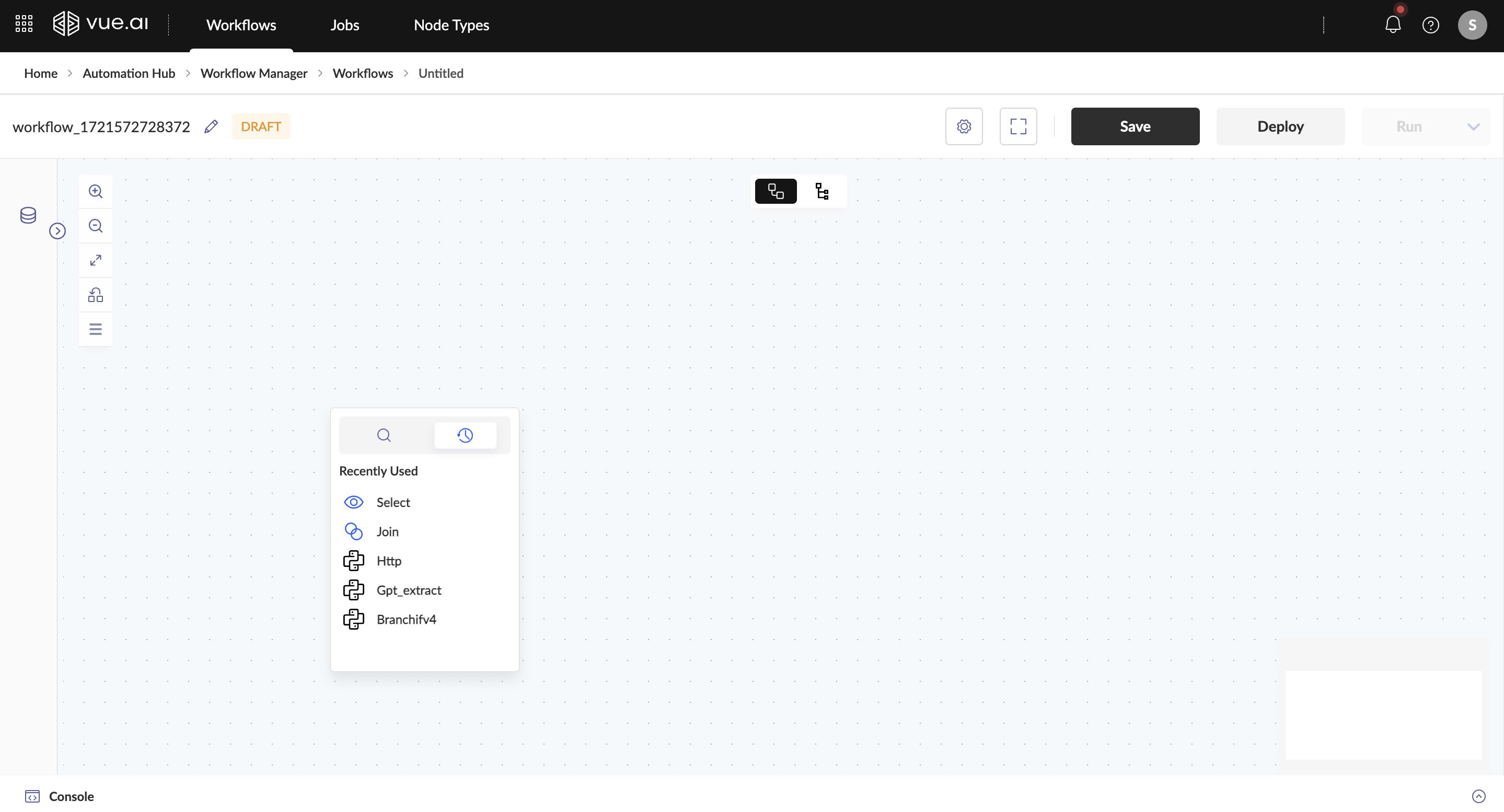

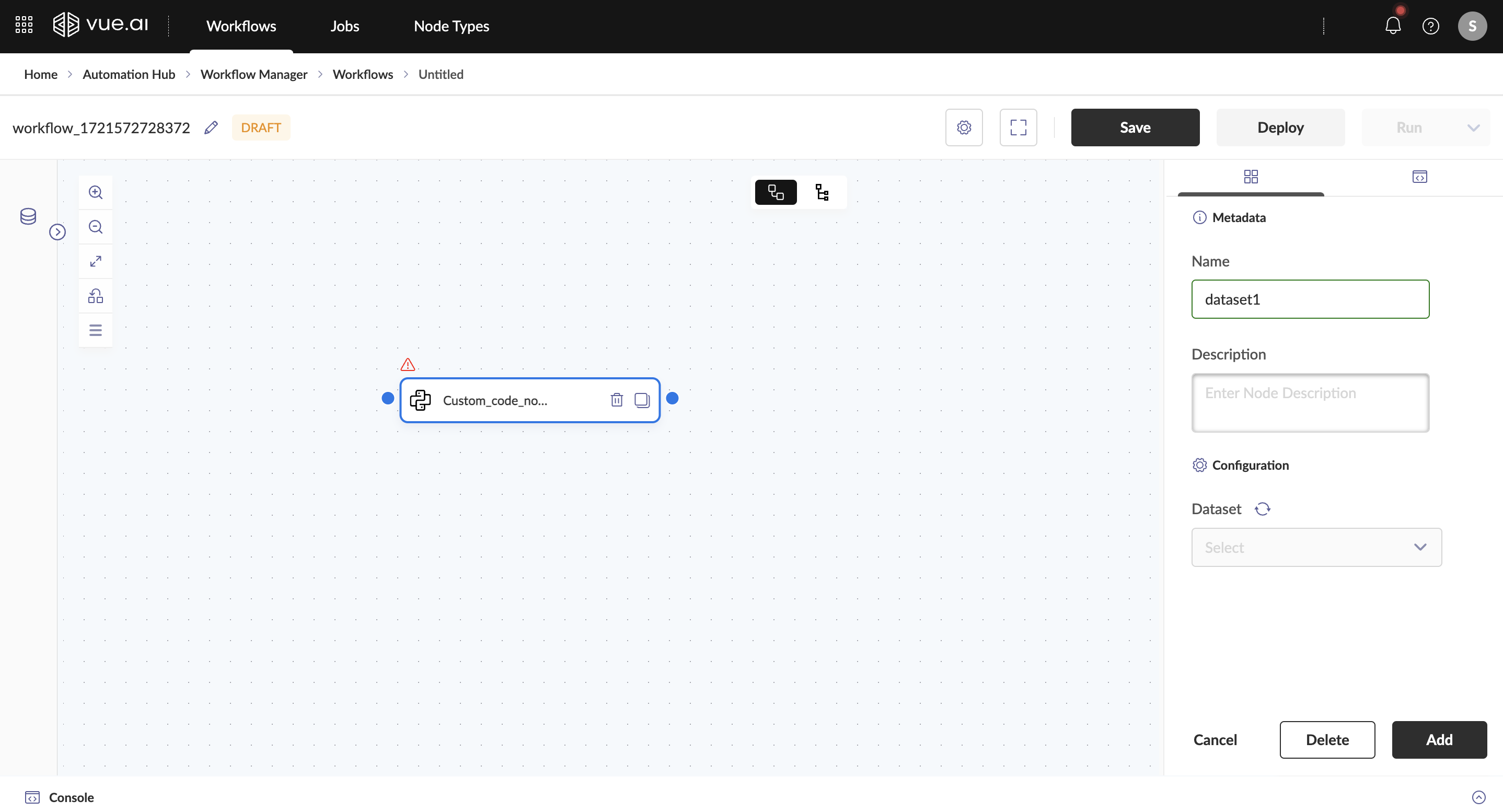

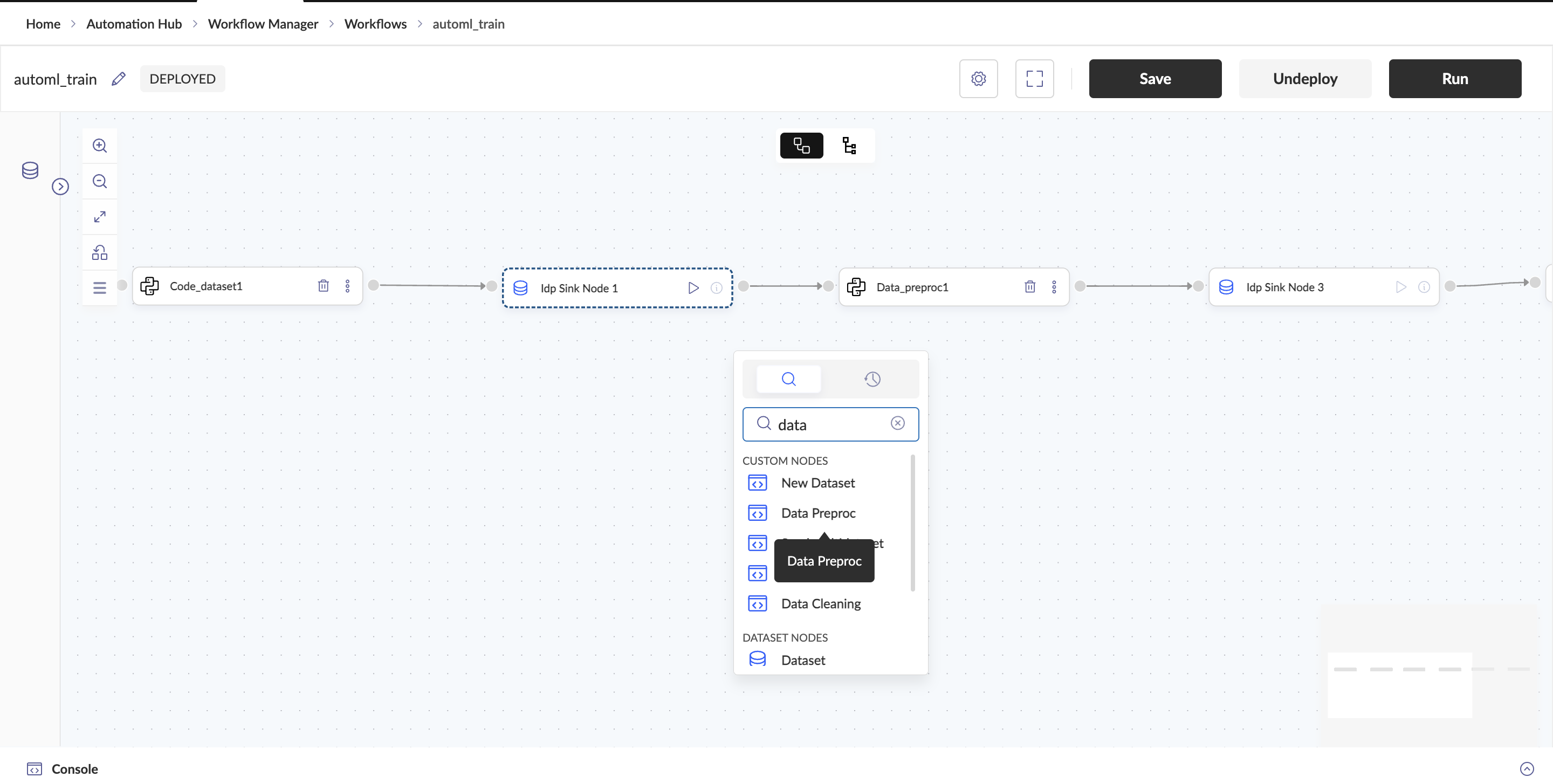

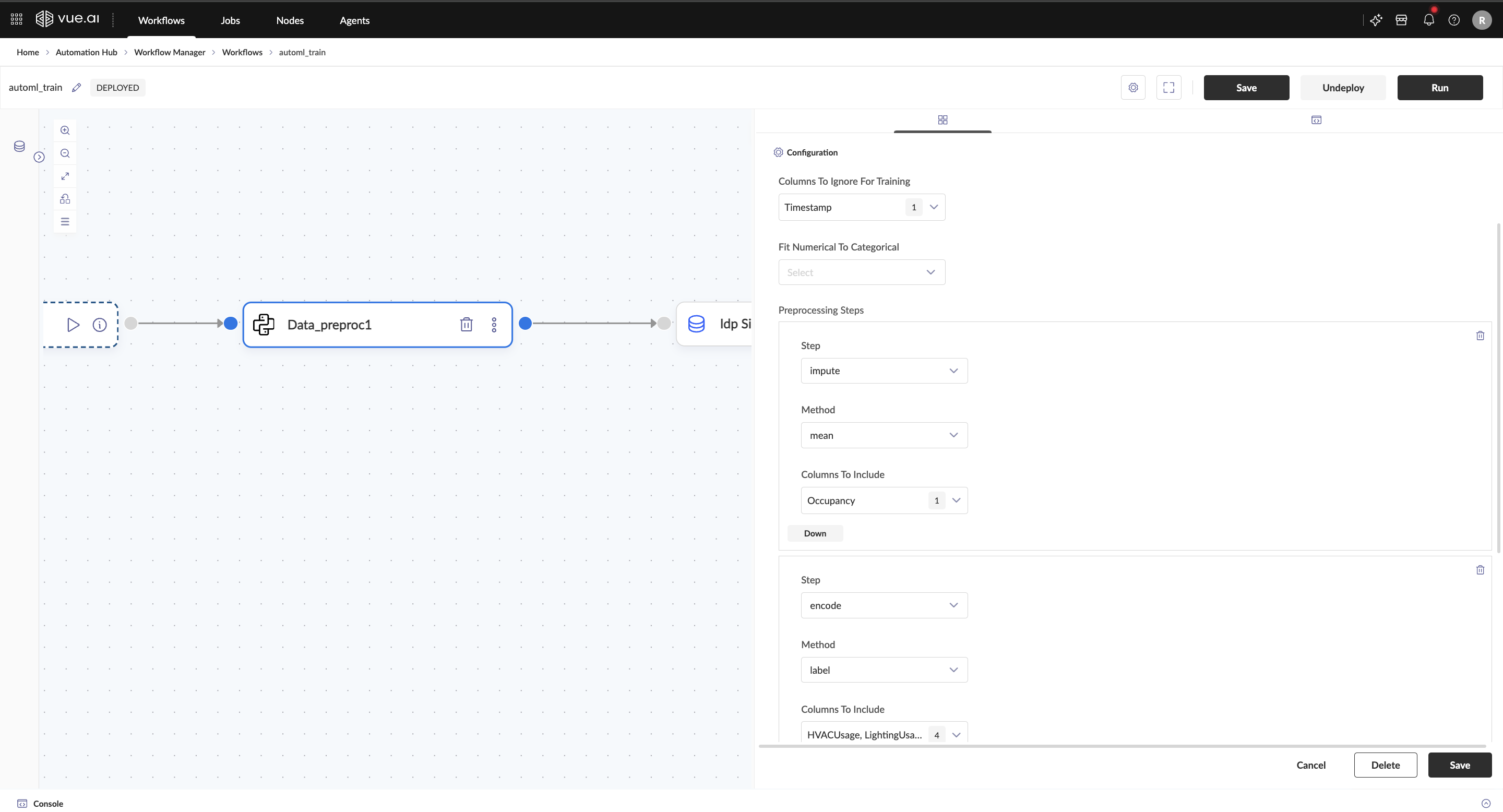

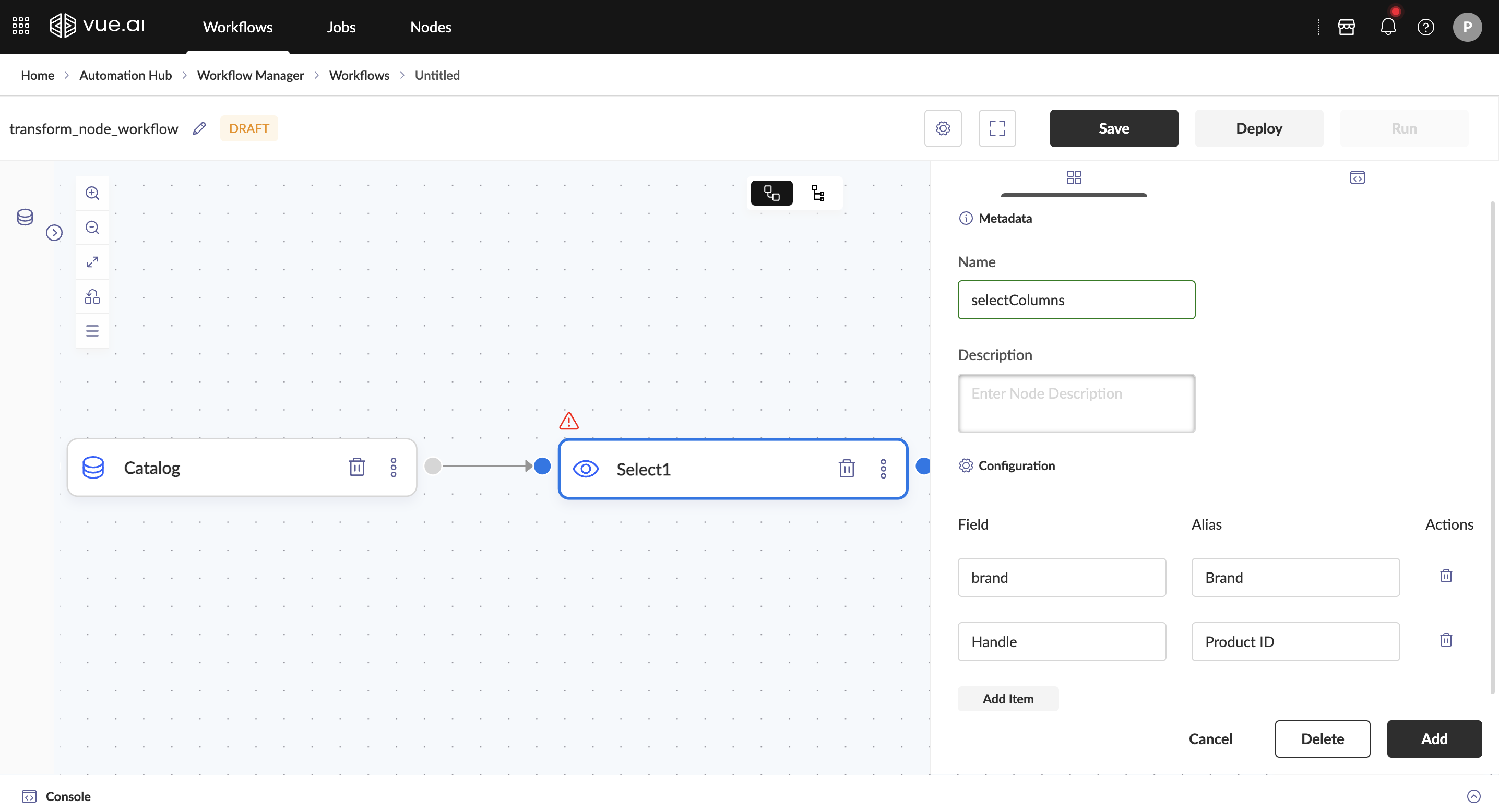

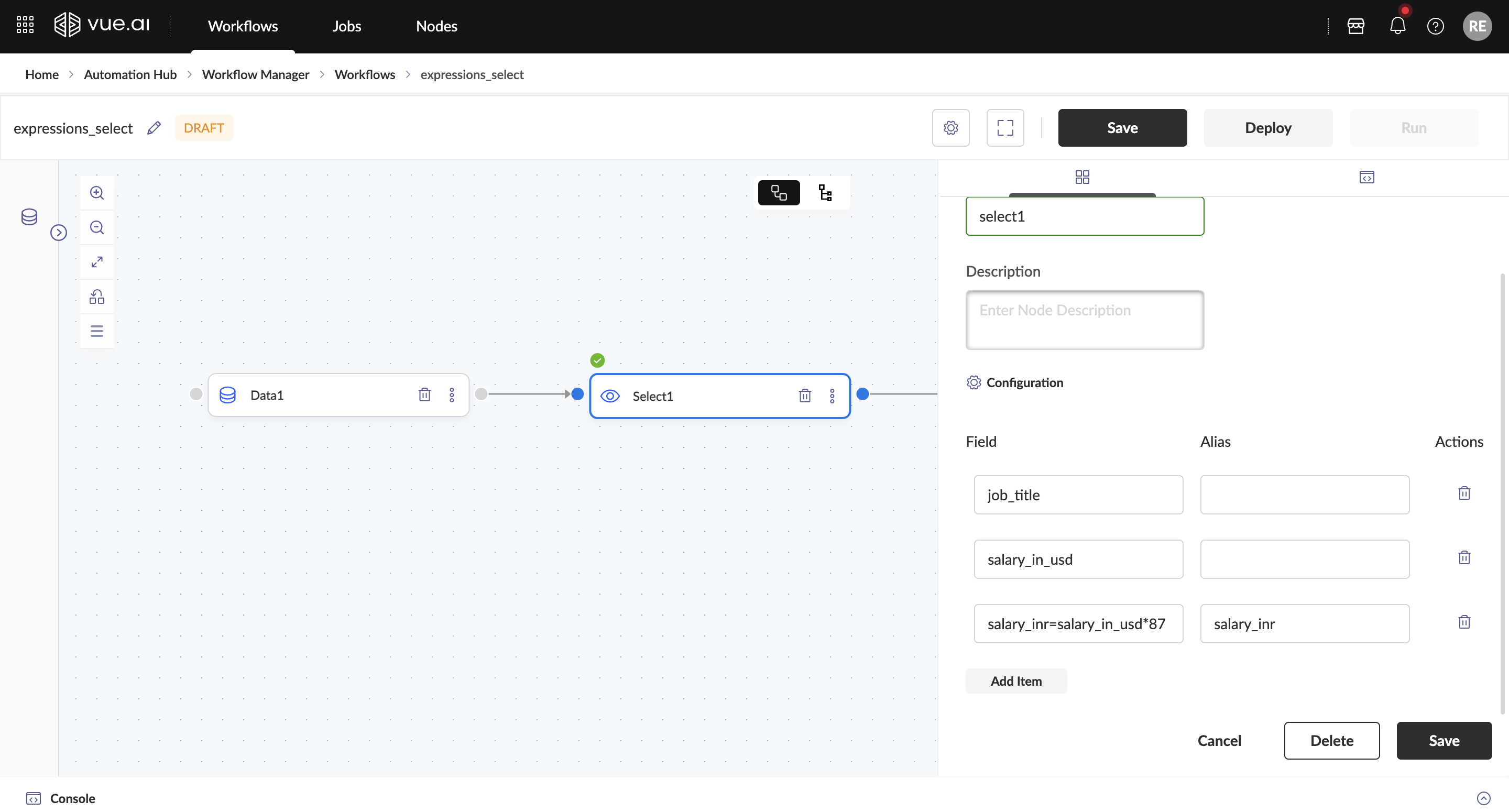

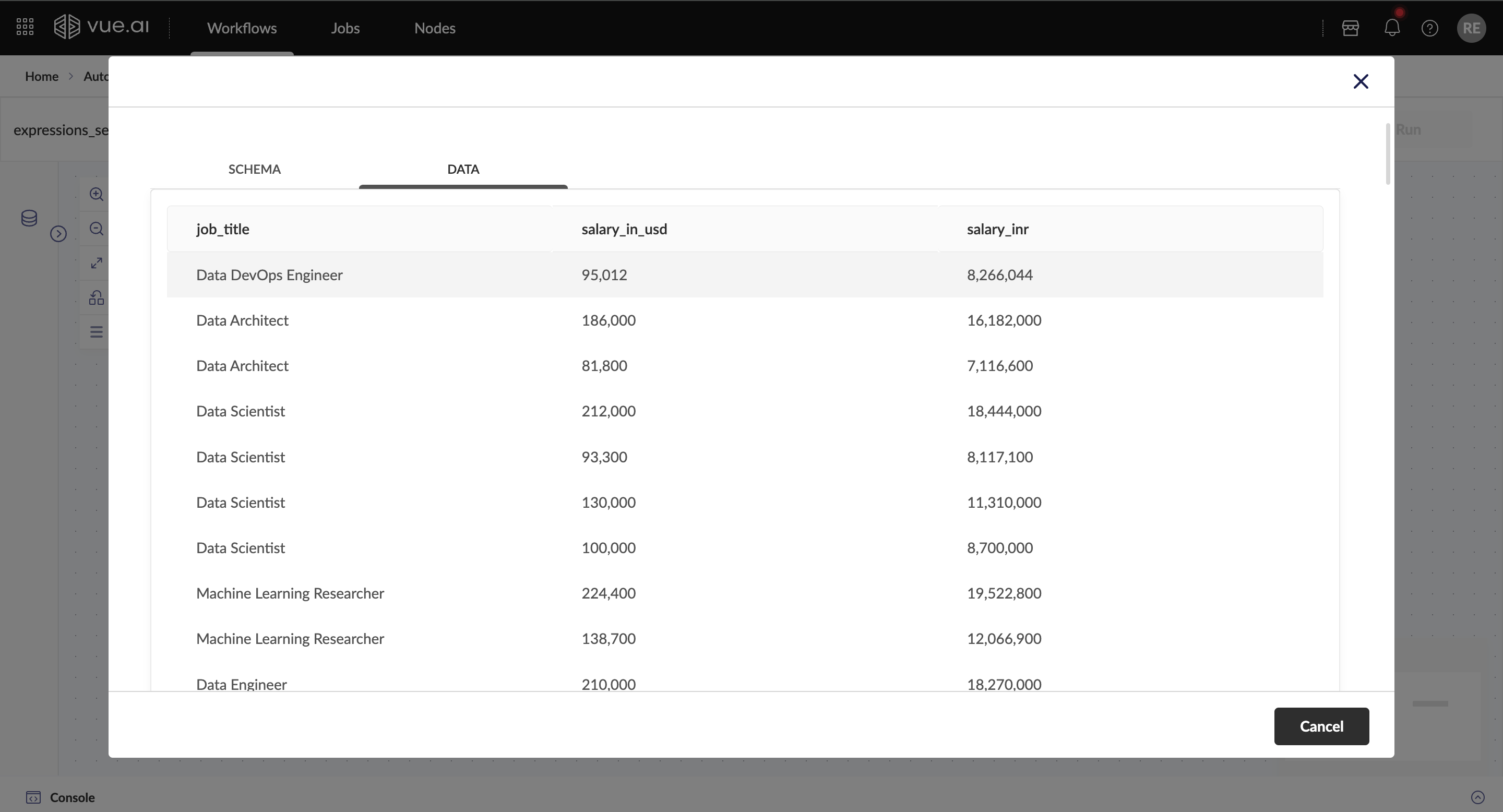

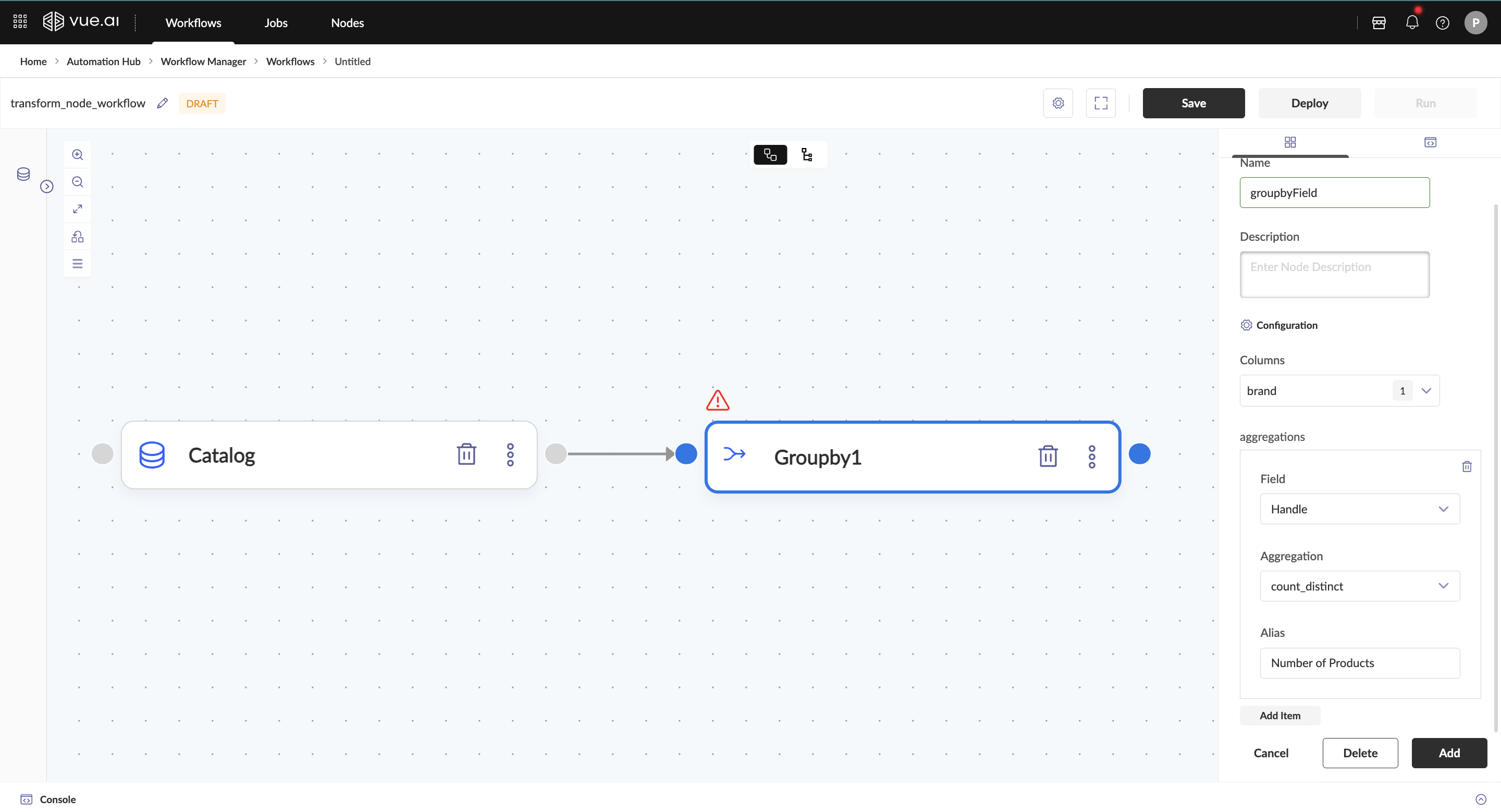

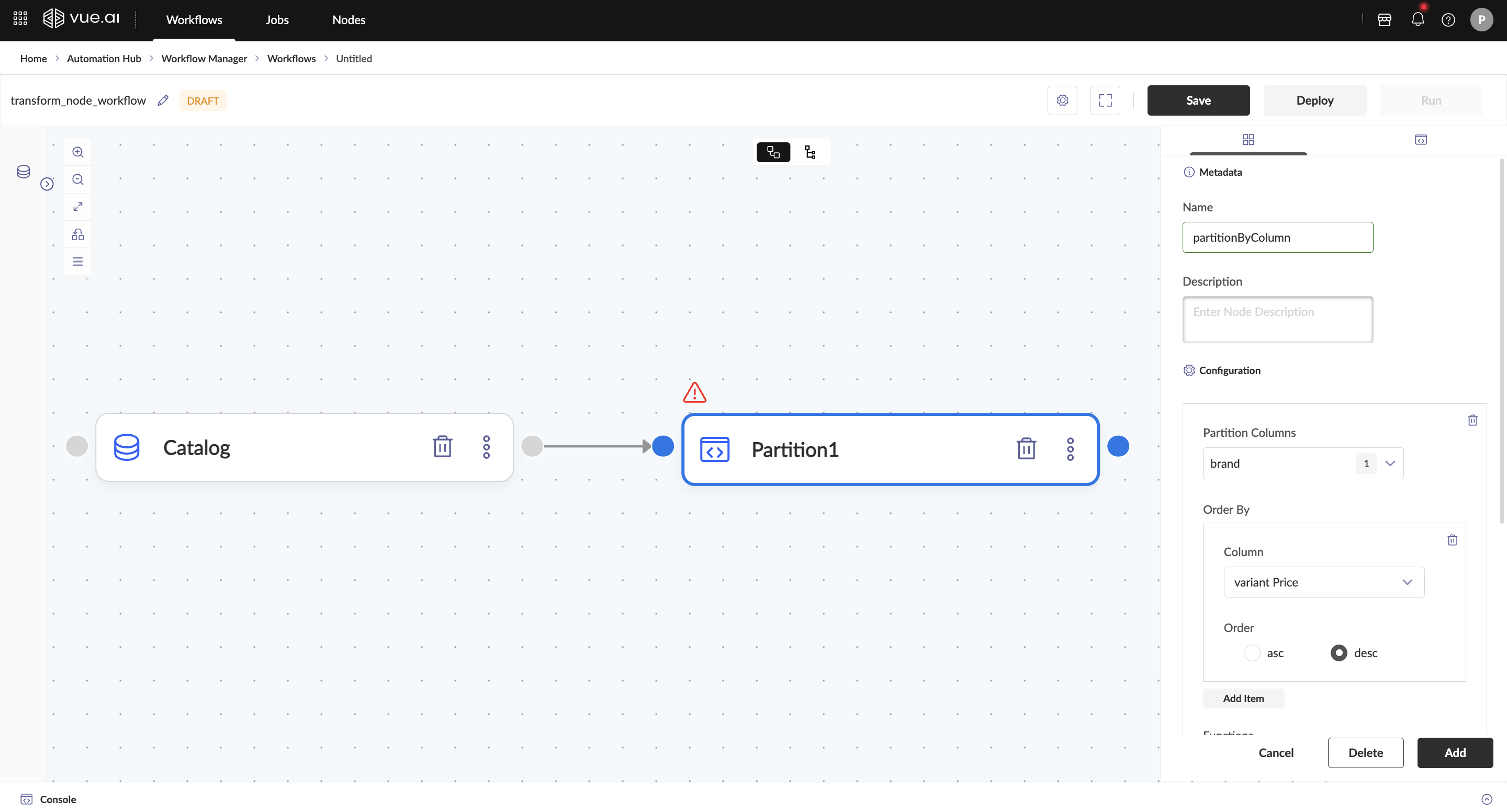

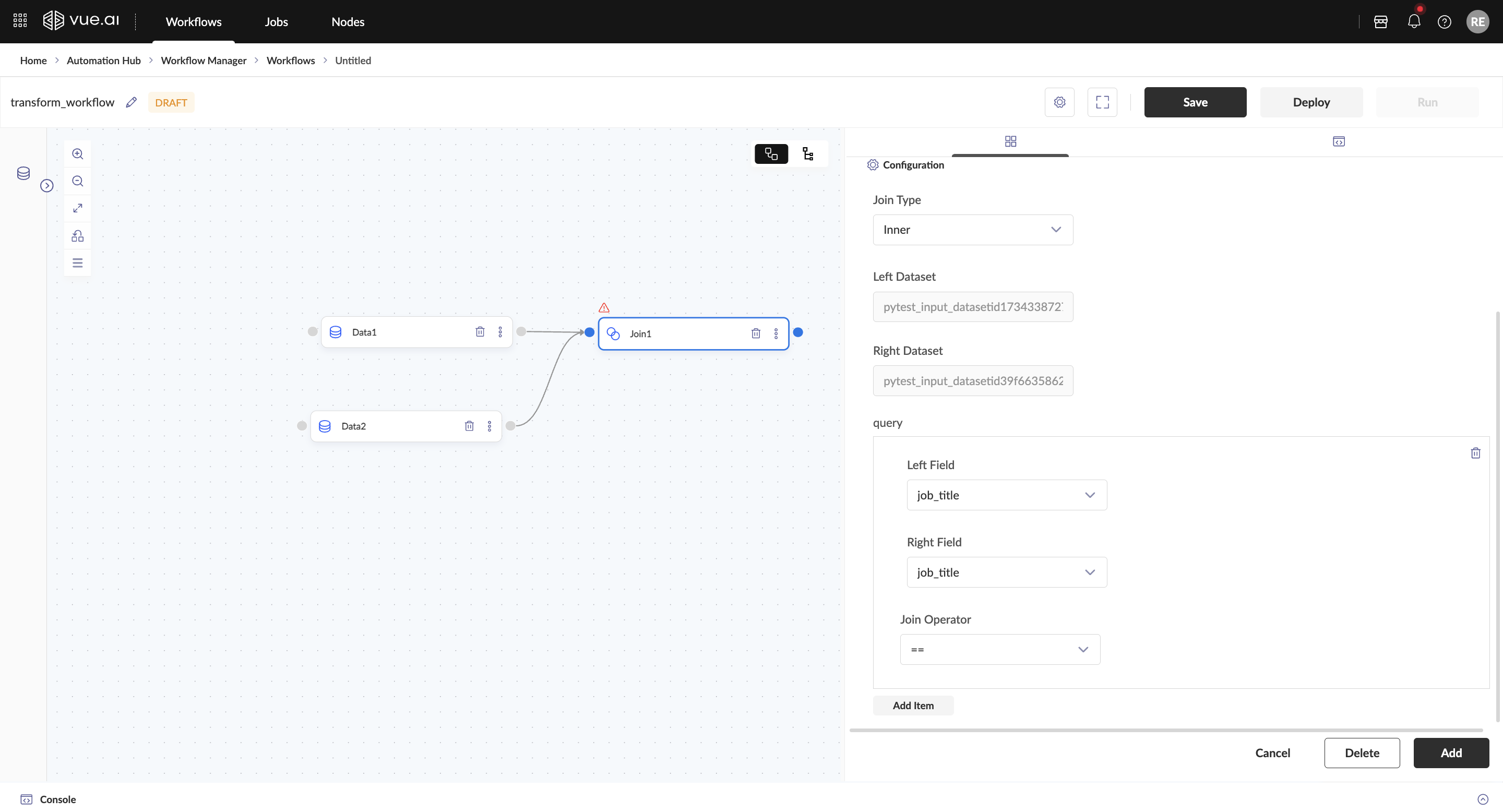

Build the Workflow

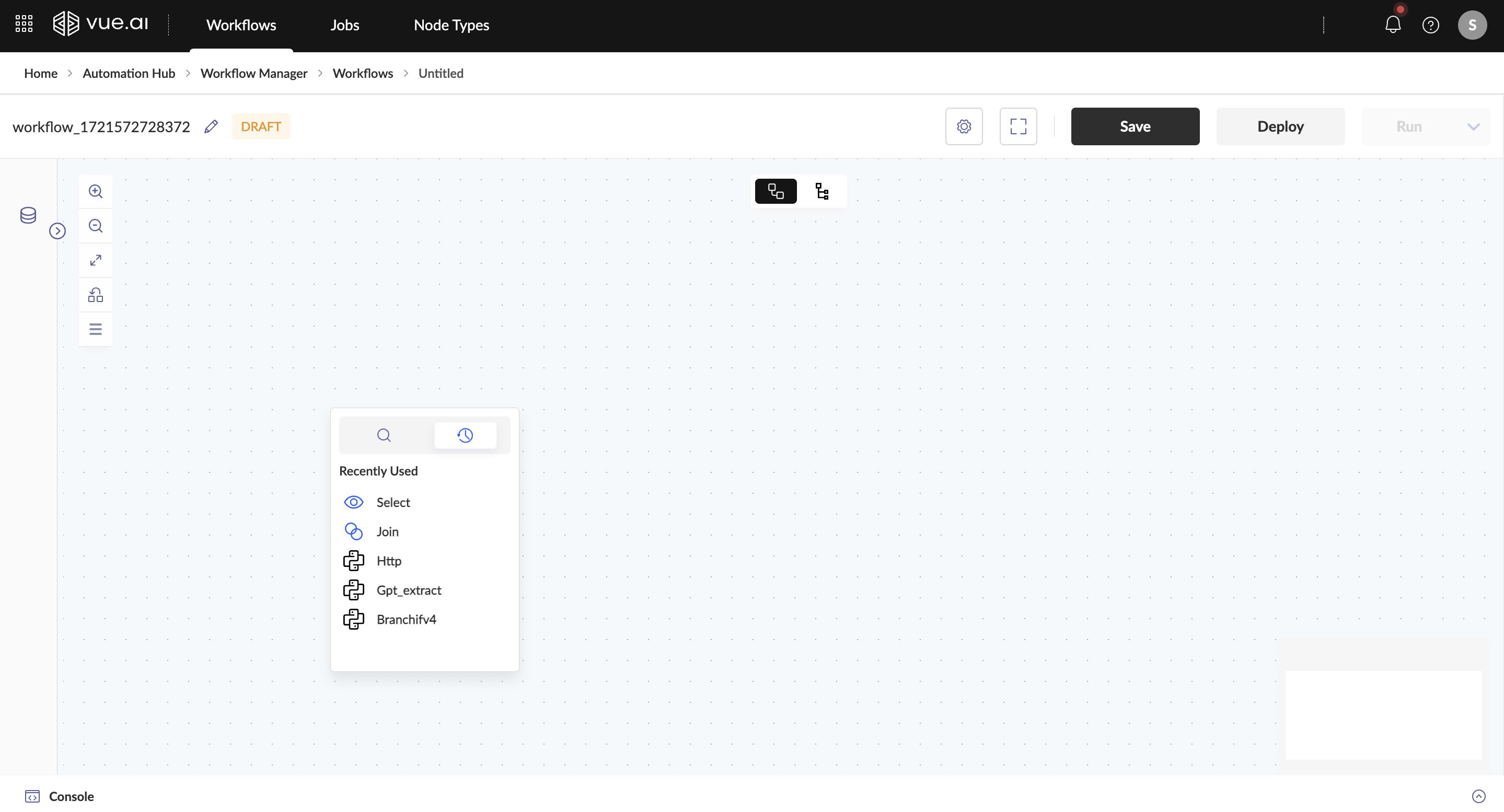

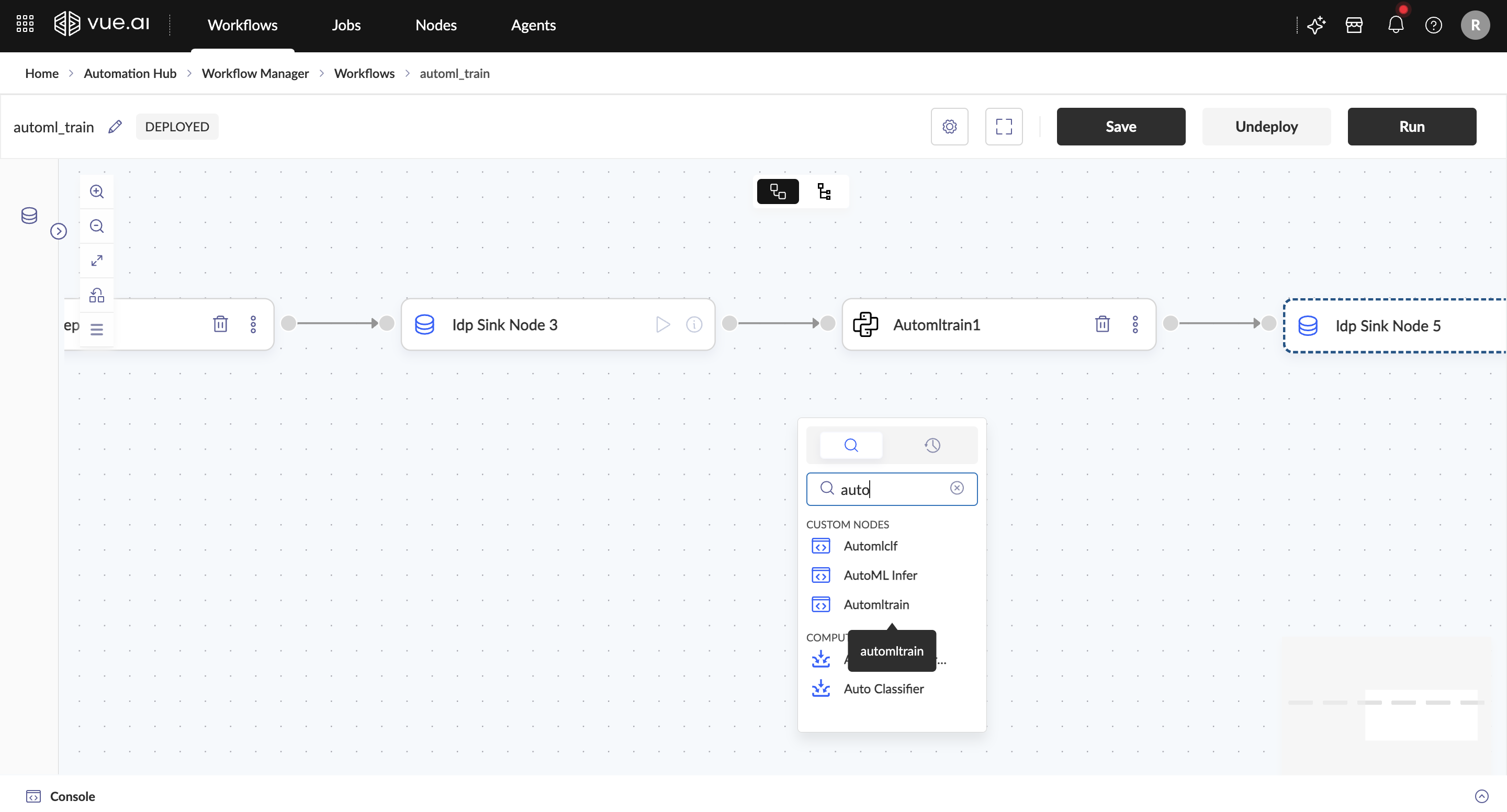

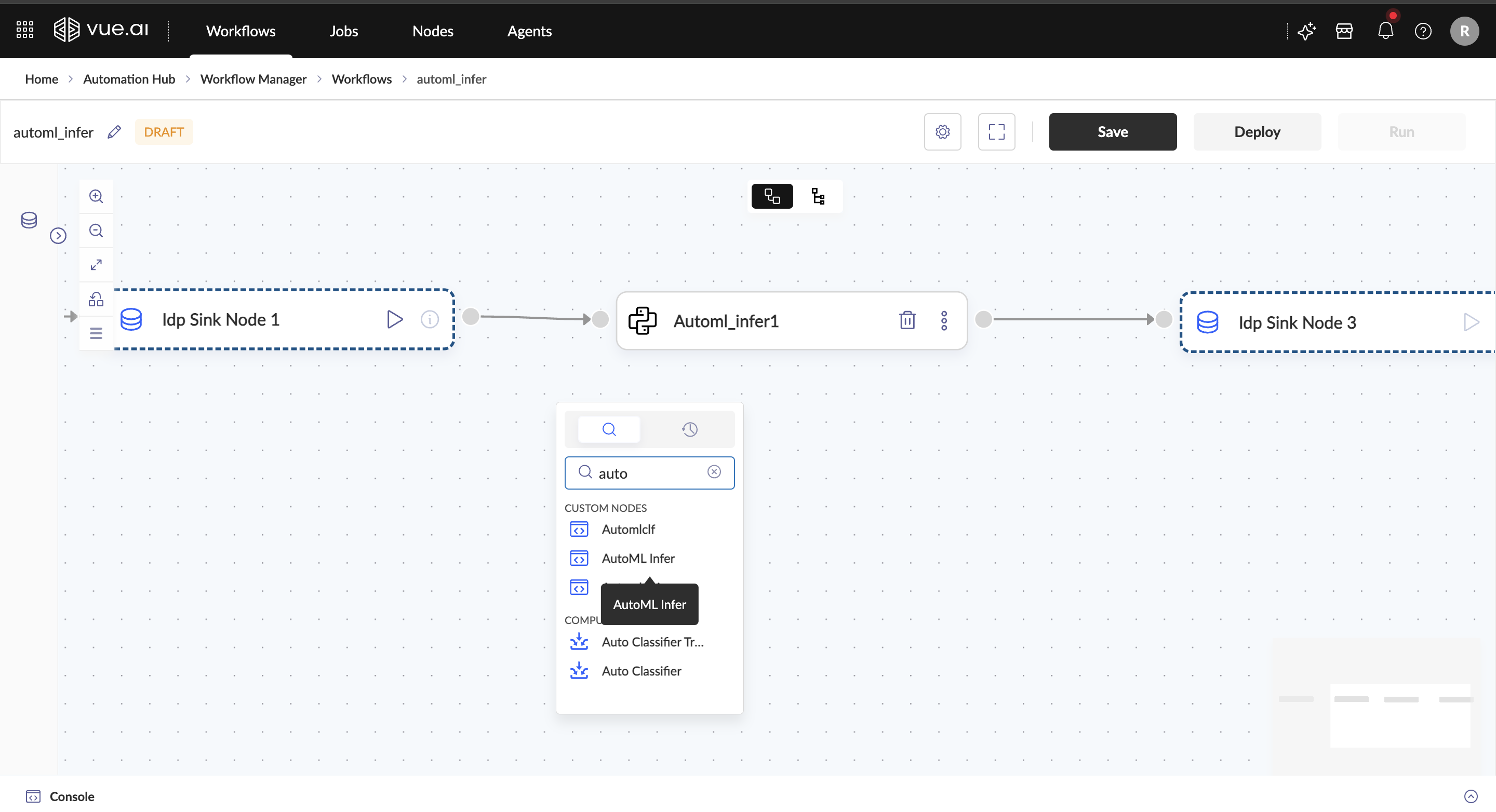

Nodes can be added in two ways:

- Drag & Drop: Hover over the Nodes section, search for the required node, and drag it into the workflow canvas.

- Right-Click: Right-click on the workflow canvas, search for the node, and add it.

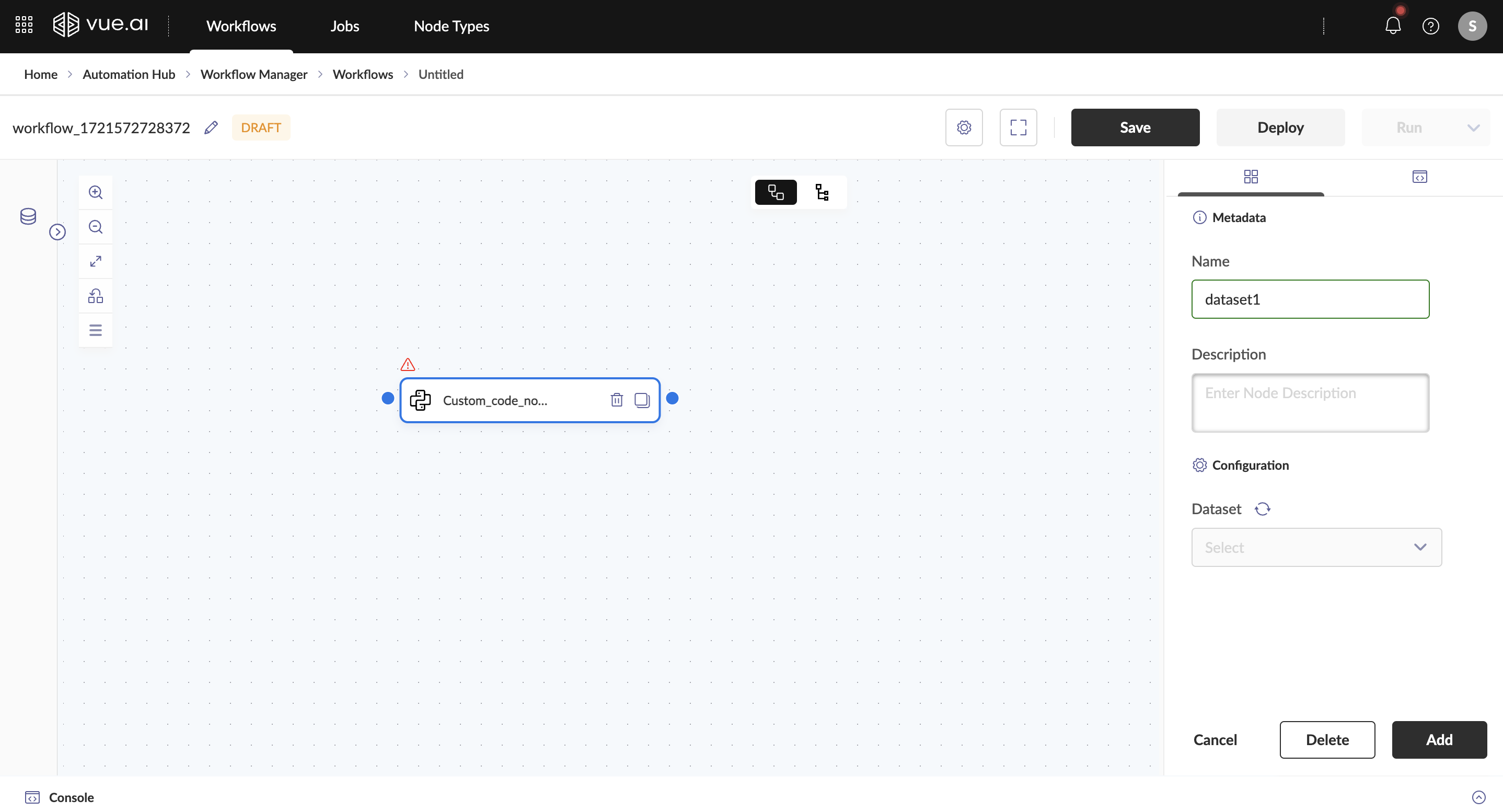

Load a dataset by adding the Dataset Reader Node to the workspace.

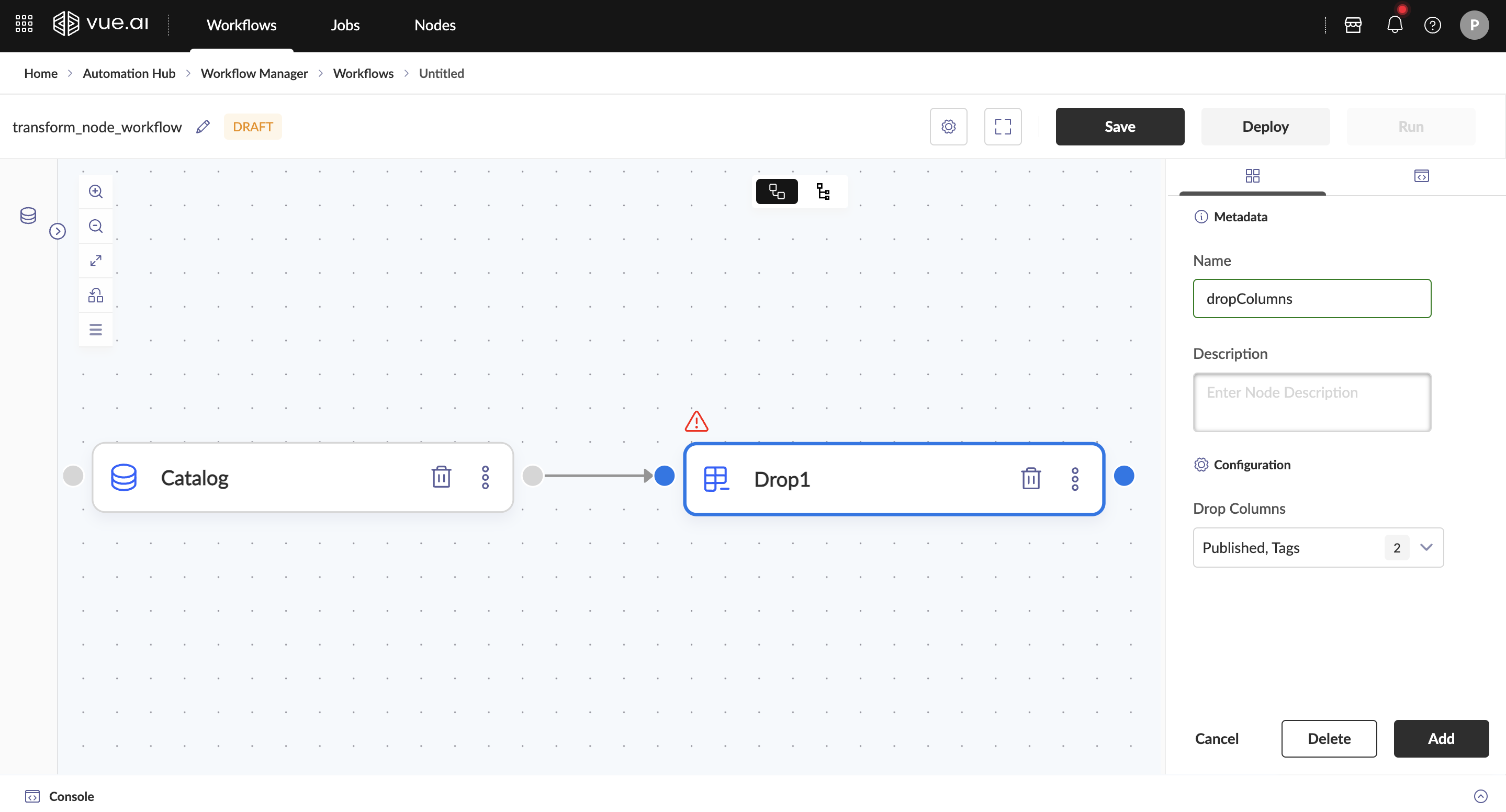

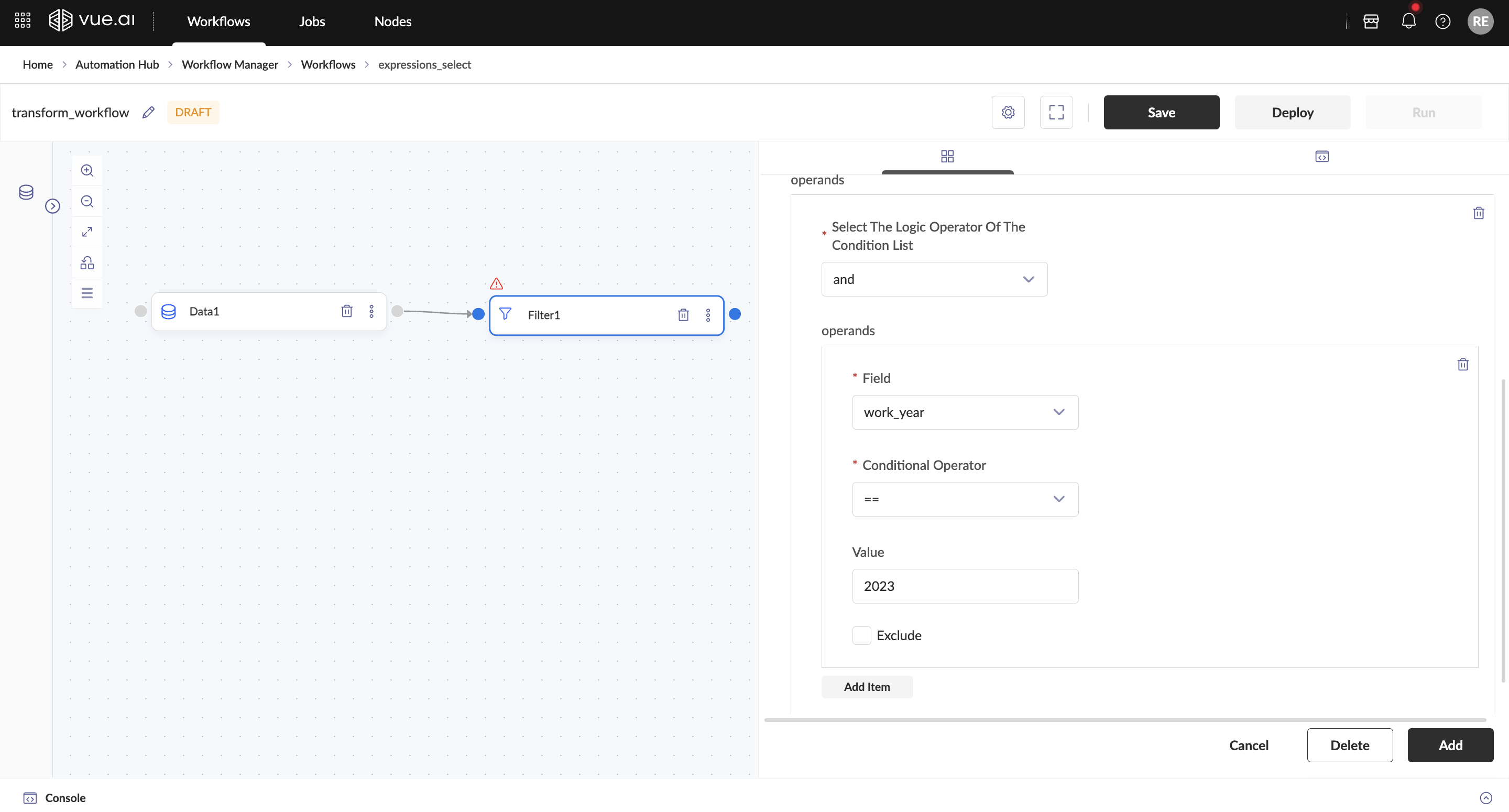

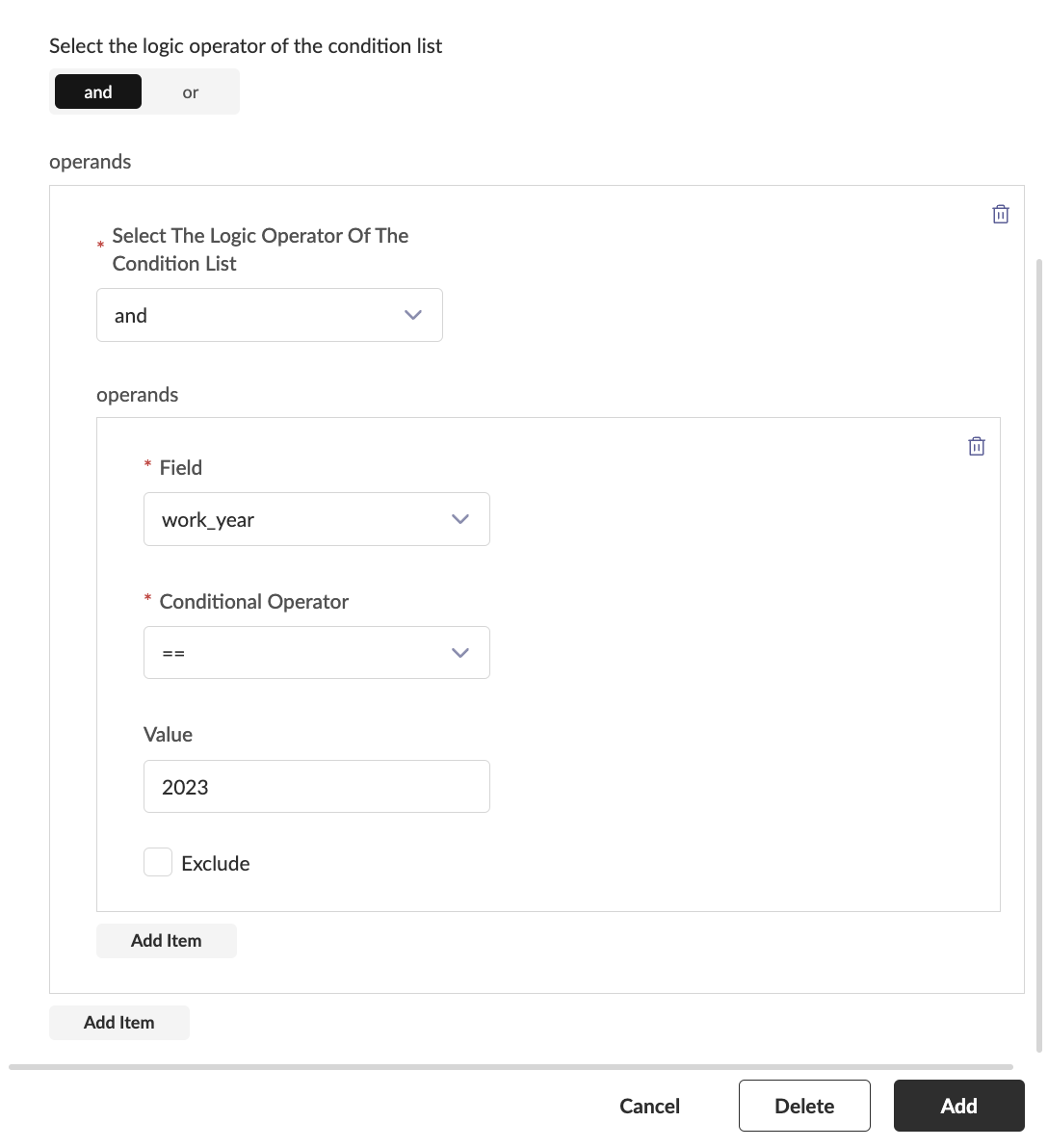

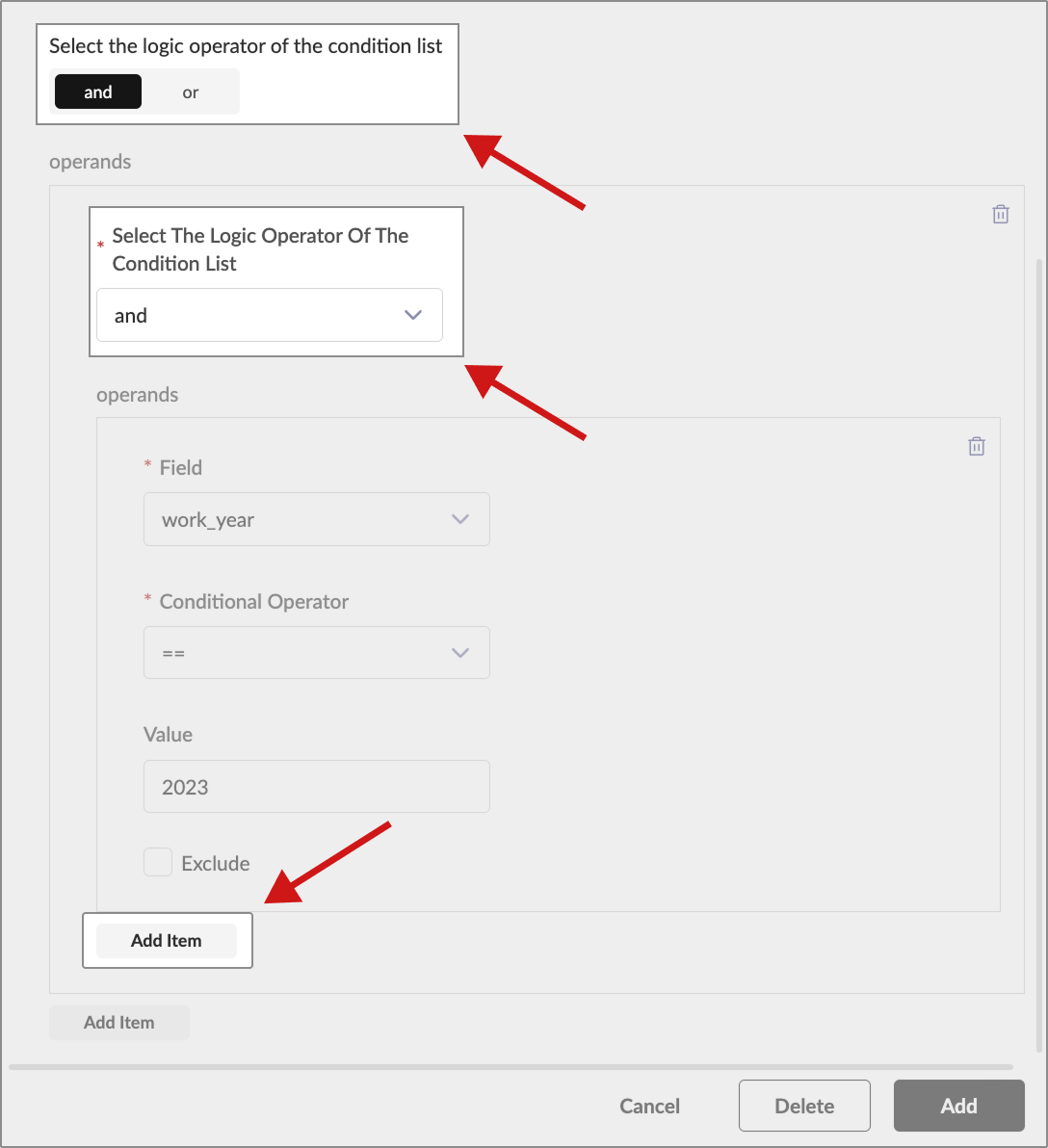

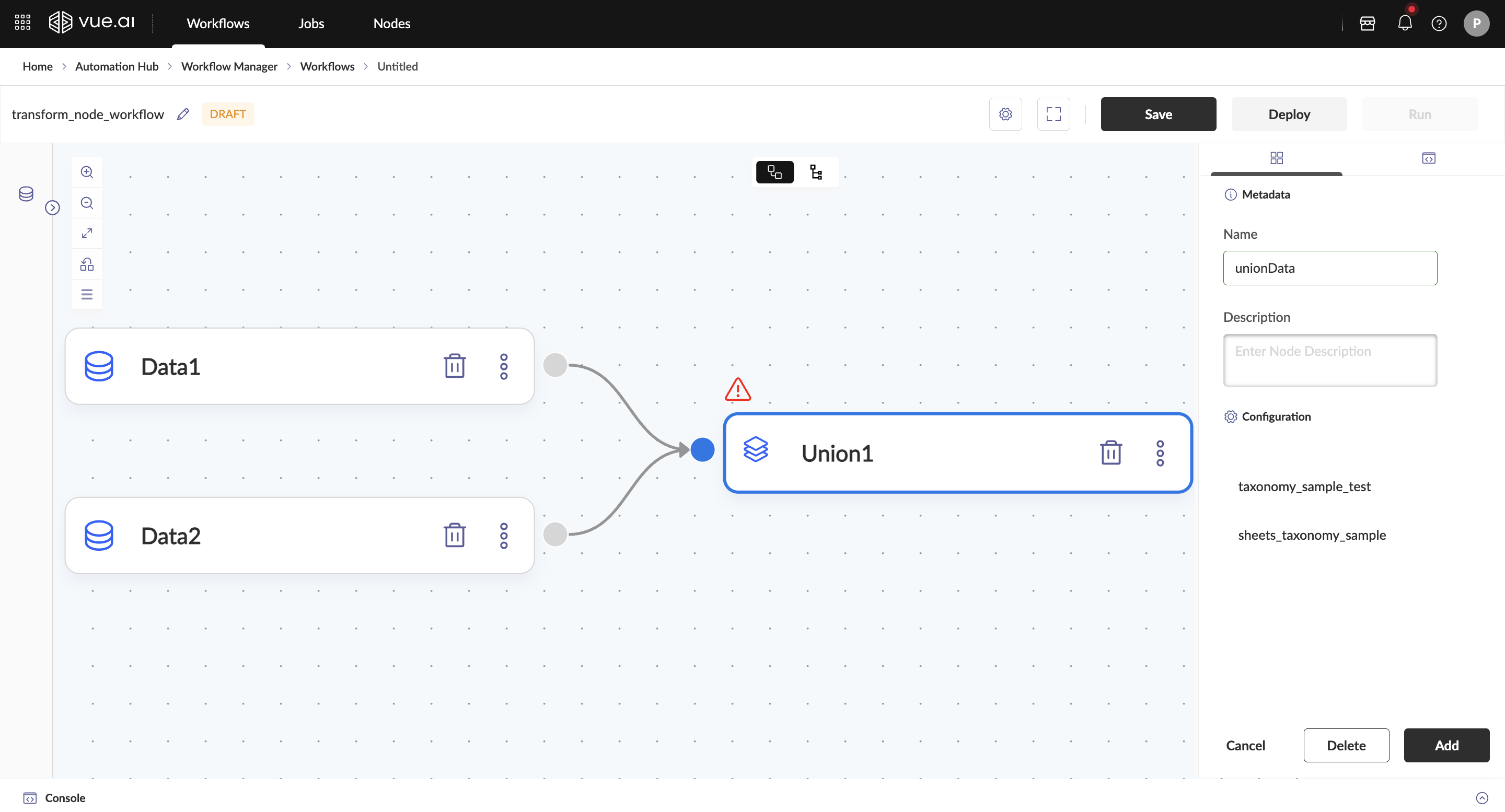

Transform Nodes include: SELECT, JOIN, GROUP BY, UNION, PARTITION, DROP, SORT.

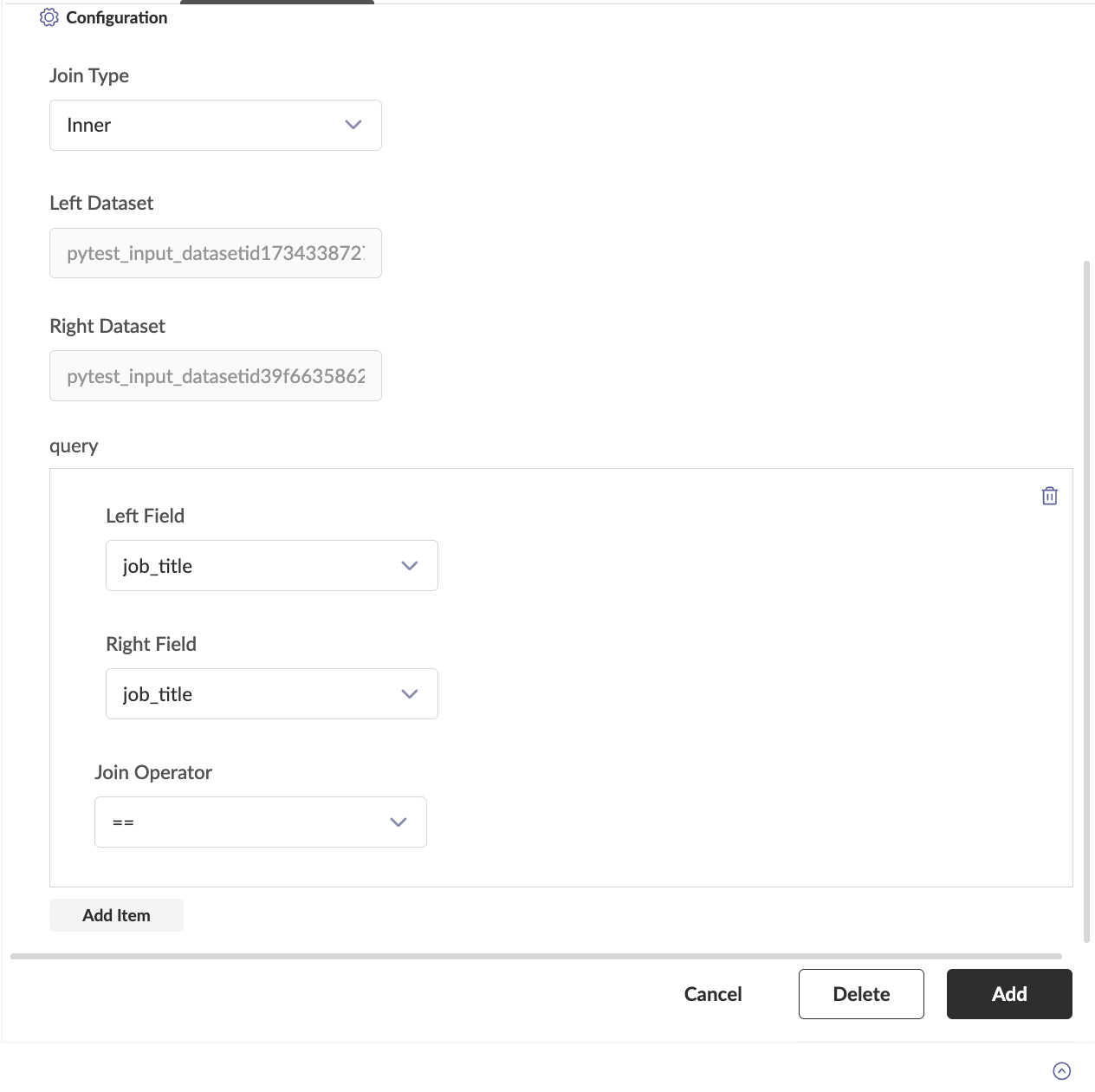

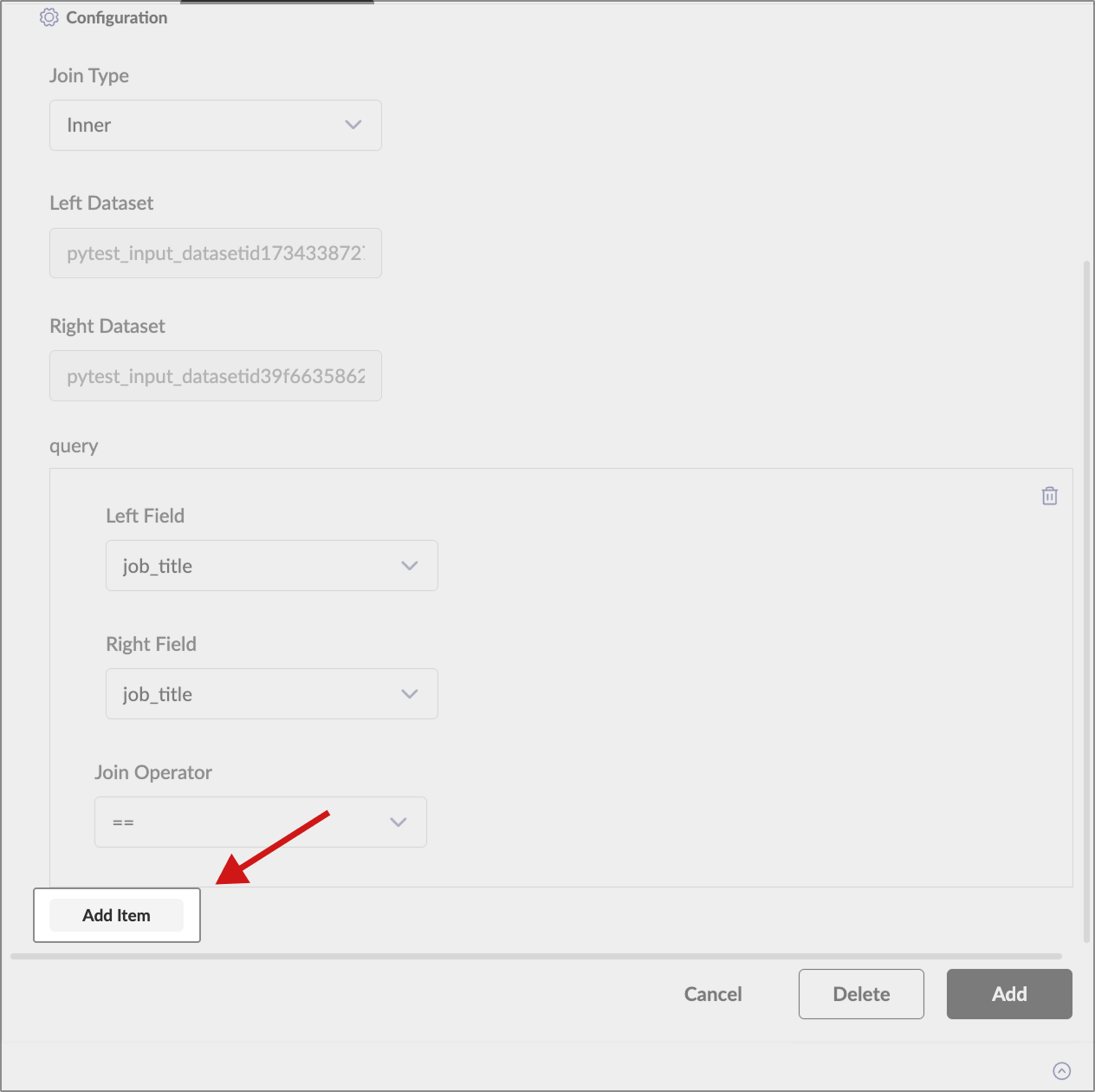

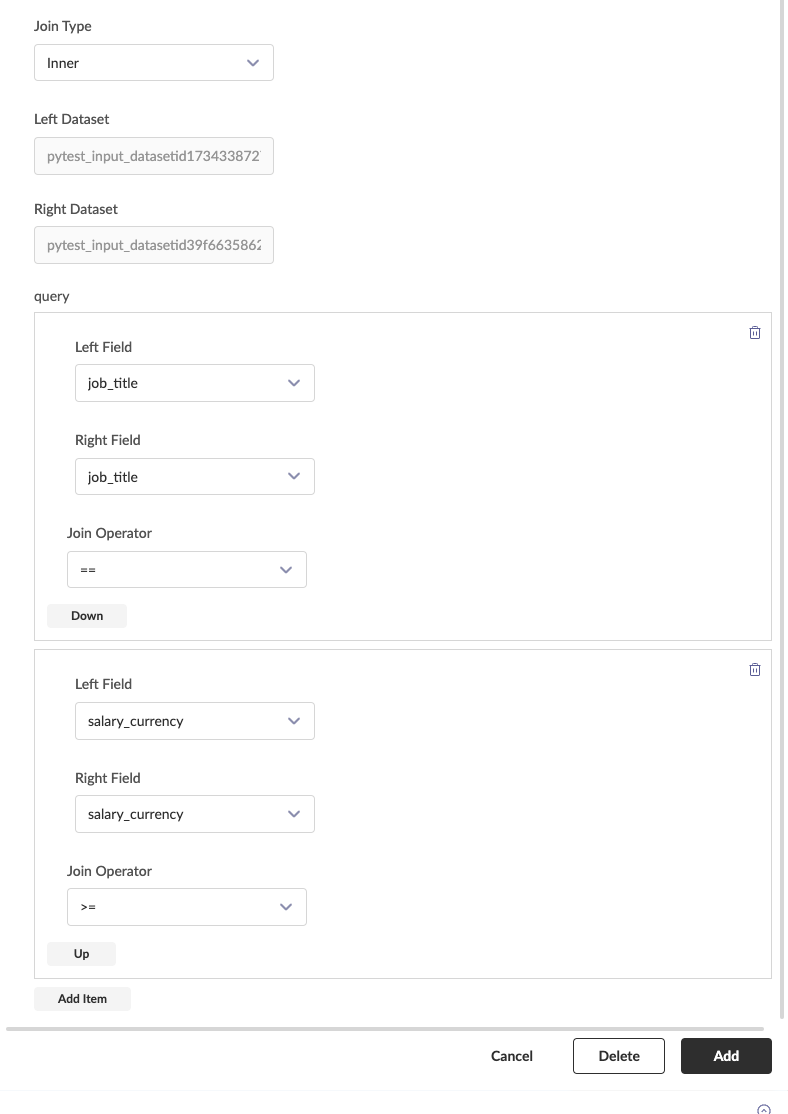

- SELECT: Extract specific columns or rows based on criteria.

- JOIN: Merge rows from multiple tables using a related column.

- GROUP BY: Group rows by specified columns, often used with aggregate functions.

- UNION: Combine result sets from multiple queries, eliminating duplicates.

- PARTITION: Divide the result set into partitions for window function operations.

- DROP: Permanently remove a table, view, or database object.

- SORT: Arrange the result set in ascending or descending order based on specified columns.
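For readers who think in Pandas, the operations described above map roughly onto the DataFrame calls below. This is a sketch of the underlying ideas, not the implementation of the Transform Nodes: the sample tables and column names are invented for illustration, and DROP is omitted because its node-level behavior is not detailed here.

```python
import pandas as pd

# Invented sample data for illustration.
orders = pd.DataFrame({
    "order_id": [1, 2, 3, 4],
    "customer_id": [10, 10, 20, 30],
    "amount": [50.0, 25.0, 40.0, 10.0],
})
customers = pd.DataFrame({
    "customer_id": [10, 20, 30],
    "region": ["EU", "US", "EU"],
})

# SELECT: keep specific columns and only the rows that match a criterion.
selected = orders.loc[orders["amount"] >= 25.0, ["order_id", "customer_id", "amount"]]

# JOIN: merge rows from two tables using a related column.
joined = selected.merge(customers, on="customer_id", how="inner")

# GROUP BY: group rows and apply an aggregate function.
grouped = joined.groupby("region", as_index=False)["amount"].sum()

# UNION: stack two result sets and eliminate duplicate rows.
unioned = pd.concat([customers, customers.head(1)], ignore_index=True).drop_duplicates()

# PARTITION: compute a window-style value (a rank) within each partition.
partitioned = joined.assign(
    rank_in_region=joined.groupby("region")["amount"].rank(method="dense", ascending=False)
)

# SORT: order the result by a column, descending.
sorted_totals = grouped.sort_values("amount", ascending=False).reset_index(drop=True)

print(sorted_totals)
```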

Click on the node after adding it to the workflow canvas. Define the parameters for that node.

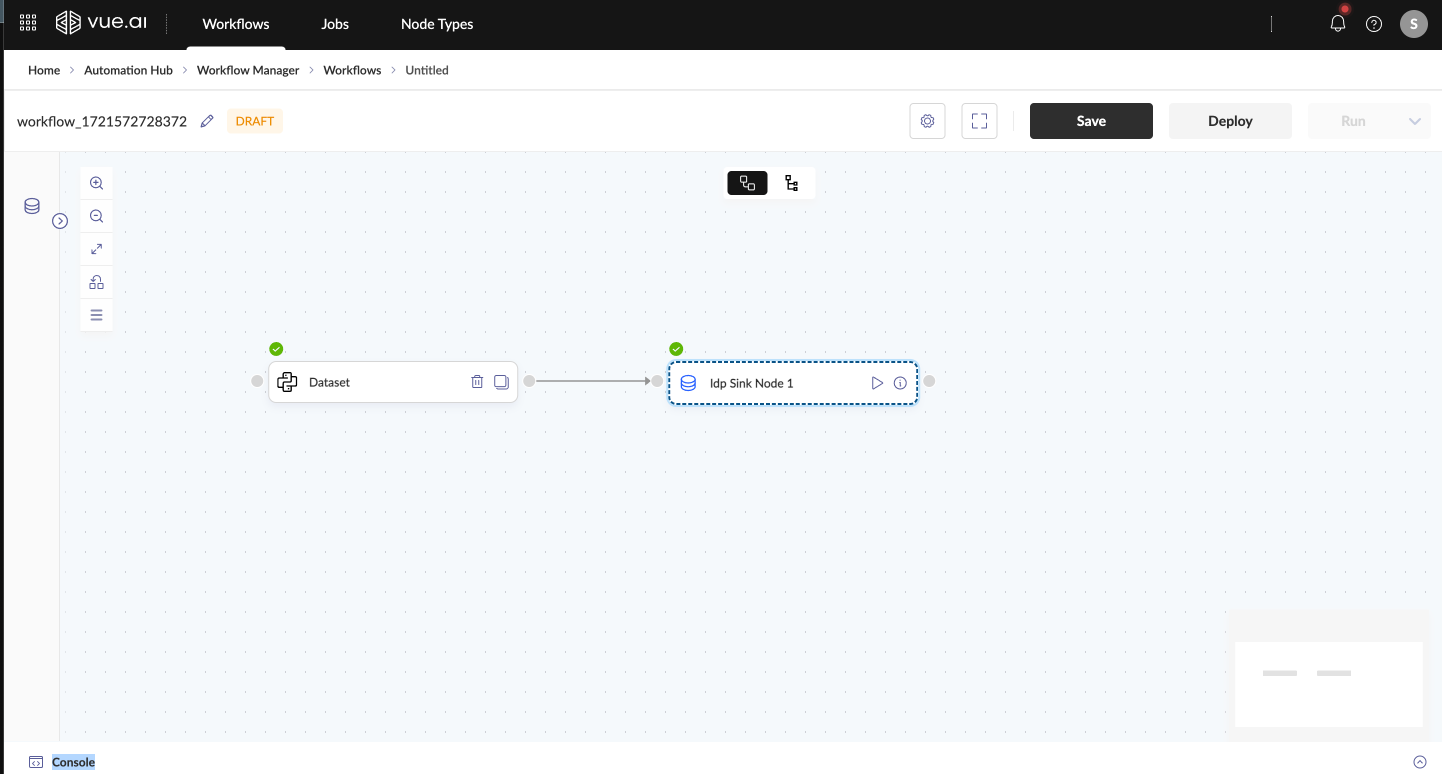

Drag the end of one node to connect it to the start of another.

Expected Outcome: Once all nodes are added and linked, the workflow structure is complete.

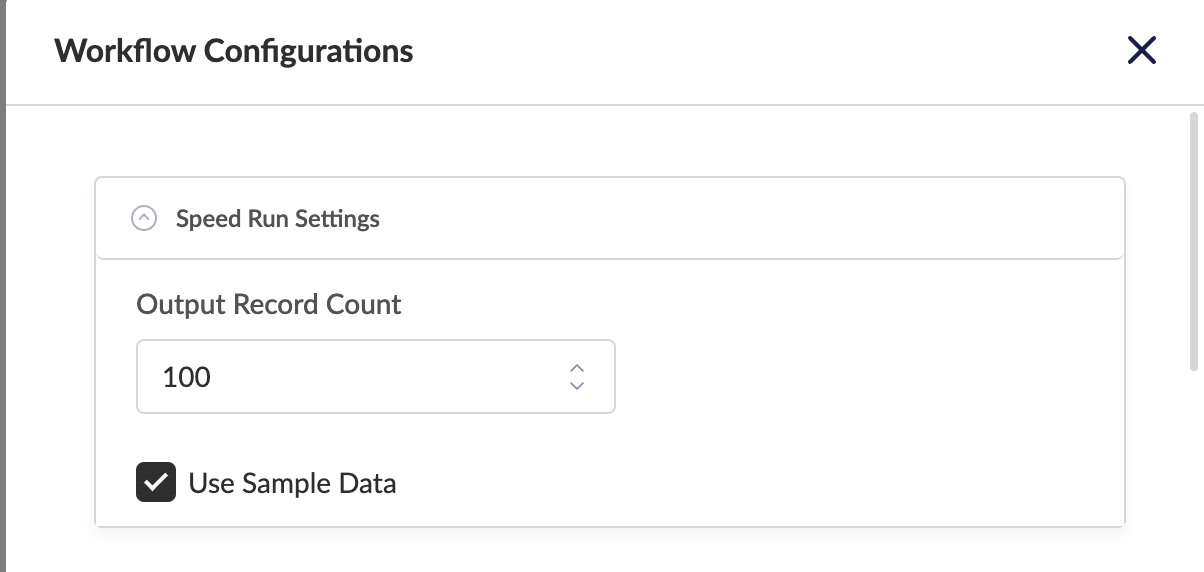

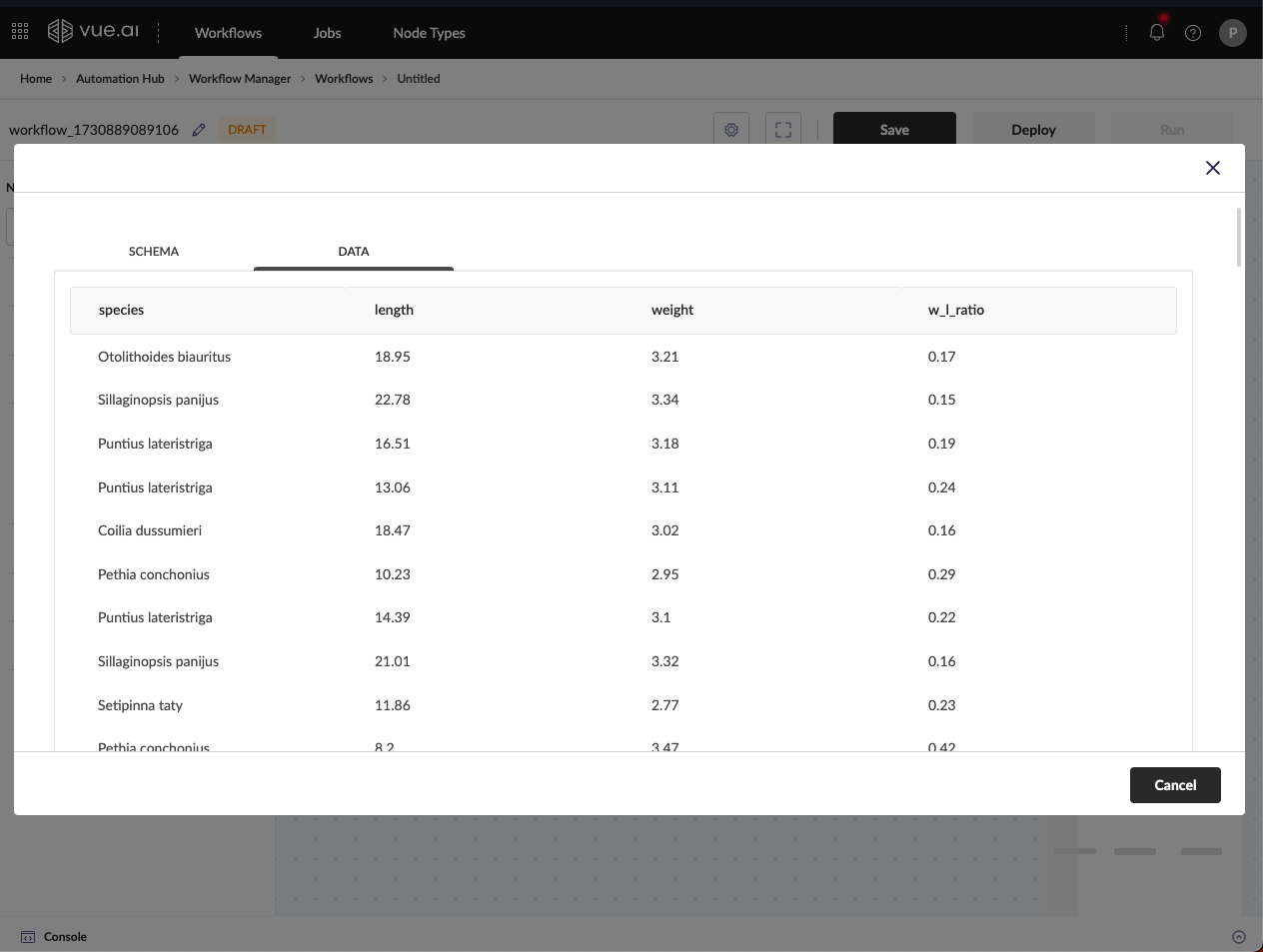

SpeedRun the Workflow

- SpeedRun triggers the workflow in synchronous mode using Pandas. It is intended for lighter workloads and returns results quickly, so that the workflow logic can be validated faster.

- Click the run icon on the sink node after each transformation to execute the speed run.

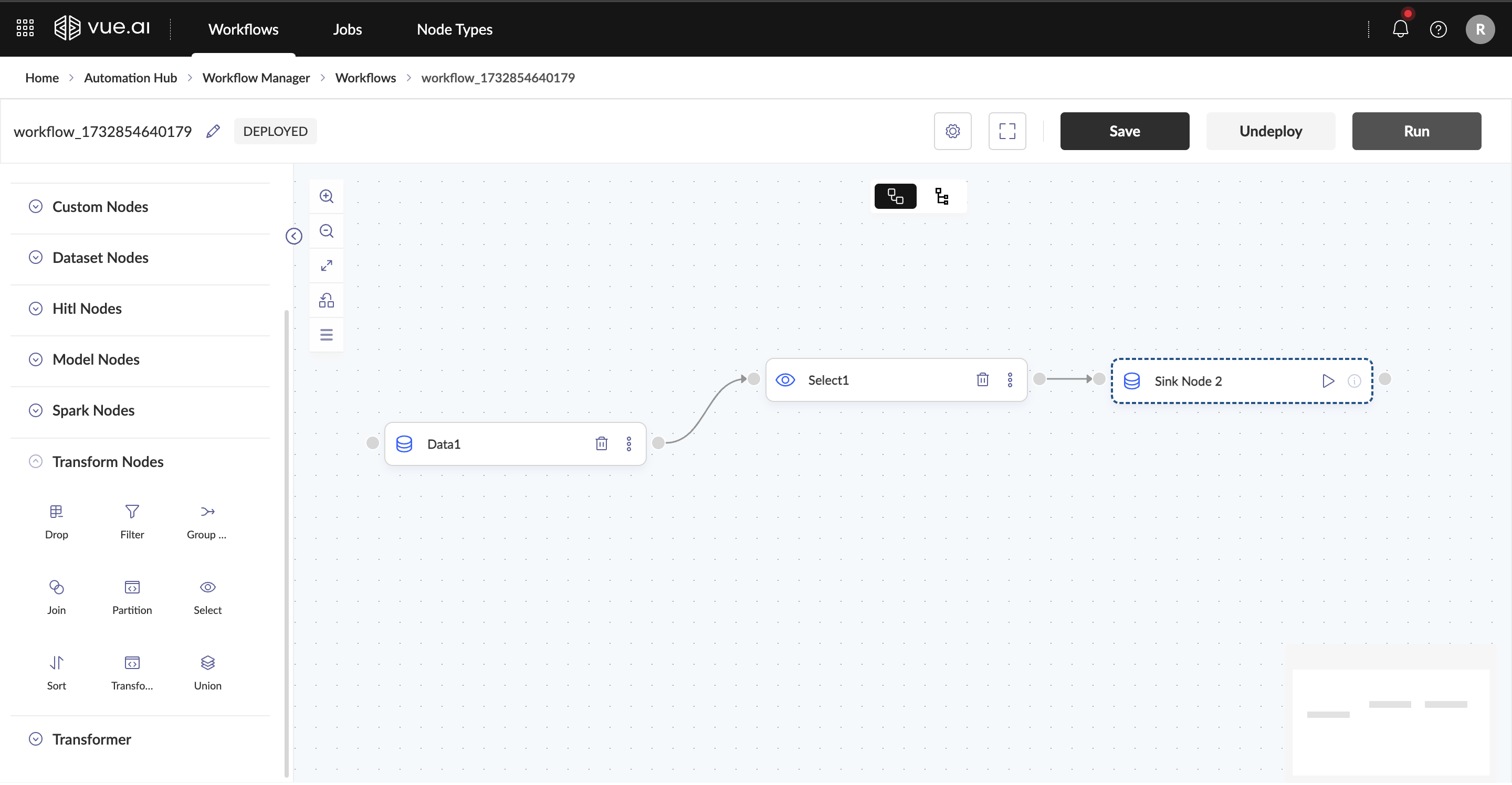
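The idea behind a speed run, checking each transformation quickly on a small amount of data before a full deployment, can be approximated in plain Pandas. The helper below is a hypothetical sketch and does not reflect how the platform executes a speed run; the function name, the `sample_rows` parameter, and the sample data are assumptions.

```python
import pandas as pd


def speed_run(df: pd.DataFrame, steps: list, sample_rows: int = 100) -> list:
    """Apply each step in order to a small sample and keep every intermediate result."""
    current = df.head(sample_rows)
    results = []
    for step in steps:
        current = step(current)      # runs synchronously, one transformation at a time
        results.append(current)
    return results


data = pd.DataFrame({"sku": ["a", "b", "c", "d"], "qty": [5, 0, 3, 0]})
steps = [
    lambda d: d[d["qty"] > 0],       # filter out empty stock
    lambda d: d.sort_values("qty"),  # sort ascending by quantity
]

outputs = speed_run(data, steps, sample_rows=3)
for index, output in enumerate(outputs, start=1):
    print(f"after step {index}:")
    print(output)
```

Inspecting the result after every step mirrors the practice of running the sink node after each transformation: a logic error shows up at the step that introduced it.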

Deploy and Run the Workflow

To modify the workflow configuration, click the gear icon at the top of the canvas.

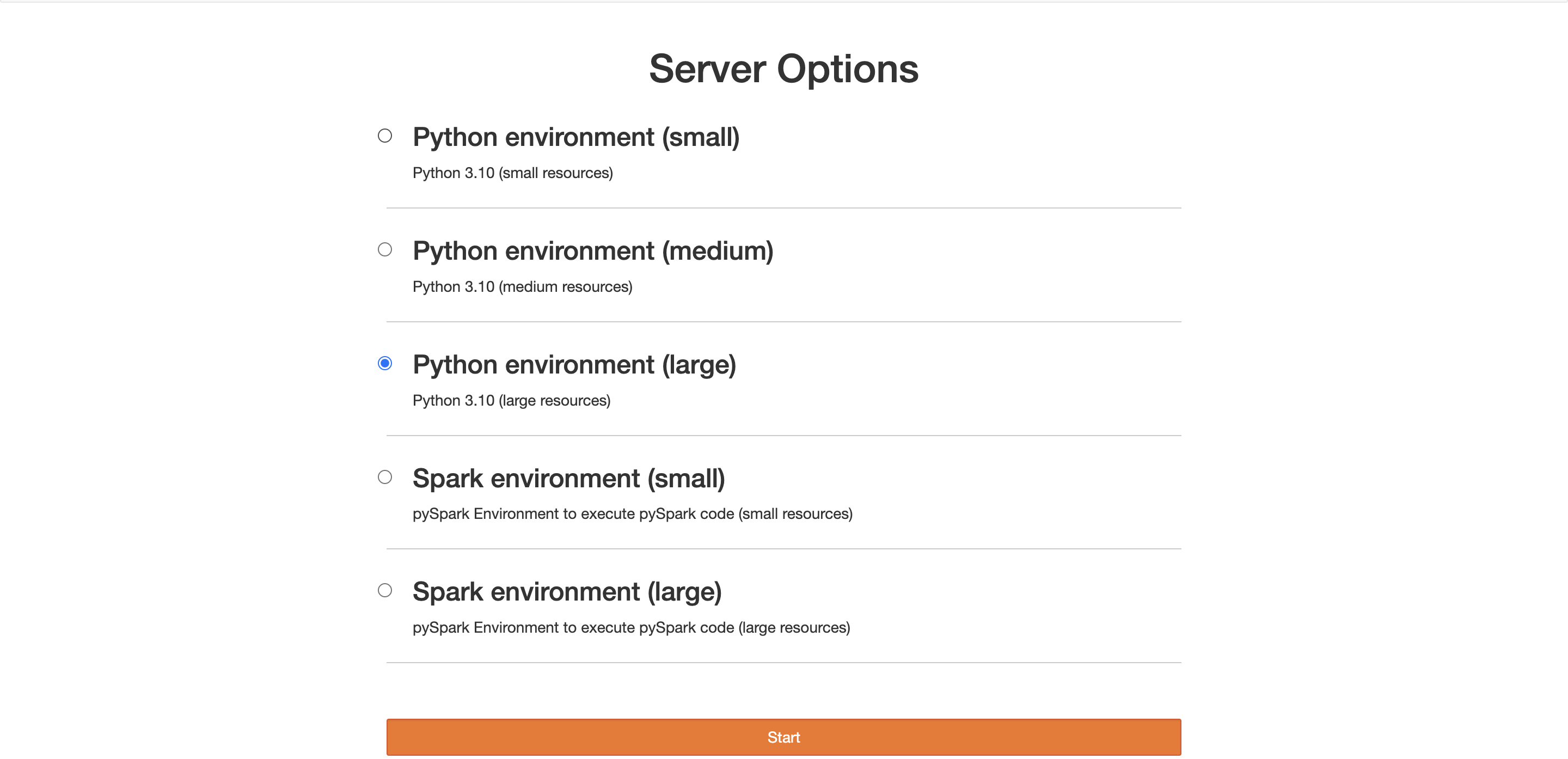

Select the engine (Pandas or Spark) on which the workflow will be deployed.

Click Deploy to initiate the deployment process.

Once deployed, click Run to execute the workflow.

Navigate to the Jobs Page to check the workflow job status.

Expected Outcome: The workflow is successfully deployed and executed.

Scheduling the Workflow